Integrating AI agents into Integrated Development Environments (IDEs) is already part of the everyday workflow. Most software engineers use AI code-completion tools such as GitHub Copilot, and many practice "vibe coding."

Instruction files for AI agents are now common in many projects: AGENT.md, AGENTS.md, CLAUDE.md, and others. These files usually tell AI agents how to interact with the project, which tools to use, and which guidelines to follow. For example, they might describe how the codebase is organized, which libraries it uses, what the project goals are, and so on.

Ideal instructions should let an AI agent complete complex tasks autonomously, for example: "I need a new feature that lets users add comments to text pages on the website." To do this, the agent needs all the relevant information, such as "how to add new API endpoints," "how to add new database models," "how to create a database migration," and "how to link the UI to the backend."

However, I haven't yet seen instructions that truly work this way. All of this information is usually too much for a single instruction file: if we describe every detail, it will overload the AI agent's context.

Remember that an AI agent's instructions are sent to the Large Language Model (LLM) along with every user prompt. Overly long instructions distract the model, because they always include everything rather than only what is relevant to the current task.

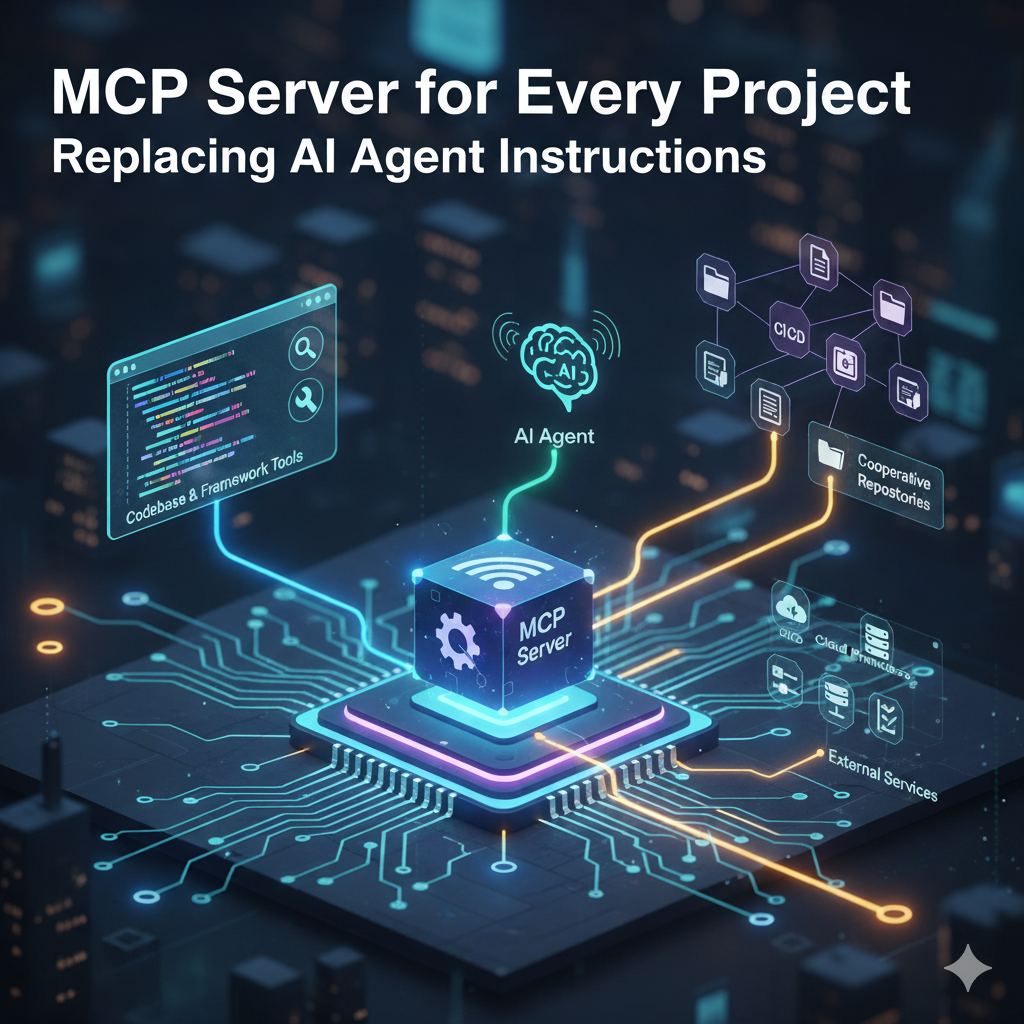

Solution: A Custom MCP Server for Every Code Project

And here's the idea: why not create a custom Model Context Protocol (MCP) server for every project? This can replace AI agent instruction files and offer much greater flexibility.

Before going further: if you're not familiar with the Model Context Protocol, I recommend reading the official documentation: https://modelcontextprotocol.io/docs/getting-started/intro.
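For orientation: MCP messages are JSON-RPC 2.0, and a client invokes a server tool with a `tools/call` request that carries the tool `name` and its `arguments`. The stdlib-only sketch below illustrates just that dispatch step for one hypothetical tool, `project_info`. It deliberately omits the initialization handshake, `tools/list`, and the transport, which an official MCP SDK would normally handle for you.

```python
import json
from typing import Any, Callable

# Hypothetical registry of project tools: tool name -> Python function.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(func: Callable[..., str]) -> Callable[..., str]:
    """Register a function as a tool under its own name."""
    TOOLS[func.__name__] = func
    return func


@tool
def project_info() -> str:
    # Hypothetical tool; a real server would read this from the repository.
    return "Example project: REST API backend, SQL database, web frontend"


def _reply(request_id: Any, **payload: Any) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, **payload})


def handle_message(raw: str) -> str:
    """Handle a single 'tools/call' request (no handshake, no transport)."""
    request = json.loads(raw)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _reply(request_id, error={"code": -32601, "message": "Method not found"})
    params = request.get("params", {})
    func = TOOLS.get(params.get("name"))
    if func is None:
        return _reply(request_id, error={"code": -32602, "message": f"Unknown tool: {params.get('name')}"})
    text = func(**params.get("arguments", {}))
    # Tool output goes back as a list of content items; plain text is the simplest kind.
    return _reply(request_id, result={"content": [{"type": "text", "text": text}], "isError": False})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "project_info", "arguments": {}}}
    print(handle_message(json.dumps(call)))
```

The point of the sketch is that every project capability becomes a named entry the LLM can discover and call, instead of a paragraph it must always carry in its context.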

There are several advantages to using an MCP server for every project:

- Dynamic Instructions: An MCP server can provide dynamic instructions upon request from the LLM. It could support a tree of knowledge and provide only the relevant information for the current task. The LLM will decide which "article" to read, and the MCP server will provide only that specific content.

- Access to Framework Tools: Many software frameworks have their own tools for working with the codebase. For example, Django has management commands to create apps, generate and apply database migrations, start a development server, and so on. The MCP server can expose these tools to the LLM, allowing it to use them directly.

- Project-Specific Logic: The MCP server can encode project-specific knowledge, such as the architecture, coding conventions, and best practices. This helps the LLM generate consistent code. For instance, the MCP server can provide an `add_new_feature` tool. If we know that a new feature in our project means adding an "API router with a default endpoint," a "database model," "unit tests," and so forth, the MCP server can implement all of this logic, so the LLM simply calls this single tool.

- Additional Tools: Many projects have their own tools, such as linters, formatters, test runners, package builders, and "GUI builders." The MCP server can expose these tools to the LLM, allowing it to use them directly. We can prompt the LLM with commands like "run tests," "build package," or "format code," and the MCP server will run the project's own tools.

- Integration with Services: Many projects are integrated with external services, like CI/CD pipelines, cloud providers, monitoring tools, and project management tools. The MCP server can implement tools to interact with these services, enabling the LLM to manage deployments, monitor performance, and handle incidents directly from the codebase. Note: these services often have their own MCP servers, so adding a custom tool to a project's MCP server might seem redundant. However, I see benefits in having a unified interface for all project-related tools and services, which I'll explain below.

- Connection to Related Repositories: Many projects have related repositories, such as documentation sites, design systems, shared libraries, and microservices. The MCP server can implement tools to interact with these repositories, allowing the LLM to access and update related resources seamlessly.
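The dynamic-instructions idea from the first point can be sketched as a small knowledge tree that the LLM browses on demand. This is a minimal illustration under assumptions: the topic paths, the article texts, and the functions `list_topics`, `list_articles`, and `read_article` are all hypothetical, and in a real server each function would be registered as an MCP tool (or the articles exposed as MCP resources).

```python
# Hypothetical knowledge tree: "branch/topic" path -> short how-to article.
# The directories mentioned in the articles are illustrative, not a real layout.
KNOWLEDGE: dict[str, str] = {
    "backend/api-endpoints": "Add a router module under api/routers/ and register it in the app.",
    "backend/database-models": "Define the model under models/, then generate a migration.",
    "backend/migrations": "Generate and apply migrations with the framework's migration commands.",
    "frontend/linking-ui": "Call the new endpoint from the UI through the shared API client.",
}


def list_topics() -> list[str]:
    """Return the top-level branches of the tree."""
    return sorted({path.split("/", 1)[0] for path in KNOWLEDGE})


def list_articles(prefix: str = "") -> list[str]:
    """Return article paths under a branch so the LLM can choose what to read."""
    return sorted(path for path in KNOWLEDGE if path.startswith(prefix))


def read_article(path: str) -> str:
    """Return only the requested article, not the whole knowledge base."""
    if path not in KNOWLEDGE:
        raise KeyError(f"No article at {path!r}; call list_articles() first")
    return KNOWLEDGE[path]
```

A typical exchange would be `list_topics()`, then `list_articles("backend/")`, then `read_article("backend/migrations")`. Only the one article the LLM picked enters its context; the rest of the tree costs nothing until it is needed.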
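To make the `add_new_feature` idea concrete, here is a hedged sketch of what such a tool might do: render a router, a model, and a test stub from templates in a single call. The directory layout (`api/routers/`, `models/`, `tests/`) and the template contents are assumptions chosen for illustration; a real project would substitute its own conventions and framework-specific code.

```python
from pathlib import Path

# Hypothetical project layout and templates; replace with your project's conventions.
TEMPLATES: dict[str, str] = {
    "api/routers/{name}.py": (
        '"""API router for {name}."""\n\n\n'
        "def default_endpoint():\n"
        '    return {{"feature": "{name}"}}\n'
    ),
    "models/{name}.py": '"""Database model for {name}."""\n\n\nclass {cls}:\n    pass\n',
    "tests/test_{name}.py": (
        "from api.routers.{name} import default_endpoint\n\n\n"
        "def test_default_endpoint():\n"
        '    assert default_endpoint() == {{"feature": "{name}"}}\n'
    ),
}


def add_new_feature(root: Path, name: str) -> list[Path]:
    """Create router, model, and test stubs for a feature; return the created files."""
    if not name.isidentifier():
        raise ValueError(f"{name!r} is not a valid Python identifier")
    cls = "".join(part.capitalize() for part in name.split("_"))  # user_comments -> UserComments
    targets = {root / path.format(name=name): body for path, body in TEMPLATES.items()}
    # Check everything first so a clash never leaves a half-created feature behind.
    existing = [path for path in targets if path.exists()]
    if existing:
        raise FileExistsError(f"Refusing to overwrite: {existing}")
    for path, body in targets.items():
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(body.format(name=name, cls=cls))
    return list(targets)
```

For example, `add_new_feature(Path("."), "comments")` would create three files in one step. The LLM only has to decide on the feature name, and the project's conventions live in the server rather than in the prompt.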
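Exposing the project's own tools can be as simple as an allow-list of fixed commands. In the sketch below, the tool names and the commands behind them (`pytest`, `black`, and the PyPA `build` module) are assumptions standing in for whatever a given project actually uses. The LLM can only choose a name from the list, never compose an arbitrary shell command.

```python
import shlex
import subprocess
import sys

# Hypothetical mapping from tool names to the project's own commands.
# Replace these with your real test runner, formatter, and build commands.
PROJECT_COMMANDS: dict[str, list[str]] = {
    "run_tests": [sys.executable, "-m", "pytest", "-q"],
    "format_code": [sys.executable, "-m", "black", "."],
    "build_package": [sys.executable, "-m", "build"],
}


def run_project_tool(name: str, cwd: str = ".", timeout: float = 600) -> dict:
    """Run one allow-listed project command and return its exit code and output."""
    if name not in PROJECT_COMMANDS:
        raise ValueError(f"Unknown tool {name!r}; available: {sorted(PROJECT_COMMANDS)}")
    command = PROJECT_COMMANDS[name]
    completed = subprocess.run(command, cwd=cwd, capture_output=True, text=True, timeout=timeout)
    return {
        "command": shlex.join(command),
        "exit_code": completed.returncode,
        # Keep only the tail of the output so a noisy run doesn't flood the LLM's context.
        "output": (completed.stdout + completed.stderr)[-4000:],
    }
```

Returning the exit code alongside a truncated log gives the LLM enough to decide its next step (for example, read the failing test) without pasting thousands of lines into the conversation.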