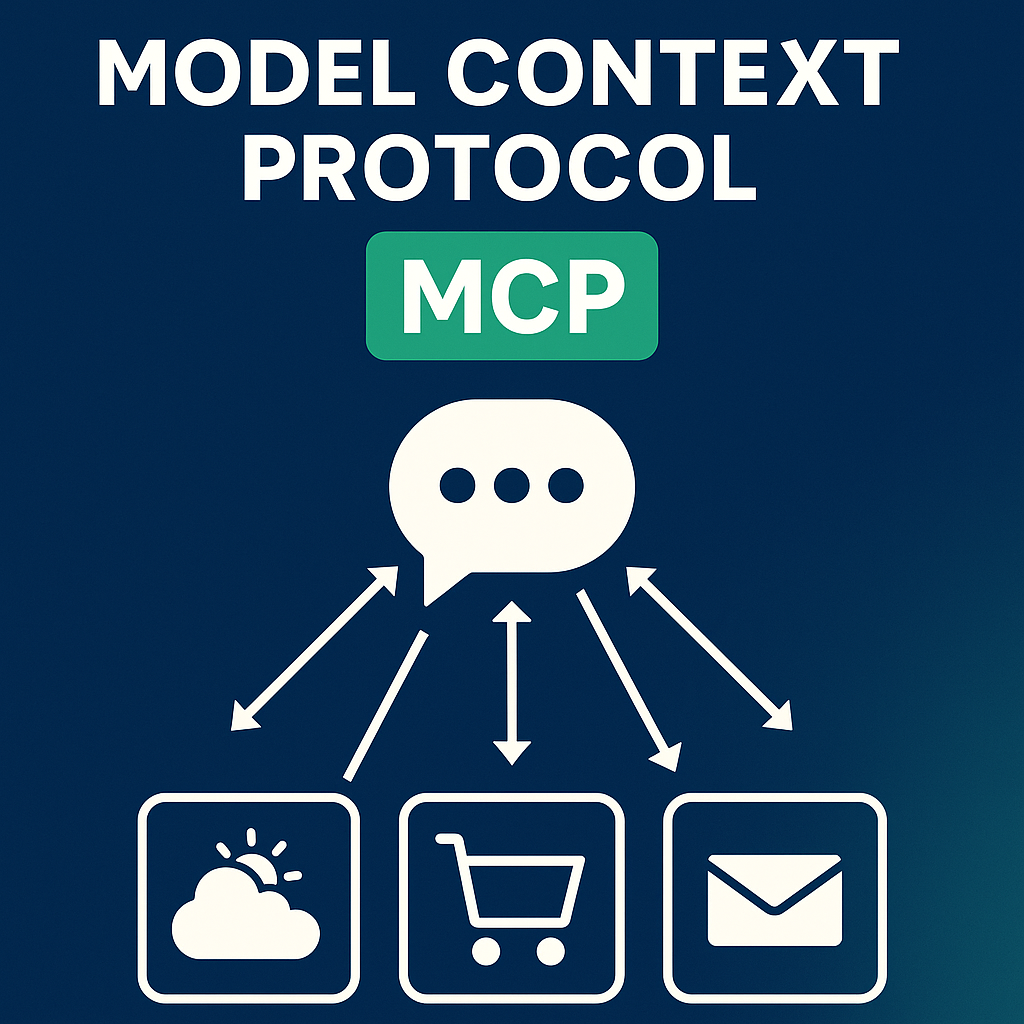

As large language models (LLMs) find real-world use, the need for flexible ways to connect them with external tools is growing. The Model Context Protocol (MCP) is an emerging standard for structured tool integration.

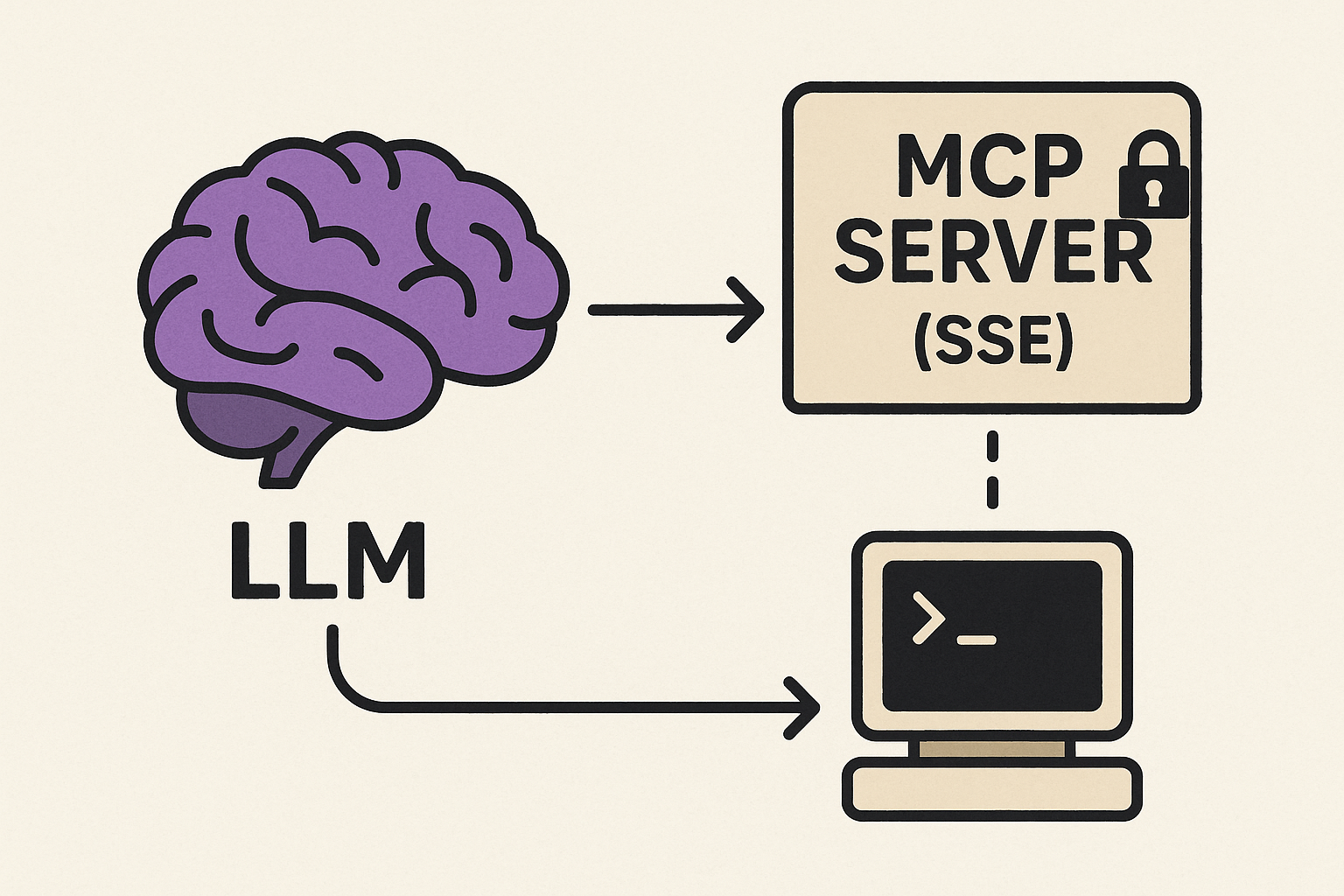

Most current tutorials focus on STDIO-based (Standard Input/Output) MCP servers, which must run locally, on the same machine as the client. MCP also supports SSE (Server-Sent Events), which allows remote, asynchronous communication over HTTP and is a better fit for scalable, distributed setups.
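
To make the SSE side more concrete, here is a minimal sketch of how a single server-sent event is framed on the wire: optional `event:` and one or more `data:` lines, terminated by a blank line. The helper name `format_sse_event` and the sample JSON-RPC payload are my own illustrative assumptions, not part of any MCP library.

```python
import json
from typing import Optional


def format_sse_event(data: str, event: Optional[str] = None) -> str:
    """Frame a payload as one Server-Sent Events message (hypothetical helper)."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # Each line of the payload needs its own "data:" field.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line marks the end of the event.
    return "\n".join(lines) + "\n\n"


# MCP messages are JSON-RPC 2.0; this payload is made up for illustration.
payload = json.dumps({"jsonrpc": "2.0", "id": 1, "result": {"ok": True}})
frame = format_sse_event(payload, event="message")
print(frame, end="")
```

A server keeps the HTTP response open and writes frames like this one whenever it has something to send, which is what lets the client receive results without polling.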

In this article, we'll show how to build an SSE-based MCP server to enable real-time interaction between an LLM and external tools.

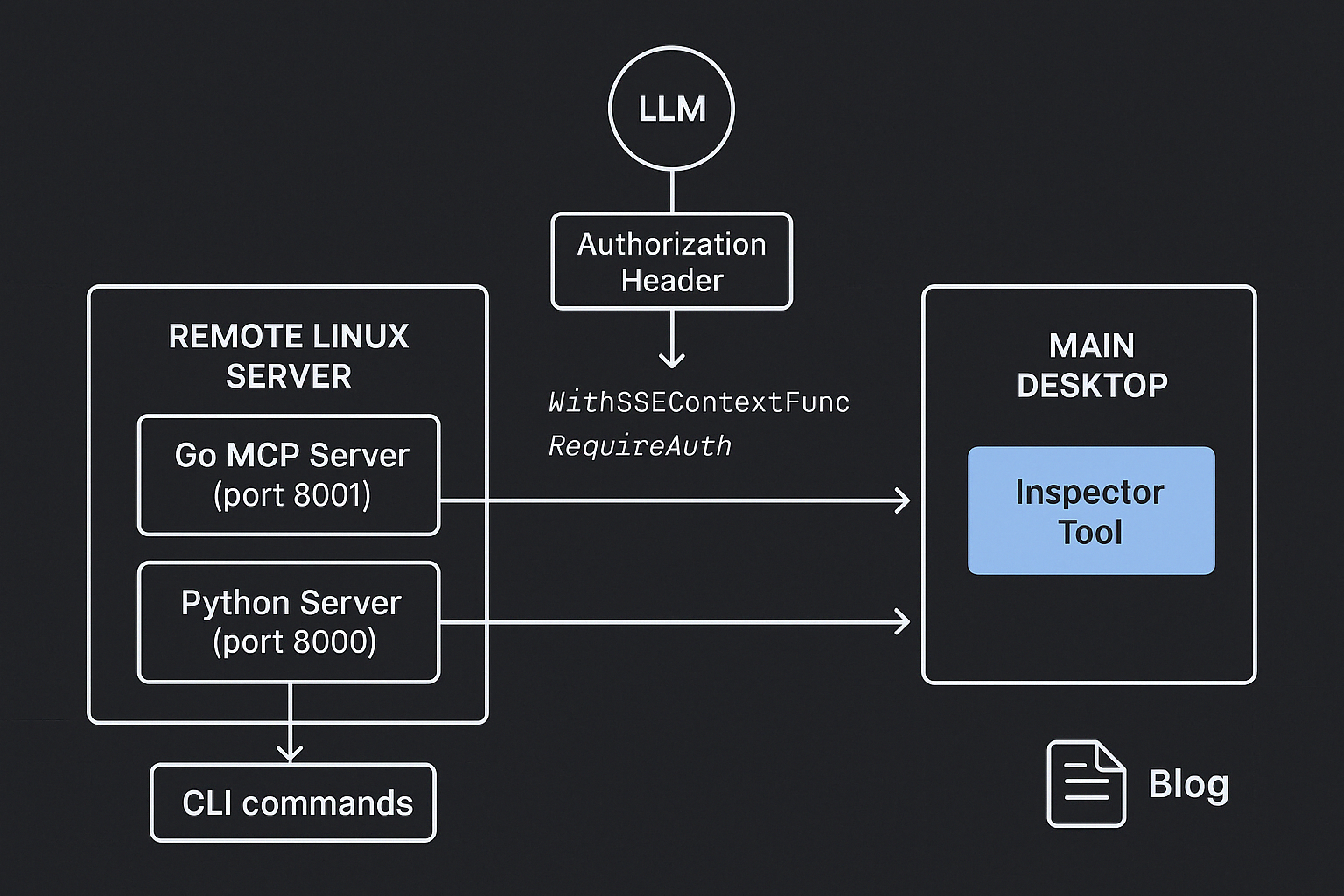

For this example, I've chosen an "Execute any command on my Linux" tool as the backend for the MCP server. Once connected to an LLM, this setup lets the model run commands on a Linux instance and manage it directly.
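
As a rough idea of what such a tool does underneath, the sketch below runs a shell command with Python's standard `subprocess` module and returns its output. The function name `run_command`, the result dictionary layout, and the 30-second default timeout are assumptions made for illustration; the actual tool may look different.

```python
import subprocess


def run_command(command: str, timeout: float = 30.0) -> dict:
    """Run a shell command and collect its result (hypothetical helper)."""
    try:
        completed = subprocess.run(
            command,
            shell=True,           # lets the caller pass a full shell line
            capture_output=True,  # capture stdout and stderr
            text=True,            # decode output to str
            timeout=timeout,      # stop commands that hang
        )
    except subprocess.TimeoutExpired:
        return {
            "exit_code": None,
            "stdout": "",
            "stderr": f"timed out after {timeout} seconds",
        }
    return {
        "exit_code": completed.returncode,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


result = run_command("echo hello")
print(result["exit_code"], result["stdout"].strip())
```

Because `shell=True` executes whatever string it receives, a tool like this gives the model the same power as the account it runs under, which is why it should run as a restricted user and behind some form of access control.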

I'll also show how to add a basic security layer by requiring an authorization token on requests to the MCP server.
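
The core of a token check can be sketched in a few lines. This version assumes the client sends the token in an `Authorization: Bearer <token>` header and that headers arrive as a plain dictionary; both are assumptions on my part, and `is_authorized` is a hypothetical helper name rather than a framework API.

```python
import hmac


def is_authorized(headers: dict, expected_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header (hypothetical helper)."""
    value = headers.get("Authorization", "")
    scheme, _, token = value.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # compare_digest compares in constant time to avoid leaking the token
    # through timing differences.
    return hmac.compare_digest(token.strip().encode(), expected_token.encode())


print(is_authorized({"Authorization": "Bearer s3cret"}, "s3cret"))
print(is_authorized({"Authorization": "Bearer wrong"}, "s3cret"))
```

In a real server the headers come from the web framework, which usually treats header names case-insensitively, and a failed check would typically result in a 401 response before any tool is invoked.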