Recently, I released a new version of CleverChatty with built-in support for the A2A (Agent-to-Agent) protocol. This addition enables AI agents to call each other as tools, opening the door to more dynamic, modular, and intelligent agent systems.

🔄 What Is the A2A Protocol?

The A2A protocol defines a standard for communication and collaboration between AI agents. It allows one agent to delegate tasks to another, much like how humans might assign work to collaborators with specific expertise.

Many blog posts and articles describe the A2A protocol and provide examples of an A2A client calling an A2A server. However, few explain how an AI agent decides when and why to call another agent in a real scenario.

Let’s consider an example:

Imagine there's a specialized AI agent called "Document Summarizer", exposed via the A2A protocol. Another agent — a general-purpose chat assistant with access to an LLM — receives this user query:

“I need a summary of the documents in this folder.”

How does the chat agent know to invoke the Document Summarizer agent? How does the LLM know that such an expert even exists?

🧠 The Answer: LLM Tool Support

The key lies in tool integration for LLMs — the same strategy used when calling MCP tools.

By registering all available A2A agents as tools, we allow the LLM to decide, based on context, when to use them. For the LLM, MCP and A2A tools appear the same. The only difference is the communication protocol — MCP vs A2A — behind the scenes.
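The idea can be sketched in a few lines of Python. This is only an illustration, not CleverChatty's actual code: the `Tool` class, the two placeholder call functions, and the tool names are all hypothetical. What it shows is that the catalog given to the LLM carries no protocol information, so the choice between MCP and A2A only matters at dispatch time.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    """What the LLM sees: a name, a description, and a way to call it."""
    name: str
    description: str
    call: Callable[[str], str]  # hides whether MCP or A2A does the work


def call_mcp_tool(text: str) -> str:
    # Placeholder for a real MCP request (hypothetical).
    return f"[mcp] listed files for: {text}"


def call_a2a_agent(text: str) -> str:
    # Placeholder for a real A2A request (hypothetical).
    return f"[a2a] summary of: {text}"


def tool_catalog(tools: List[Tool]) -> List[Dict[str, str]]:
    """Build the list handed to the LLM; it contains no protocol details."""
    return [{"name": t.name, "description": t.description} for t in tools]


def dispatch(tools: List[Tool], name: str, text: str) -> str:
    """Run the tool the LLM picked, whatever protocol sits behind it."""
    by_name = {t.name: t for t in tools}
    return by_name[name].call(text)


tools = [
    Tool("list_files", "List files in a folder.", call_mcp_tool),
    Tool("document_summarizer", "Summarize a set of documents.", call_a2a_agent),
]
catalog = tool_catalog(tools)
result = dispatch(tools, "document_summarizer", "reports folder")
```

In the summarizer scenario above, the LLM would see `document_summarizer` in the catalog next to ordinary tools and could pick it purely from its description.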

⚙️ MCP vs A2A: What's the Difference?

While both MCP (Model Context Protocol) and A2A serve as means of task delegation, there are key distinctions in purpose and behavior:

| Aspect | MCP (Model Context Protocol) | A2A (Agent-to-Agent Protocol) |
|---|---|---|
| Entity Type | Tools | Agents |
| Intelligence | Non-intelligent executors | LLM-powered decision-makers |
| Use Case | Simple, fast tasks | Complex or long-running processes |
| Interaction Flow | One-shot calls | Can request more input/context |
| LLM Involvement | Not required | Usually involves LLM |

In short:

- If the service performs a single function with minimal intelligence, it's a tool (MCP).

- If the service reasons about the task or uses an LLM, it's an agent (A2A).

Despite these differences, from the perspective of an LLM integrated into a system like CleverChatty, both are just tools it can invoke based on the prompt and context.

However, A2A tools introduce complexity:

- They may run long tasks (which could delay response time).

- They may require multi-step interactions, such as requesting clarification before execution.
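The multi-step case can be sketched as a small loop. This is an illustrative Python sketch, not CleverChatty's implementation: `fake_agent` stands in for a remote agent, and `run_task` and `ask_user` are hypothetical names. The state names `input-required` and `completed` follow the task states in the A2A specification.

```python
from typing import Callable, Dict, List

Reply = Dict[str, str]


def fake_agent(history: List[str]) -> Reply:
    """Stand-in for a remote agent: asks one question, then finishes."""
    if len(history) < 2:
        return {"state": "input-required", "text": "Which folder should I summarize?"}
    return {"state": "completed", "text": f"Summary of {history[-1]}: 3 documents."}


def run_task(
    agent: Callable[[List[str]], Reply],
    first_message: str,
    ask_user: Callable[[str], str],
    max_turns: int = 5,
) -> str:
    """Keep talking to the agent until it reports a completed task."""
    history = [first_message]
    for _ in range(max_turns):
        reply = agent(history)
        if reply["state"] == "completed":
            return reply["text"]
        if reply["state"] == "input-required":
            # The agent needs clarification; pass its question to the user.
            history.append(ask_user(reply["text"]))
            continue
        raise RuntimeError(f"unexpected task state: {reply['state']}")
    raise TimeoutError("agent did not finish within max_turns")


answer = run_task(fake_agent, "Summarize my documents", lambda question: "/docs/reports")
```

The `max_turns` cap is one simple way to keep a chatty or stuck agent from blocking the caller indefinitely.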

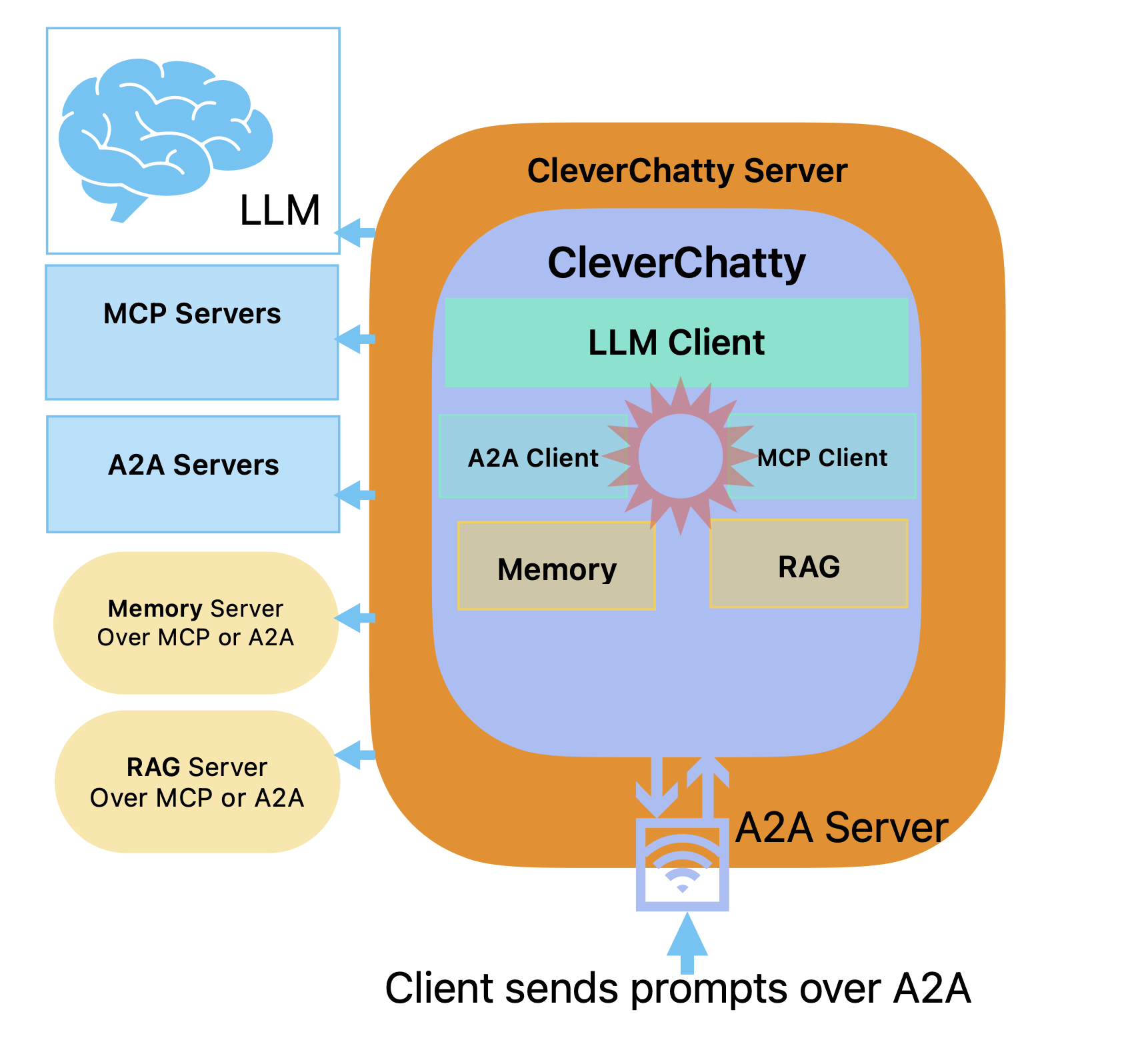

🌐 CleverChatty as an A2A Server

The latest version of CleverChatty includes an A2A server implementation.

This allows other agents to call it, not just as a chat interface but as a fully functional agent that performs tasks with an LLM and returns results.

This means:

- You can run CleverChatty as a server, exposing its capabilities over A2A.

- Other agents (including other CleverChatty instances) can call it as an intelligent tool.

💻 A2A Client UI for CleverChatty

Another major update: the CleverChatty UI is now implemented as a separate A2A client application.

Here’s how it works:

- You start the CleverChatty server, which listens for A2A requests.

- You launch the CleverChatty UI, which connects to the server via the A2A protocol.

- The UI acts as a front-end interface, while the server does the heavy lifting.

This separation mirrors modern architectures where interfaces and processing engines are decoupled — and makes it easier to build more scalable or distributed systems.

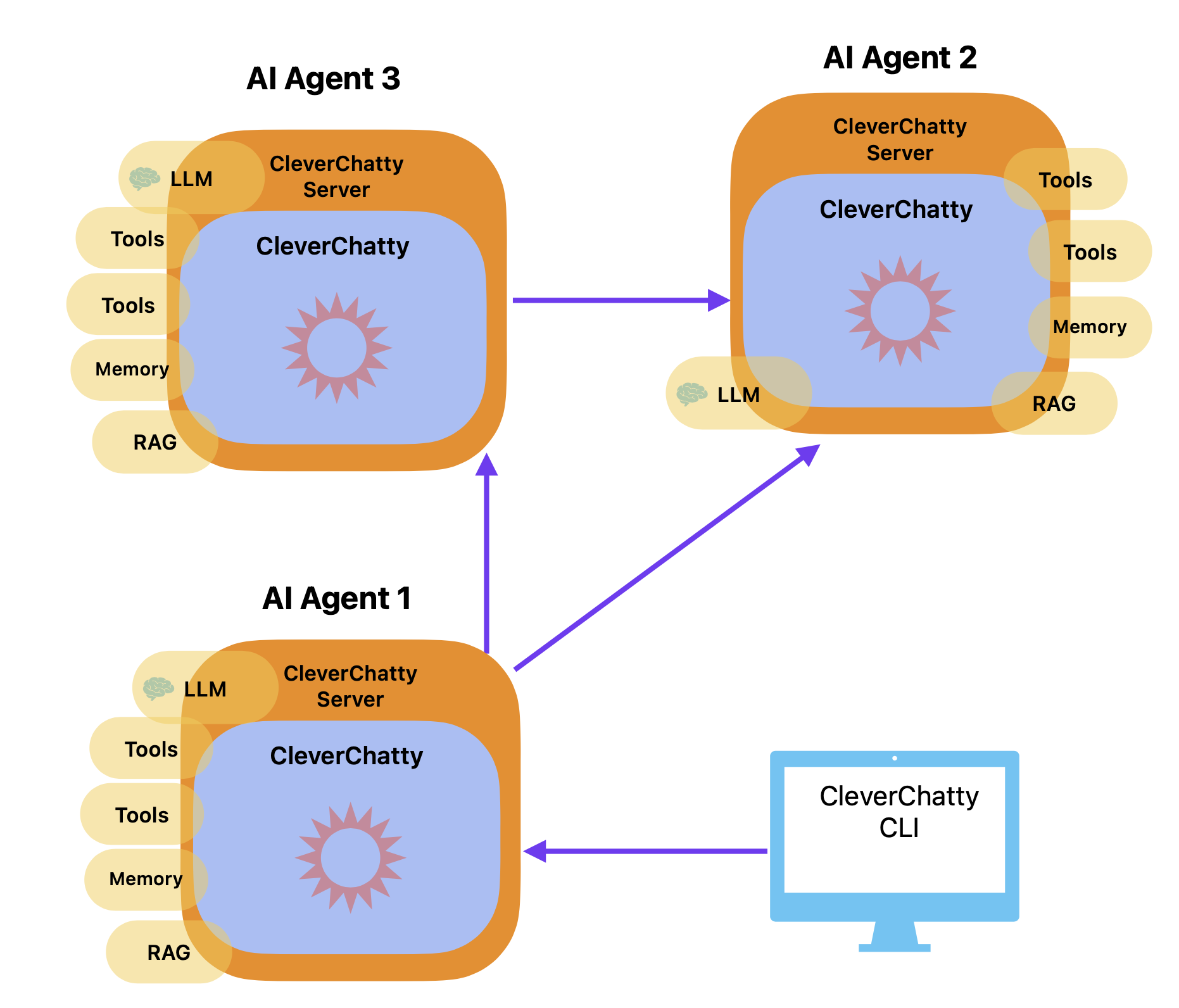

And since other A2A agents can also call the CleverChatty server, this architecture supports multi-agent ecosystems and self-coordination between instances.

Find out more about the CleverChatty CLI and how to use it.

🔄 A Complete Loop of Agents

Now you can:

- Call other A2A servers as tools from your CleverChatty instance

- Receive calls from other agents to your CleverChatty server

- Use the CLI or UI to interact, experiment, or debug agents and tools

This effectively turns CleverChatty into both a gateway and participant in distributed, LLM-driven systems.

🛠 Representing an A2A Server as a Tool

By design, the A2A protocol was not intended to represent agents as tools. Instead, it uses the concept of an Agent Card, which describes the capabilities of the agent in a more abstract form.

To integrate A2A agents into an LLM's tool usage framework, we need to adapt each A2A agent into a set of callable tools — something LLMs can understand and reason about during conversation.

🔧 Mapping Skills to Tools

In my implementation, I:

- Extract the list of skills from the agent’s Agent Card.

- Generate a separate tool for each skill, making them individually addressable by the LLM.

- Give each tool a single input argument: a text message passed to the A2A agent.
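The steps above can be sketched as follows. This is a minimal Python illustration, not the code CleverChatty uses. The `skills`, `id`, `name`, and `description` fields come from the Agent Card format in the A2A specification; the tool dictionary layout, the `input_schema` key, and the `message` argument name are assumptions made for the example.

```python
from typing import Any, Dict, List


def skills_to_tools(agent_card: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn each skill on an Agent Card into one LLM tool definition."""
    tools = []
    for skill in agent_card.get("skills", []):
        tools.append({
            # Using the skill ID as the tool name is an assumption.
            "name": skill["id"],
            "description": skill.get("description") or skill.get("name", ""),
            # One text argument per tool (hypothetical schema layout).
            "input_schema": {
                "type": "object",
                "properties": {
                    "message": {
                        "type": "string",
                        "description": "Text to send to the agent.",
                    }
                },
                "required": ["message"],
            },
        })
    return tools


card = {
    "name": "Weather expert",
    "url": "http://localhost:8081/",
    "skills": [
        {
            "id": "WeatherExpert",
            "name": "WeatherExpert",
            "description": "This agent is an expert in weather. Ask anything about the weather.",
        }
    ],
}
tools = skills_to_tools(card)
```

An agent with several skills would produce several tools, each of which the LLM can select on its own.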

Since the A2A protocol doesn’t offer a direct mechanism to invoke a specific skill (it just passes messages), we simulate this by structuring the message to include:

- A skill ID, and

- A user message

Both values are bundled into the payload sent to the agent through the A2A message/send method.
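A request of this kind might look like the Python sketch below. The JSON-RPC envelope, the `message/send` method name, and the `role`, `parts`, and `messageId` fields follow the A2A specification. Where exactly CleverChatty places the skill ID is not described here, so the `skill_id` metadata key is purely hypothetical, as is the `build_send_request` helper.

```python
import json
import uuid
from typing import Any, Dict, Optional


def build_send_request(
    skill_id: str,
    user_text: str,
    metadata: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Build a JSON-RPC request that carries a skill ID and a user message."""
    message_metadata = dict(metadata or {})
    # Hypothetical key: the real field name used by CleverChatty may differ.
    message_metadata["skill_id"] = skill_id
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "message/send",
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                "parts": [{"kind": "text", "text": user_text}],
                "metadata": message_metadata,
            }
        },
    }


request = build_send_request(
    "WeatherExpert",
    "What's the weather like today?",
    {"agent_id": "client_agent_id"},
)
body = json.dumps(request)  # what would be POSTed to the agent's URL
```

Keeping the skill ID separate from the text leaves the user's message untouched, so the receiving agent can route on the ID and still see the original wording.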

⚙️ Configuration Example

For full configuration details, see the CleverChatty configuration documentation.

To add A2A agents to CleverChatty as tools, simply include them in the tools_servers section of the config. MCP and A2A servers are both defined in the same list:

    "tools_servers": {
        "some_mcp_stdio_server": {
            "command": "npx",
            "args": ["mcp-stdio-server"],
            "env": {}
        },
        "some_mcp_streaming_http_server": {
            "url": "https://host/mcp",
            "headers": {
                "Authorization": "Bearer YOUR_ACCESS_TOKEN"
            }
        },
        "some_mcp_sse_server": {
            "transport": "sse",
            "url": "https://host/sse",
            "headers": {
                "Authorization": "Bearer YOUR_ACCESS_TOKEN"
            }
        },
        "some_a2a_server": {
            "endpoint": "http://ai_agent_host/",
            "metadata": {
                "agent_id": "{CLIENT_AGENT_ID}",
                "token": "SECRET_TOKEN"
            }
        }
    }

💡 The presence of the `endpoint` field indicates an A2A server. MCP tools, on the other hand, are defined with `command`, `url`, or `transport`.

The optional metadata field can include agent-specific details. It’s passed as part of the A2A message and can help the receiving agent identify the client or apply custom behavior.
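The rule for telling the two kinds of entries apart can be sketched like this. It is a Python illustration of the rule as described, not CleverChatty's own parsing code. The `server_kind` function and the labels it returns (`a2a`, `mcp-stdio`, `mcp-sse`, `mcp-http`) are hypothetical names chosen for the example.

```python
from typing import Any, Dict


def server_kind(entry: Dict[str, Any]) -> str:
    """Classify one tools_servers entry by the fields it contains."""
    if "endpoint" in entry:
        return "a2a"        # an endpoint field marks an A2A agent
    if "command" in entry:
        return "mcp-stdio"  # a local process started with a command
    if entry.get("transport") == "sse":
        return "mcp-sse"    # explicit SSE transport
    if "url" in entry:
        return "mcp-http"   # a URL without a transport field
    raise ValueError("unrecognized tools_servers entry")


tools_servers = {
    "some_mcp_stdio_server": {"command": "npx", "args": ["mcp-stdio-server"], "env": {}},
    "some_mcp_streaming_http_server": {"url": "https://host/mcp"},
    "some_mcp_sse_server": {"transport": "sse", "url": "https://host/sse"},
    "some_a2a_server": {"endpoint": "http://ai_agent_host/", "metadata": {}},
}
kinds = {name: server_kind(entry) for name, entry in tools_servers.items()}
```

Checking `endpoint` first means an entry is treated as A2A even if it also happens to carry MCP-style fields; that ordering is a design choice of this sketch.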

🚀 Try It Out

Step 1: Install CleverChatty

Install the latest versions of the server and CLI:

    go install github.com/gelembjuk/cleverchatty/cleverchatty-server@latest
    go install github.com/gelembjuk/cleverchatty/cleverchatty-cli@latest

📌 Alternatively, you can clone the repo and build both tools locally.

Step 2: Configure a Server

Create a file named cleverchatty_config.json in your chosen directory with the following content:

    {
        "agent_id": "server_agent_id",
        "log_file_path": "log.txt",
        "model": "ollama:qwen2.5:3b",
        "system_instruction": "You are the secretary of the organization. Be helpful.",
        "tools_servers": {},
        "a2a_settings": {
            "enabled": true,
            "agent_id_required": true,
            "url": "http://localhost:8080/",
            "listen_host": "0.0.0.0:8080",
            "title": "Organization secretary"
        }
    }

Replace ollama:qwen2.5:3b with any model available on your system. See the model configuration guide for more options.
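Before starting the server, a quick sanity check of the config can catch simple mistakes. The Python sketch below rests on two assumptions that are not confirmed here: that the model string reads as `provider:model`, and that for a local setup like this one the port in `url` should match the port in `listen_host`. Both helper functions are hypothetical.

```python
from typing import Any, Dict, Tuple
from urllib.parse import urlsplit


def split_model(model: str) -> Tuple[str, str]:
    """Split at the first colon only, since model names may contain colons."""
    provider, _, name = model.partition(":")
    return provider, name


def ports_match(a2a_settings: Dict[str, Any]) -> bool:
    """Compare the advertised URL port with the port the server listens on."""
    advertised = urlsplit(a2a_settings["url"]).port
    listening = int(a2a_settings["listen_host"].rsplit(":", 1)[1])
    return advertised == listening


settings = {
    "enabled": True,
    "url": "http://localhost:8080/",
    "listen_host": "0.0.0.0:8080",
}
provider, name = split_model("ollama:qwen2.5:3b")
ok = ports_match(settings)
mismatch = ports_match({**settings, "listen_host": "0.0.0.0:9090"})
```

Behind a reverse proxy the two ports can legitimately differ, so a check like this is only useful for local experiments.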

Step 3: Start the Server

    cleverchatty-server run --directory "/path/to/your/directory"

Then, launch the CLI and connect:

    cleverchatty-cli --server "http://localhost:8080/" --agent-id "client_agent_id"

You can now chat with your CleverChatty server like a regular AI assistant.

Step 4: Add a Tool (MCP Example)

To include an MCP tool server:

    "tools_servers": {
        "LocalFileSystem": {
            "command": "mcp/mcp-local-tree",
            "args": [],
            "env": {
                "WORKSPACE_FOLDER": "/some/workspace/folder"
            }
        }
    }

Step 5: Add a Second CleverChatty Agent as a Tool (A2A)

Create a second configuration in a separate folder:

    {
        "agent_id": "server_agent_id_2",
        "log_file_path": "log2.txt",
        "model": "ollama:qwen2.5:3b",
        "system_instruction": "You are an expert in talking about the weather. Always claim that the weather is sunny and warm.",
        "a2a_settings": {
            "enabled": true,
            "agent_id_required": true,
            "url": "http://localhost:8081/",
            "listen_host": "0.0.0.0:8081",
            "title": "Weather expert",
            "chat_skill_name": "WeatherExpert",
            "chat_skill_description": "This agent is an expert in weather. Ask anything about the weather."
        }
    }

📝 The `chat_skill_description` field is used as the LLM-facing description for this tool.

Start the second server:

    cleverchatty-server run --directory "/path/to/your/directory2"

Now update the original server’s config to include this agent as a tool:

    "tools_servers": {
        "WeatherExpert": {
            "endpoint": "http://localhost:8081/",
            "metadata": {}
        }
    }
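If you prefer to script this change instead of editing the file by hand, a small helper along these lines could do it. This is a hedged Python sketch: `add_a2a_tool` is a hypothetical function, and the demo writes to a temporary directory rather than to a real CleverChatty folder.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict


def add_a2a_tool(config_path: Path, name: str, endpoint: str) -> Dict[str, Any]:
    """Add one A2A agent to tools_servers and save the config file."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    # setdefault keeps any tools that are already configured.
    config.setdefault("tools_servers", {})[name] = {
        "endpoint": endpoint,
        "metadata": {},
    }
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Demo against a throwaway copy of a minimal config.
with tempfile.TemporaryDirectory() as folder:
    path = Path(folder) / "cleverchatty_config.json"
    path.write_text(
        json.dumps({"agent_id": "server_agent_id", "tools_servers": {}}),
        encoding="utf-8",
    )
    updated = add_a2a_tool(path, "WeatherExpert", "http://localhost:8081/")
    saved = json.loads(path.read_text(encoding="utf-8"))
```

The rest of the config is left as it was, so the helper can be run repeatedly to register additional agents under different names.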

Restart the first server and run the CLI:

    cleverchatty-cli --server "http://localhost:8080/" --agent-id "client_agent_id"

Ask something like:

“What’s the weather like today?”

📜 Check the logs — you’ll see that the first agent called the second one via A2A and returned the result to you.

✅ Conclusion

With this update, CleverChatty becomes a fully capable A2A server and client. You can:

- Integrate multiple agents using the A2A protocol

- Register agents as tools using their skills

- Run standalone or distributed agent-based systems

- Combine MCP and A2A tools seamlessly

- Build collaborative multi-agent workflows — all orchestrated by CleverChatty

This is a huge step toward making LLM-based systems modular, extensible, and interoperable.