I continue to explore one of my favorite topics: how to make AI agents more independent. This blog is my way of organizing ideas and gradually shaping a clear vision of what this might look like in practice.

The Dream That Started It All

When large language models (LLMs) and AI chat tools first started delivering truly impressive results, it felt like we were entering a new era of automation. Back then, I believed it wouldn’t be long before we could hand off any intellectual task to an AI—from a single prompt.

I imagined saying something like:

"Translate this 500-page novel from French to Ukrainian, preserving its original literary style."

And the AI would just do it.

But that dream quickly ran into reality. The context window was a major limitation, and most chat-based AIs had no memory of what they'd done before. Sure, you could translate one page. But across an entire novel? The tone would shift, the style would break, and continuity would be lost.

Early Attempts, Disappointing Results

As a developer, my instinct was to automate: “If it can do one page, I’ll just loop through them all using the API.”

Technically, this worked—but practically, it was a mess. Each page sounded like it was written by a different person. There was no consistent voice, and the narrative flow broke down.

Even in 2025, we’re still not quite there. You can translate a novel page by page. But maintaining a coherent, stylistically faithful voice across hundreds of pages still requires more than just looping prompts.

What Might Help?

Maybe Cursor, or a tool like it, will evolve into the solution: a long-form writing environment powered by AI, where you can define the target style and let it handle things step by step. But even that still assumes you need to manually steer the process.

My ideal AI assistant wouldn’t need that kind of micromanagement.

Why should I have to tell it how to preserve the style? Shouldn’t it be smart enough to figure that out on its own?

The Real Question

So here’s the core idea I keep coming back to:

How can we build AI assistants that can complete long, complex tasks without constant human guidance?

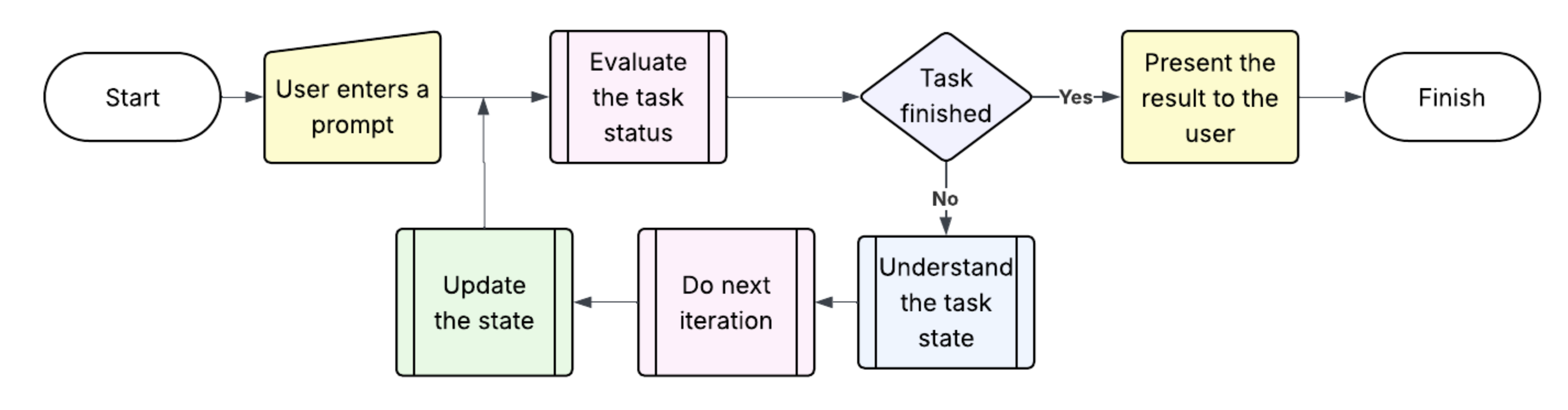

It’s clear some kind of loop is involved—but not just a simple loop. It needs to plan, adapt, verify, and respond to failure.

New Possibilities in 2025

I’m revisiting this idea because in just the past six months, new technologies have emerged that make this kind of autonomy more feasible.

There’s growing interest in Model Context Protocol (MCP) servers, agent-to-agent (A2A) protocols, retrieval-augmented generation (RAG), and memory tools. Some see MCP as just “tool support,” but the broader picture shows real momentum toward AGI-like systems.

Let’s call these building blocks what they are: The Components of AGI.

And while the LLMs themselves are still mostly in the hands of the "rich guys," everything else—MCP, RAG, memory, and agent frameworks—is freely available.

So why not try building a "mini-AGI"—a system capable of solving complex problems over time without needing you to babysit it?

A Loop with a Brain

At a high level, the basic workflow looks simple:

- Receive a task

- Plan how to do it

- Execute the plan, step by step

- Verify each step

- Repeat or fix if something fails

Of course, apart from the first and last steps (receiving a task and looping back), implementing this is still a serious challenge. But this is exactly what many developers and researchers are tackling right now.
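Here is a minimal Python sketch that makes the loop concrete. It assumes the planner, executor, and verifier are passed in as plain functions. In a real agent, each would probably wrap an LLM or tool call. `run_task`, `StepResult`, and `max_attempts` are illustrative names and don't come from any framework. The sketch covers retrying and exiting gracefully, but not adapting the plan.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    step: str
    output: str
    ok: bool


def run_task(
    task: str,
    plan: Callable[[str], list[str]],
    execute: Callable[[str], str],
    verify: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> list[StepResult]:
    """Plan a task, then execute and verify each step with a bounded number of retries."""
    results: list[StepResult] = []
    for step in plan(task):                 # plan how to do it
        output = ""
        for _ in range(max_attempts):
            output = execute(step)          # execute one step
            if verify(step, output):        # verify before continuing
                results.append(StepResult(step, output, ok=True))
                break
        else:
            # Retries exhausted: record the failure and stop gracefully.
            results.append(StepResult(step, output, ok=False))
            break
    return results
```

For example, `run_task("a, b", lambda t: t.split(", "), str.upper, lambda s, o: o == s.upper())` returns two successful results with outputs `"A"` and `"B"`.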

How Do Humans Solve Long Tasks?

If we want AI to handle long tasks, it makes sense to first look at how we do it.

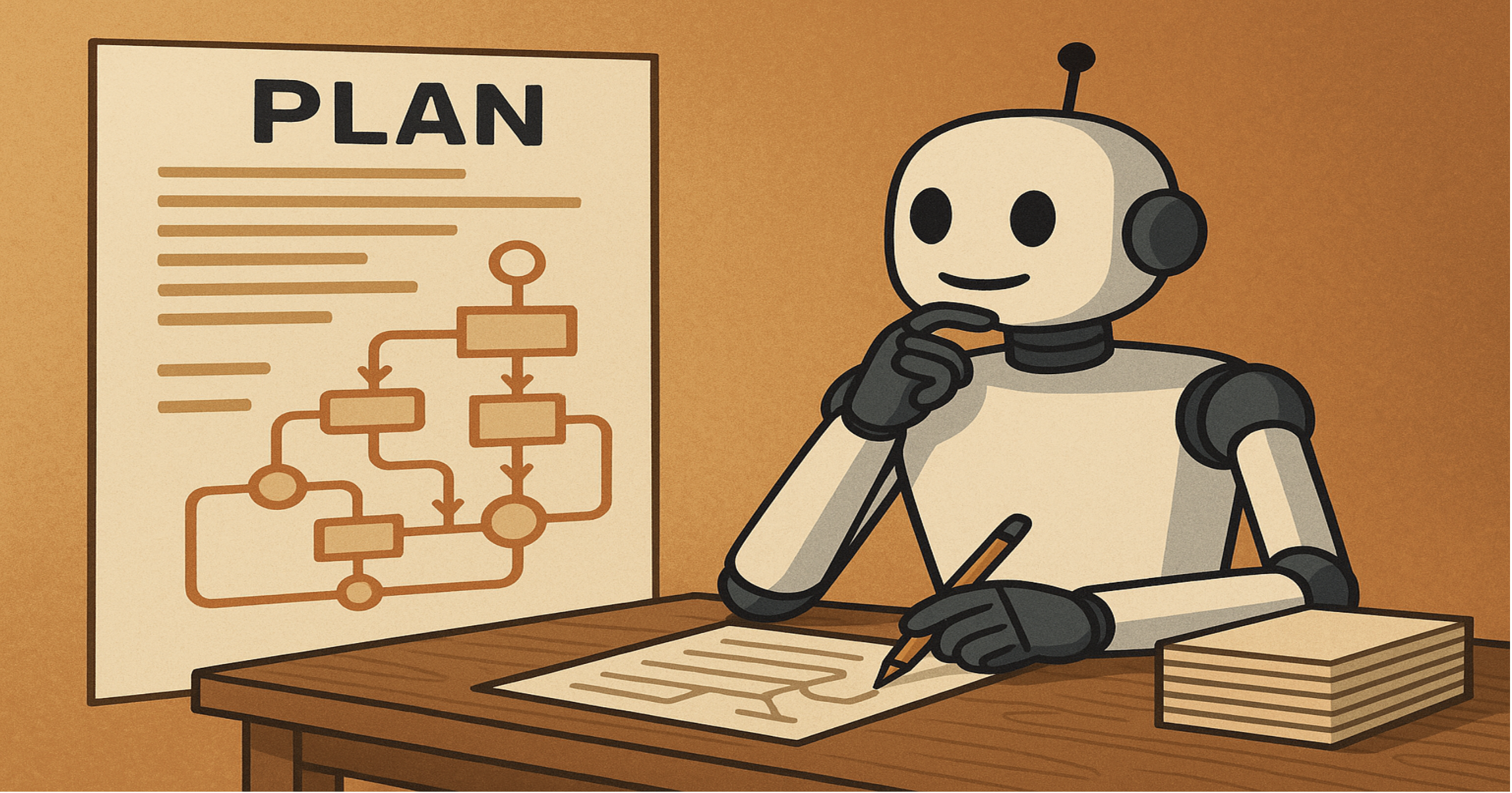

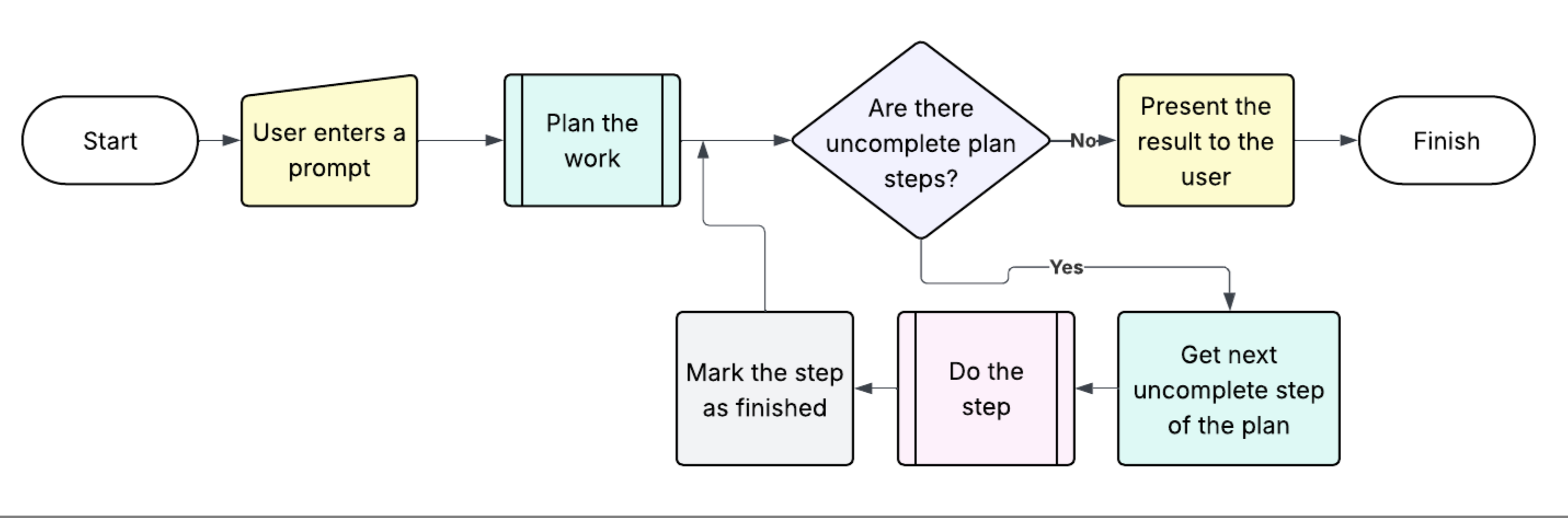

The answer is planning. Humans break down complex goals into smaller, manageable steps. We set priorities, consider dependencies, and build a mental map before we even start working.

Plan the work. Then execute the plan.

Let’s imagine a simplified diagram:

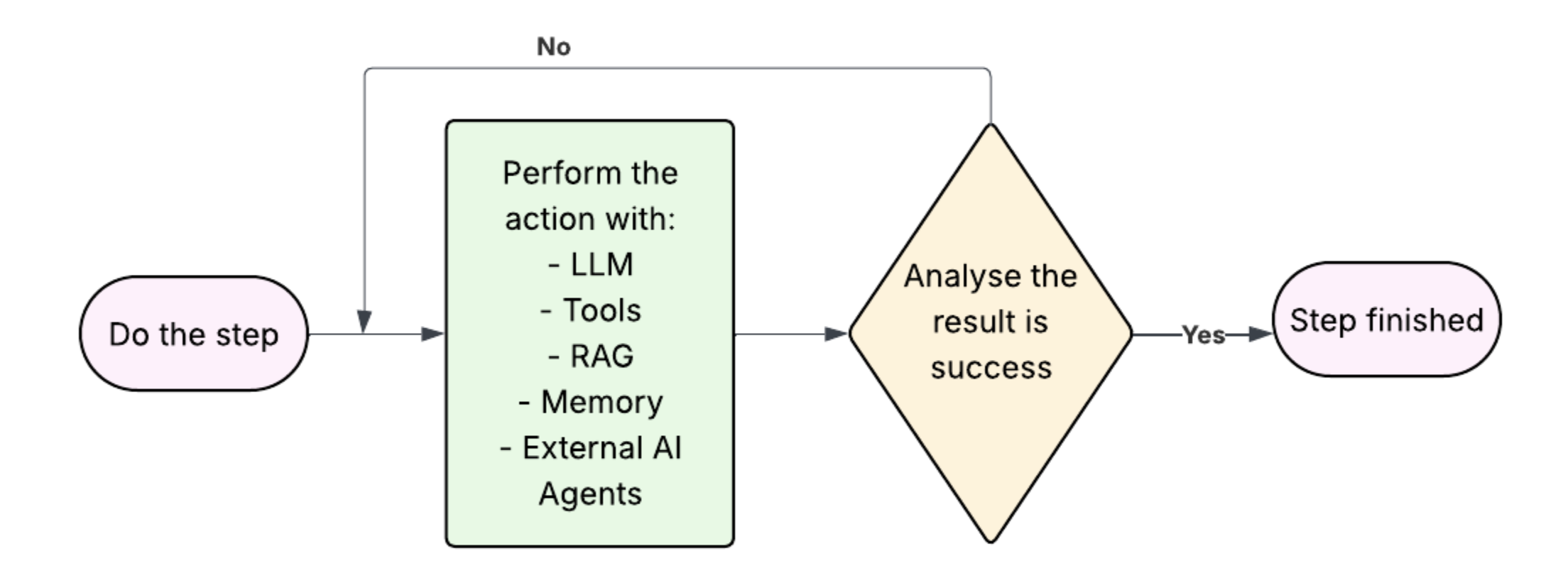

Each step must be:

- Small enough to fit into a single prompt and its output

- Supported by tools, RAG, or helper agents

- Verified before continuing

If verification fails, the system must either retry, adapt, or exit gracefully.

Key Questions to Design For

- What happens when a step fails? Do we retry from scratch? What needs resetting?

- How do we avoid infinite loops? The system needs failure thresholds and fallback strategies.

- Do we lose anything on failure? Can we preserve partial outputs, logs, or memory checkpoints?

- How do we confirm correctness? Should we bring in a second LLM or validator agent? Can different tools review each other?
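One way to answer the questions about infinite loops and lost work is to persist every verified step and cap the total number of failures. The Python sketch below makes two assumptions. First, a plain JSON file serves as the checkpoint store. Second, a separate `validate` function stands in for a second LLM or validator agent. `run_with_checkpoints` and `max_failures` are hypothetical names.

```python
import json
from pathlib import Path
from typing import Callable


def run_with_checkpoints(
    steps: list[str],
    execute: Callable[[str], str],
    validate: Callable[[str, str], bool],
    checkpoint: Path,
    max_failures: int = 3,
) -> dict[str, str]:
    """Run steps in order; validated outputs are saved so a failure loses nothing."""
    # Resume: steps completed in an earlier run are loaded, not redone.
    done: dict[str, str] = (
        json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    )
    failures = 0
    for step in steps:
        if step in done:
            continue
        while True:
            output = execute(step)
            if validate(step, output):      # e.g. a second model reviewing the output
                done[step] = output
                checkpoint.write_text(json.dumps(done, indent=2))
                break
            failures += 1
            if failures >= max_failures:    # threshold prevents an infinite retry loop
                return done                 # partial progress is already on disk
    return done
```

The caller can check `len(done) == len(steps)` to tell a finished run from one that stopped at the failure threshold.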

Planning: The Hardest Step

In our flowchart, “Plan the work” is just one box. In reality, though, it hides a lot of complexity.

It involves:

- Analyzing the task

- Breaking it down into self-contained subtasks

- Defining dependencies and tool usage

- Estimating what can fit in a context window

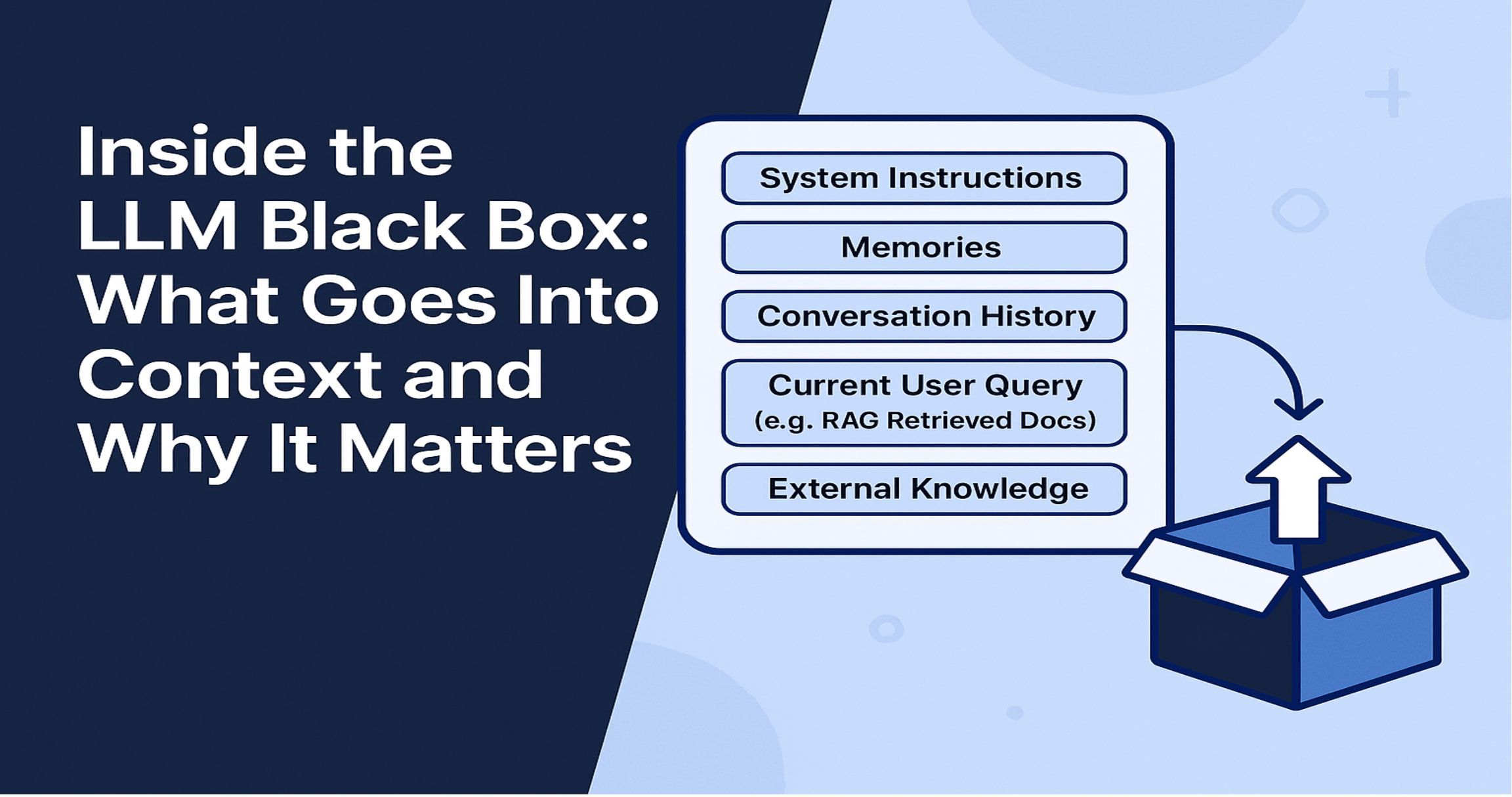
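Dependencies are easiest to reason about as a graph. This sketch uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to turn a hypothetical translation plan into batches. Every subtask in a batch has all of its dependencies satisfied, so the whole batch could run in parallel. The subtask names are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each subtask maps to the set of subtasks it depends on.
PLAN: dict[str, set[str]] = {
    "extract_style_guide": set(),
    "build_glossary": set(),
    "translate_ch1": {"extract_style_guide", "build_glossary"},
    "translate_ch2": {"extract_style_guide", "build_glossary"},
    "final_consistency_review": {"translate_ch1", "translate_ch2"},
}


def execution_batches(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into batches whose dependencies are already complete."""
    ts = TopologicalSorter(deps)
    ts.prepare()  # raises graphlib.CycleError if the plan has circular dependencies
    batches: list[list[str]] = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        batches.append(ready)
        ts.done(*ready)  # mark the batch finished so its dependents become ready
    return batches


if __name__ == "__main__":
    print(execution_batches(PLAN))
    # → [['build_glossary', 'extract_style_guide'], ['translate_ch1', 'translate_ch2'], ['final_consistency_review']]
```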

Context Window Is the Main Constraint

When evaluating subtasks, context length matters more than execution time. If the required input, instructions, and tool definitions can’t all fit into the context window, the step will fail.

Planners must account for:

- RAG-pulled documents

- Tool call syntax

- System instructions

- Conversation history (for coherence)
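A planner can run a rough budget check before dispatching a step. The sketch assumes about four characters per token. That is only a crude rule of thumb for English text; a real implementation should count tokens with the target model's own tokenizer. `StepContext`, `fits`, and `reserved_for_output` are illustrative names.

```python
from dataclasses import dataclass

# Crude heuristic (assumption): roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


@dataclass
class StepContext:
    system_instructions: str
    tool_definitions: str        # tool call syntax / schemas
    rag_documents: list[str]     # RAG-pulled documents
    history: list[str]           # conversation history kept for coherence

    def total_tokens(self) -> int:
        parts = [self.system_instructions, self.tool_definitions,
                 *self.rag_documents, *self.history]
        return sum(estimate_tokens(p) for p in parts)


def fits(ctx: StepContext, context_window: int, reserved_for_output: int) -> bool:
    """True if the prompt plus room for the model's answer fits in the window."""
    return ctx.total_tokens() + reserved_for_output <= context_window
```

If `fits` returns `False`, the planner has to split the step further or trim what it pulls in, for example by retrieving fewer documents.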

Planning: How It Can Be Done

There are many ways to create a plan. Some methods originally designed for humans can be adapted for AI; others are specific to AI.

For me, the most relevant method for a "beginner" is Hierarchical Task Decomposition.

Hierarchical Task Decomposition (HTD), closely related to Hierarchical Task Network (HTN) planning, is a method used in AI, robotics, and cognitive science. It breaks complex tasks down recursively into simpler, more manageable subtasks until it reaches primitive actions that can be executed directly.

It is a core technique in automated planning, agent architectures, and long-context LLM workflows.

Key Concepts of HTD

- Hierarchy
  - Tasks are organized in a tree-like structure:
    - High-level goals at the root
    - Subgoals as branches
    - Executable atomic actions as leaves
  - Each node is either a compound task or a primitive task.

- Decomposition
  - High-level tasks are decomposed into sequences or sets of subtasks using methods (rules or templates).
  - Decomposition can be:
    - Sequential
    - Parallel
    - Conditional

- Reusability
  - Methods can be reused across different plans or contexts.
  - Encourages modularity and abstraction.

- Planning with Methods
  - Each compound task may have multiple possible decompositions (methods), depending on context.
  - The planner chooses the one that fits current conditions and goals.

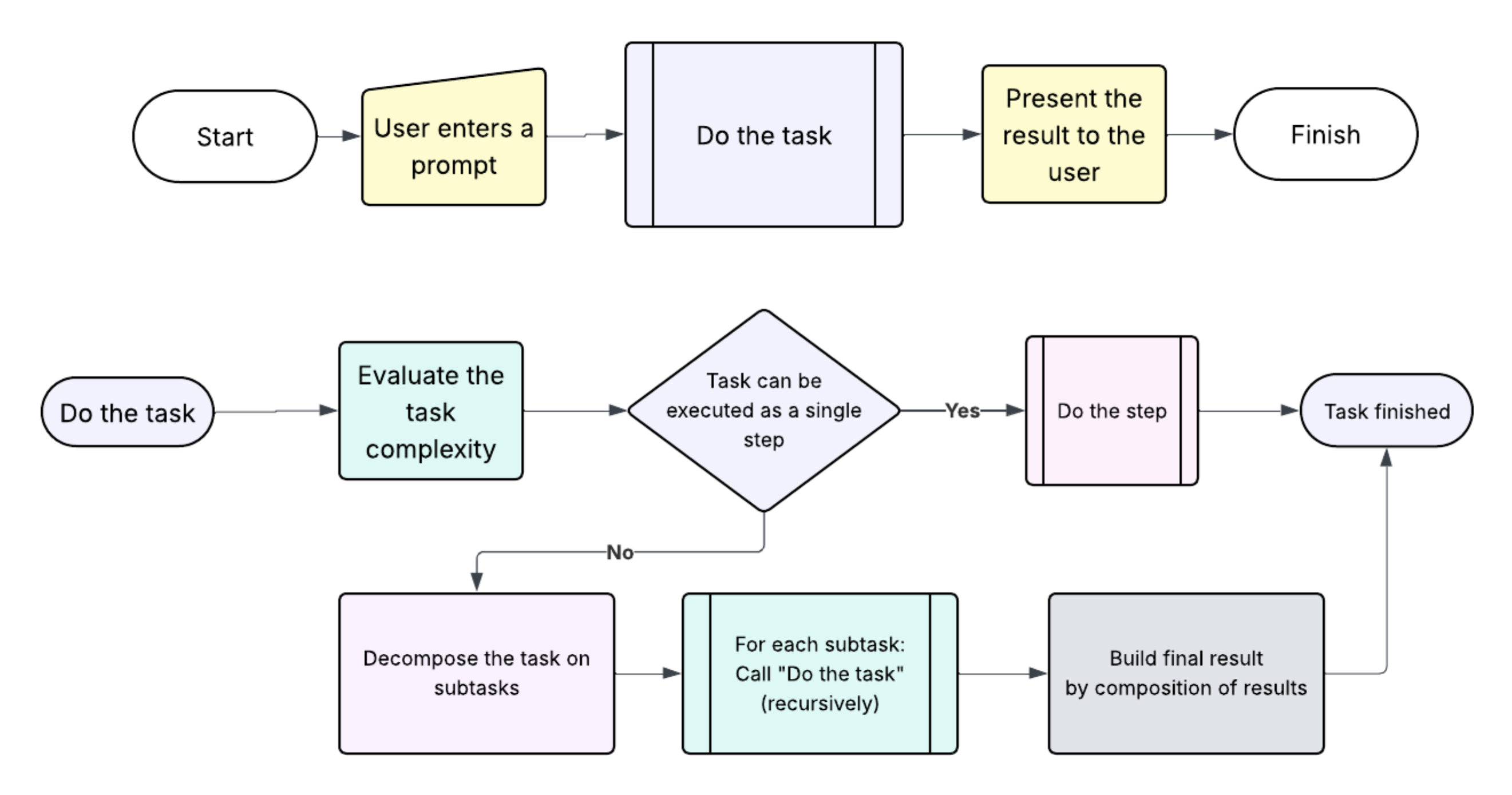
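Here is a toy version of HTN-style planning in Python, based on the novel-translation example. Each compound task has one or more methods, where a method is a precondition plus an ordered list of subtasks. The planner picks the first method whose precondition holds and expands recursively until only primitive actions remain. The task and method names are hypothetical, and only sequential decomposition is shown. Unlike a full HTN planner, this version never updates the state as actions are applied.

```python
from typing import Callable

State = dict[str, bool]
# A method: (name, precondition on the current state, ordered subtasks).
Method = tuple[str, Callable[[State], bool], list[str]]

# Primitive tasks: leaves that an agent could execute directly.
PRIMITIVE = {
    "load_style_guide", "extract_style_guide",
    "translate_chapter", "review_chapter", "save_chapter",
}

# Compound tasks and their alternative decompositions, tried in order.
METHODS: dict[str, list[Method]] = {
    "translate_novel": [
        ("reuse_style", lambda s: s.get("style_guide_exists", False),
         ["load_style_guide", "process_chapter"]),
        ("build_style", lambda s: True,
         ["extract_style_guide", "process_chapter"]),
    ],
    # One chapter shown; a real network would expand this per chapter.
    "process_chapter": [
        ("translate_then_review", lambda s: True,
         ["translate_chapter", "review_chapter", "save_chapter"]),
    ],
}


def decompose(task: str, state: State) -> list[str]:
    """Expand a task recursively into an ordered list of primitive actions."""
    if task in PRIMITIVE:
        return [task]
    for _name, applies, subtasks in METHODS.get(task, []):
        if applies(state):  # choose the method that fits current conditions
            plan: list[str] = []
            for sub in subtasks:
                plan.extend(decompose(sub, state))
            return plan
    raise ValueError(f"no applicable method for {task!r}")
```

With an empty state, `decompose("translate_novel", {})` chooses `build_style` and starts with `extract_style_guide`. If the state says a style guide already exists, it starts with `load_style_guide` instead.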

An Alternative: Recursive Decomposition

There's another way to handle complex tasks: don’t plan everything upfront.

Instead, follow a recursive approach:

- AI receives a task

- It checks: “Can I do this in one step?”

- If yes, it completes the task

- If no, it calls a Task Decomposer agent

- The task is broken into subtasks

- Each subtask is solved the same way, recursively

- Subtask results are composed into the final result

This creates a tree of subtasks, with each node representing a decision, an attempt, or a result.

This “decompose-as-you-go” model is more flexible than fixed planning and could be better suited for dynamic, unpredictable problems.
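The recursive variant fits in a few lines of Python. In this sketch, four callables stand in for LLM-backed agents: the one-step check, the executor, the decomposition agent, and the composer. Those callables and `max_depth` are assumptions for illustration. The depth cap is one possible guard against endless splitting: once the limit is reached, the task is simply attempted directly.

```python
from typing import Callable


def solve(
    task: str,
    can_do_in_one_step: Callable[[str], bool],
    do: Callable[[str], str],
    decompose: Callable[[str], list[str]],
    compose: Callable[[str, list[str]], str],
    depth: int = 0,
    max_depth: int = 5,
) -> str:
    """Decompose-as-you-go: split a task only when it is too big to do directly."""
    if depth >= max_depth or can_do_in_one_step(task):
        return do(task)  # leaf of the tree (forced once the depth limit is hit)
    subtasks = decompose(task)  # e.g. ask a decomposition agent for subtasks
    results = [
        solve(sub, can_do_in_one_step, do, decompose, compose, depth + 1, max_depth)
        for sub in subtasks
    ]
    return compose(task, results)  # combine child results into this node's result


if __name__ == "__main__":
    # Toy stand-ins: a "task" is a string of words; two words fit in one step.
    def halves(task: str) -> list[str]:
        words = task.split()
        mid = len(words) // 2
        return [" ".join(words[:mid]), " ".join(words[mid:])]

    print(solve("a b c d e", lambda t: len(t.split()) <= 2, str.upper,
                halves, lambda _t, parts: " ".join(parts)))  # → A B C D E
```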

Choosing a Method

I haven’t yet settled on the best method for decomposing tasks—but ideally, I’d like to use a well-known, formalized approach that any capable LLM can recognize and apply.

The idea is simple: instead of crafting long, detailed prompts, we give the assistant a short system instruction like:

“Use the HTD method to decompose the task into subtasks.”

And the LLM knows what to do.

This is one of the core principles behind the project: the AI assistant should require minimal instructions. If we rely on shared, standardized methods, we can keep prompts concise while still enabling complex, structured behavior.

The Role of Tools (via MCP)

Tools play a crucial role in building more autonomous AI agents. They are the bridge between the AI and the external world, allowing it to read and write files, execute scripts, interact with APIs, and more. Through MCP, tools become structured, callable components that extend the agent's capabilities beyond text generation.

In the context of long-running tasks, tools typically serve two main purposes:

- Task-Specific Tools: These are used to fulfill the actual goal of the task, for example saving a translated chapter to a file or invoking an external API. The specific tools required depend on the nature of the task and should be selected during the planning phase.

- Agent Support Tools: These are used to support the internal operation of the AI agent, such as saving its current state, storing intermediate steps, or retrieving plans from memory. This is usually a fixed, predefined set of tools that the assistant relies on for any long task, regardless of the domain.

By combining both types of tools, we enable agents not only to complete their assigned jobs but also to manage themselves effectively throughout the process.
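To illustrate the split, here is a small Python sketch of the two tool groups. It models only the idea, not MCP itself. In a real system, these functions would be exposed as tools through an MCP server. All tool names, the in-memory state store, and `toolset_for` are hypothetical.

```python
from pathlib import Path
from typing import Callable

Tool = Callable[..., str]

# Agent support tools: a fixed set available for every long task.
_memory: dict[str, str] = {}  # in-memory stand-in for a real state store


def save_state(key: str, value: str) -> str:
    _memory[key] = value
    return "saved"


def load_state(key: str) -> str:
    return _memory.get(key, "")


SUPPORT_TOOLS: dict[str, Tool] = {"save_state": save_state, "load_state": load_state}


# Task-specific tools: a catalog the planner picks from, depending on the task.
def save_chapter(filename: str, text: str) -> str:
    Path(filename).write_text(text, encoding="utf-8")
    return f"wrote {filename}"


TASK_TOOL_CATALOG: dict[str, Tool] = {"save_chapter": save_chapter}


def toolset_for(selected: list[str]) -> dict[str, Tool]:
    """Support tools are always included; task tools only if chosen during planning."""
    return {**SUPPORT_TOOLS, **{name: TASK_TOOL_CATALOG[name] for name in selected}}
```

The design choice here is that the planner never has to remember to request state management: it only chooses task tools, and the support set is always present.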

Final Thoughts

We’re closer than ever to building AI systems that can truly operate without micromanagement. The missing piece isn’t raw power—it’s orchestration. With the right structure, tools, and verification processes, we can unlock a new level of independence in AI agents.

The key is to stop thinking in terms of a single magic prompt—and instead, build systems that think for themselves.