In this post, I want to explore an idea I’ve been experimenting with: common memory for AI agents. I’ll explain what I mean by this term, how such memory can be implemented, and why I believe it's worth exploring.

What Is an Agent's “Common” Memory?

I’m not sure whether “common memory” is already a widely accepted term in the AI space, or even the most accurate label for the concept I have in mind — but I’ll use it for now until a better one emerges (or someone suggests one).

By common memory, I mean:

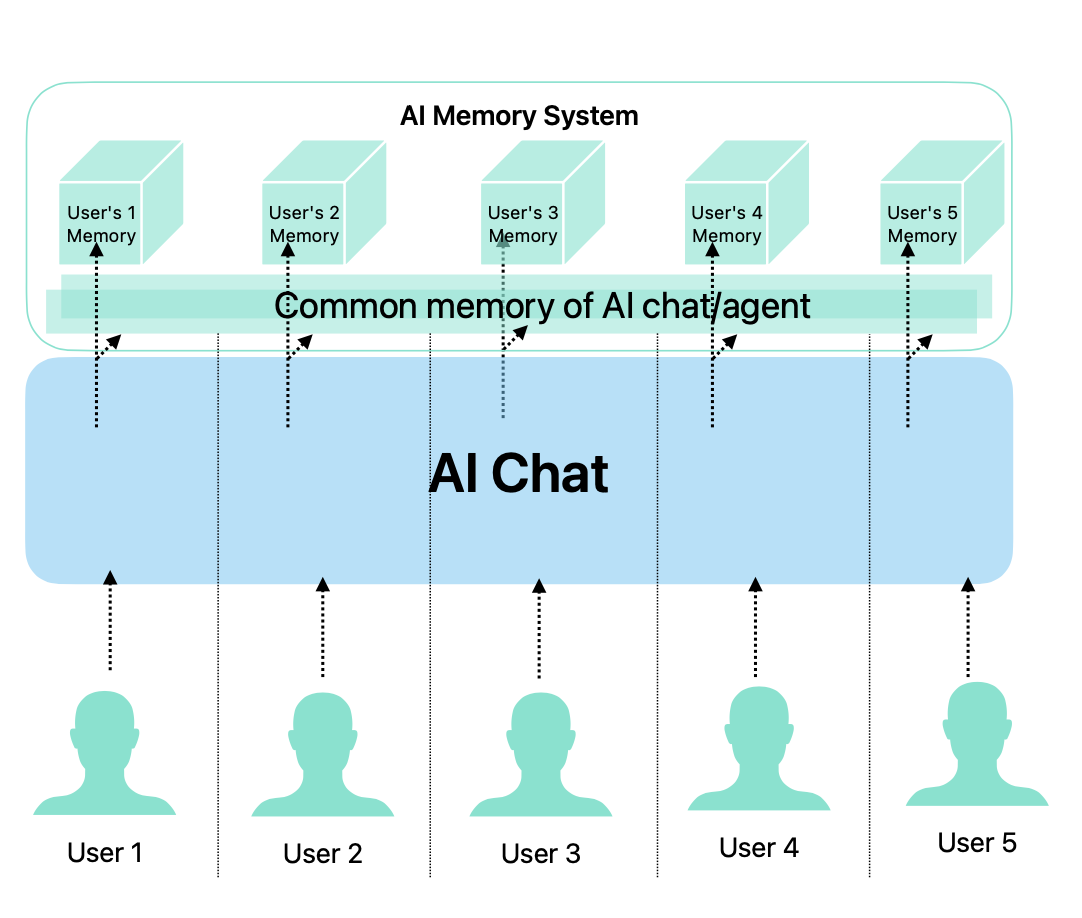

A shared repository of memories that a single AI agent forms from its interactions with multiple other agents, both humans and other AI agents. For example, an AI chat assistant can retain information learned from conversations with different users and selectively reference that information in future interactions.

This is distinct from related terms:

- Shared memory usually refers to memory shared across different AI systems or agents — not across users of the same assistant.

- Collaborative memory comes closer, but often implies more structured cooperation and might be too narrow for what I’m describing.

So for now, I’ll stick with common memory to describe a memory system that allows an AI assistant to retain and selectively reference information learned across interactions with multiple users.

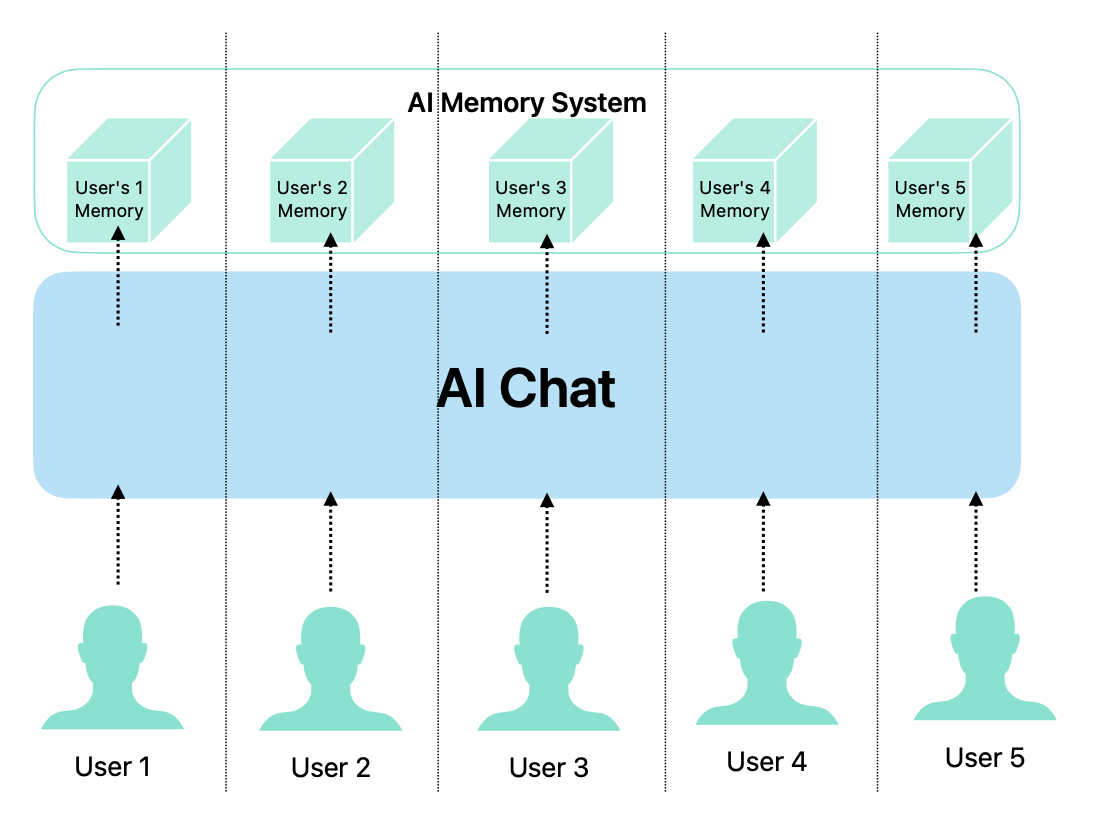

AI Chats Do Not Use Common Memory

When we interact with AI chats like ChatGPT, they typically do not retain information across different users. Each user's conversations are isolated, and the AI does not remember its interactions with other users. If you ask it about something another user discussed with it, it won’t have any context or memory of that conversation; only the current user's context and history are considered.

Common memory is probably not something every AI chat needs, especially publicly available ones. In a public service like ChatGPT, it would usually be inappropriate, for privacy reasons, for the AI to remember information from one user and share it with another.

But in the context of an organization, a family, or another closed group of users, common memory can be extremely useful: it lets the assistant draw on what it has learned from everyone in the group.

Why Common Memory?

Let’s start with a simple analogy. Replace the AI assistant with a human — say, an assistant named John working at a company.

John communicates regularly with 10 employees and occasionally interacts with other AI assistants. When John talks to his boss, Victoria, he remembers what she says. Later, when John speaks with another employee, Alice, he still remembers what Victoria said — but he doesn’t necessarily share all that information. Instead, he intelligently filters what’s relevant or appropriate to say, based on the context and the corporate relationships between people.

Now imagine replacing the human John with an AI assistant named "John". We want it to work the same way: retaining useful information across conversations with multiple users while carefully filtering what it shares.

A Practical Example

- Alice interacts with the AI assistant John.

- She asks him to polish and send a report to Victoria.

- John replies, “Nice report, Alice!”

- Alice responds, “Thanks! Bob helped me write it.”

- AI John stores this fact in common memory.

- Later, when talking to Bob, John says: “Alice did a great job on the report. She mentioned you helped her. Nice work!”

This kind of cross-user memory opens up powerful opportunities. But it also raises critical challenges:

- How do we store and retrieve this information reliably?

- How do we ensure that the assistant respects user privacy, role boundaries, and social context?

- How do we make the assistant “intelligent” enough to filter and personalize memory recall?

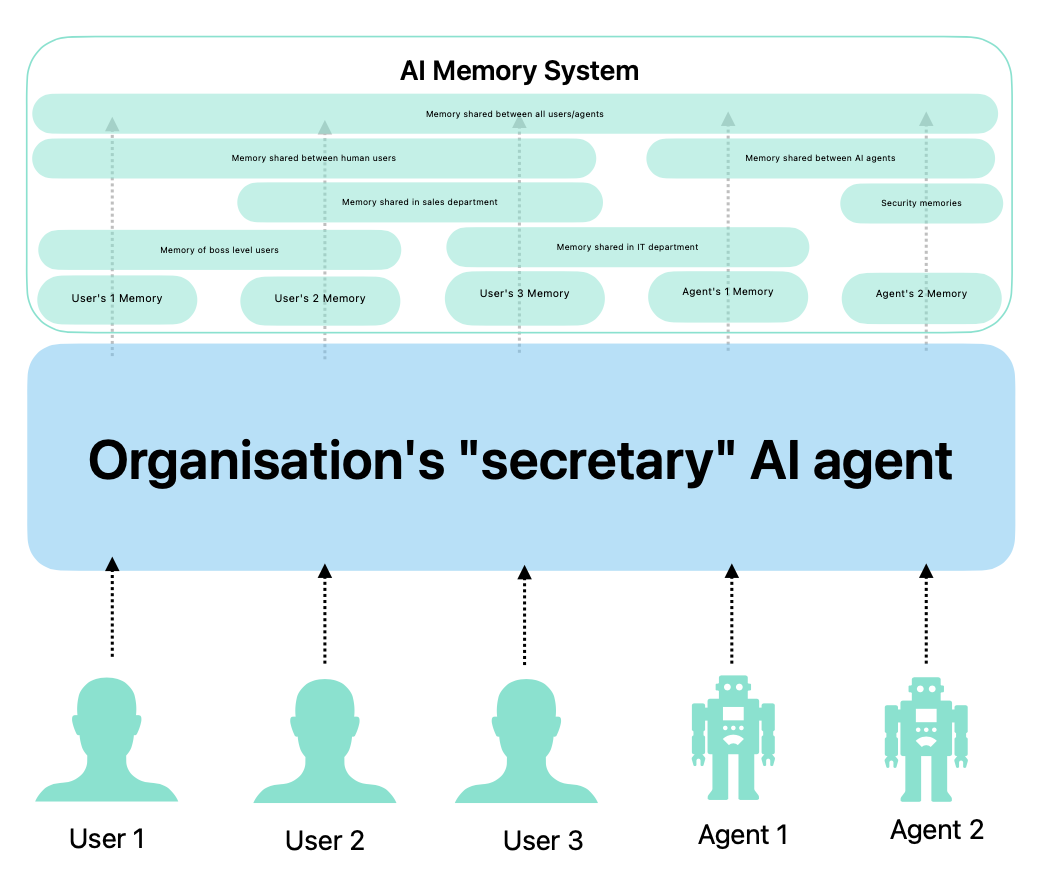

Common Memory and Multiple Agents

Sharing memory across conversations with different people is interesting in itself. The idea becomes even more interesting, however, when the AI agent communicates not only with humans but also with other AI agents and with tools.

A little more about communication with tools. Most of us have heard of MCP (Model Context Protocol), which lets AI agents use tools: when needed, the agent calls a tool. But the protocol also supports communication in the other direction: an MCP server can send notifications to the agent. So tools integrated with an AI agent are also a source of input.

- Every conversation between a human and the AI agent is a data input/output channel.

- Every communication between the AI agent and another AI agent (over the A2A protocol or similar) is a data input/output channel.

- Every communication between a tool and the AI agent is a data input/output channel.

Together, all these inputs form the AI agent's common memory.

This memory should store not only the data itself but also the channel it came from, plus additional data about the relationships between channels. The AI agent can then use all of this to build a conversation context for each channel securely, including only what that channel is allowed to see.
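To make this more concrete, here is a minimal Python sketch of such a memory, assuming a very simple model: every channel has an id and a kind (human, AI agent, or tool), every stored fact keeps exactly one source channel, and relationships are plain undirected links between channel ids. The names `Channel`, `MemoryRecord`, and `CommonMemory` are hypothetical and not taken from any existing framework.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class ChannelKind(Enum):
    HUMAN = "human"  # a user talking to the agent
    AGENT = "agent"  # another AI agent, e.g. over A2A
    TOOL = "tool"    # a tool or device, e.g. an MCP server sending notifications


@dataclass(frozen=True)
class Channel:
    id: str
    kind: ChannelKind


@dataclass
class MemoryRecord:
    text: str
    source: Channel  # always exactly one source channel
    timestamp: float = field(default_factory=time.time)


class CommonMemory:
    """A single store fed by all of one agent's channels (hypothetical sketch)."""

    def __init__(self) -> None:
        self.records: list[MemoryRecord] = []
        # channel id -> ids of related channels (undirected links, for simplicity)
        self.relations: dict[str, set[str]] = {}

    def remember(self, text: str, source: Channel) -> MemoryRecord:
        record = MemoryRecord(text, source)
        self.records.append(record)
        return record

    def relate(self, a: str, b: str) -> None:
        self.relations.setdefault(a, set()).add(b)
        self.relations.setdefault(b, set()).add(a)

    def context_for(self, channel: Channel) -> list[str]:
        """Facts from the channel itself or from channels related to it."""
        visible = {channel.id} | self.relations.get(channel.id, set())
        return [r.text for r in self.records if r.source.id in visible]


alice = Channel("alice", ChannelKind.HUMAN)
bob = Channel("bob", ChannelKind.HUMAN)
thermostat = Channel("thermostat", ChannelKind.TOOL)

memory = CommonMemory()
memory.remember("Bob helped Alice write the report", alice)
memory.remember("Setpoint changed to 18°C", thermostat)
memory.relate("alice", "bob")

print(memory.context_for(bob))  # ['Bob helped Alice write the report']
```

A real system would probably extract facts with an LLM rather than store raw text, and would need richer relationship rules than simple links.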

Possible Implementation

I see two main approaches to implementing common memory:

- Labeling of Memories: When input is received from a channel, the AI agent's memory system extracts useful information and labels it with metadata specifying which channels or groups of channels are allowed to access it in future interactions.

- Real-Time Contextualization: The memory system stores summaries and facts from all inputs, maintaining an association with the originating channel. When the AI agent needs to recall memory, it analyzes the current context and retrieves relevant information based on the requesting channel and its relationship to others.

The first approach is a kind of input preprocessing, while the second focuses more on dynamic, context-aware recall during conversations.
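One way to see the difference between the two approaches is to look at when the access decision is made. The following sketch is hypothetical and deliberately simplified: a plain relationship table stands in for what would likely be LLM-based analysis of content and context. Approach 1 computes the audience once, at write time; approach 2 evaluates the current relationships on every recall.

```python
from dataclasses import dataclass

# Hypothetical relationship table: source channel -> channels it may share with.
Relations = dict[str, set[str]]


@dataclass
class Fact:
    text: str
    source: str
    allowed: frozenset[str] = frozenset()  # audience label, used by approach 1 only


def label_on_write(text: str, source: str, relations: Relations) -> Fact:
    """Approach 1: decide the audience once, when the fact is stored."""
    audience = {source} | relations.get(source, set())
    return Fact(text, source, frozenset(audience))


def recall_labeled(facts: list[Fact], requester: str) -> list[str]:
    """Approach 1 at recall time: just check the stored label."""
    return [f.text for f in facts if requester in f.allowed]


def recall_in_context(facts: list[Fact], requester: str, relations: Relations) -> list[str]:
    """Approach 2: decide at recall time, using the relationships as they are now."""
    return [
        f.text for f in facts
        if f.source == requester or requester in relations.get(f.source, set())
    ]


relations: Relations = {"alice": set()}
facts = [label_on_write("Bob helped with the report", "alice", relations)]

relations["alice"].add("bob")  # later, the system learns Alice and Bob work together

print(recall_labeled(facts, "bob"))                # [] (label fixed at write time)
print(recall_in_context(facts, "bob", relations))  # ['Bob helped with the report']
```

The trade-off this illustrates: stored labels are cheap to check at recall time but can go stale when relationships change, while contextual recall always reflects the current relationships at the cost of extra work on every request.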

In both cases, each memory record always has exactly one channel as its source. By default, it also has at least one target channel: the source itself. The set of targets can be extended to multiple channels based on the relationships defined in the system and on the contents of the memory record.
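This source/target rule can be sketched as a small data structure. It is an illustration under assumptions: topics are plain string tags (in practice an LLM might assign them), and a hypothetical `SHAREABLE` table says which topics may flow from one channel to another.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryRecord:
    text: str
    source: str                                     # exactly one source channel
    topics: set[str] = field(default_factory=set)   # assumed content tags
    targets: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        # By default, the only target is the source itself.
        self.targets.add(self.source)


# Hypothetical relationship model: (from_channel, to_channel) -> topics that may flow.
SHAREABLE: dict[tuple[str, str], set[str]] = {
    ("alice", "bob"): {"work"},
    ("alice", "victoria"): {"work", "reports"},
    ("thermostat", "alice"): {"home"},
    ("thermostat", "bob"): {"home"},
}


def expand_targets(record: MemoryRecord) -> MemoryRecord:
    """Add every channel the source may share at least one of the record's topics with."""
    for (src, dst), allowed in SHAREABLE.items():
        if src == record.source and record.topics & allowed:
            record.targets.add(dst)
    return record


note = expand_targets(MemoryRecord("Bob helped write the Q3 report", "alice", {"work"}))
print(sorted(note.targets))  # ['alice', 'bob', 'victoria']

private = expand_targets(MemoryRecord("Alice has a doctor's appointment", "alice", {"health"}))
print(sorted(private.targets))  # ['alice']
```

Here both relationships and content matter: the same source channel produces a widely shared record and a private one, depending on the record's topics.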

Defining Relationships

One of the biggest challenges is how to define and manage relationships between channels. For example, if Alice and Bob are colleagues, the AI agent should understand this relationship and use it to determine what information is appropriate to share.

There are two main strategies for this as well:

- Predefined Relationship Structures: Relationships between channels can be explicitly defined and provided as part of the system’s configuration. This could be done using a graph database or a simple JSON structure. These relationships can be exposed to the AI agent via an MCP server, allowing the agent to request and query them as needed.

- Dynamic Relationship Learning: The AI agent can infer relationships over time by analyzing the content and patterns of conversations. Initially, when memory is empty, the agent may treat all channels as equally trustworthy, much as humans or animals form early attachments (e.g., the first object a duckling sees becomes “mom”). As the agent gains experience, it can gradually build a nuanced understanding of inter-channel relationships and use that to inform future behavior.
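The two strategies can also coexist. The following hypothetical sketch loads predefined relationships from a JSON structure (strategy 1) and falls back to a learned score for pairs with no explicit relationship (strategy 2). Every unknown pair starts at the same default score, mirroring the idea that channels are initially treated as equally trustworthy. The update rule (a moving average toward 1.0 or 0.0) is an arbitrary choice for illustration, not an established method, and exposing the graph through an MCP server is left out.

```python
from __future__ import annotations

import json

# Hypothetical predefined configuration (strategy 1), e.g. maintained by an admin.
CONFIG = """
{
  "relationships": [
    {"between": ["alice", "bob"], "type": "colleague"},
    {"between": ["victoria", "alice"], "type": "manager-report"}
  ]
}
"""


class RelationshipGraph:
    """Explicit relationships first, learned scores as a fallback (hypothetical)."""

    def __init__(self, default_score: float = 0.5) -> None:
        self.explicit: dict[frozenset[str], str] = {}
        self.learned: dict[frozenset[str], float] = {}
        self.default_score = default_score  # every unknown pair starts equal

    def load(self, raw: str) -> None:
        for rel in json.loads(raw)["relationships"]:
            self.explicit[frozenset(rel["between"])] = rel["type"]

    def observe(self, a: str, b: str, positive: bool, rate: float = 0.1) -> None:
        """Strategy 2: nudge the pair's score toward 1.0 or 0.0 after an interaction."""
        key = frozenset((a, b))
        current = self.learned.get(key, self.default_score)
        target = 1.0 if positive else 0.0
        self.learned[key] = current + rate * (target - current)

    def relation(self, a: str, b: str) -> str | float:
        key = frozenset((a, b))
        if key in self.explicit:
            return self.explicit[key]
        return self.learned.get(key, self.default_score)


graph = RelationshipGraph()
graph.load(CONFIG)
graph.observe("bob", "carol", positive=True)

print(graph.relation("bob", "alice"))  # colleague
print(graph.relation("carol", "bob"))  # roughly 0.55
print(graph.relation("dave", "erin"))  # 0.5 (no data yet)
```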

One More Example: Smart Home Integration

Imagine an AI assistant integrated with a smart home system. There’s a family of two — Alice and Bob — living together in a connected home. The AI assistant, named "Charly," can access various sensors and devices throughout the house, such as thermostats, lights, and security cameras, via MCP servers. Communication with smart home components is bidirectional, meaning devices can notify Charly about changes or events.

Here’s how such a scenario might play out:

- Bob comes home and asks Charly to set the thermostat to 20°C.

- Charly adjusts the thermostat accordingly.

- Later, Alice comes home and says, “It’s hot. What’s the temperature outside today?”

- Charly checks a weather API and replies, “It’s 25 degrees outside.”

- Alice manually changes the thermostat to 18°C.

- Charly receives a notification from the thermostat about the manual change.

- Later, Bob returns and says, “It’s cold. What’s the temperature inside? I asked you to set it to 20.”

- Charly responds, “The temperature is currently 18 degrees. Alice changed it after you left.”

This scenario demonstrates how common memory allows Charly to maintain contextual awareness across users and devices. Charly operates with three communication channels:

- Bob – a voice input/output channel.

- Alice – a separate voice input/output channel.

- Thermostat – an MCP-integrated device channel that reports state changes.

Charly's memory system stores events from these channels, associates them with the relevant actors, and infers cause-effect relationships. When Bob questions the unexpected thermostat setting, Charly recalls not only the device’s notification but also Alice’s earlier remark about the temperature being too hot. The memory system links these facts by time and context to construct a meaningful explanation.
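Here is a rough sketch of how time-based linking could produce Charly's explanation, assuming a hypothetical event log where each event records its time, source channel, kind, and data. The "cause" heuristic (another person's remark shortly before a manual change) is an assumption made for this example, not a general causal-inference method.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    t: float      # minutes since the first event (hypothetical clock)
    channel: str  # "bob", "alice", "thermostat", ...
    kind: str     # "request", "remark", or "state_change"
    data: dict = field(default_factory=dict)


EVENTS = [
    Event(0, "bob", "request", {"setpoint": 20}),
    Event(60, "alice", "remark", {"text": "It's hot."}),
    Event(62, "thermostat", "state_change", {"setpoint": 18, "manual": True}),
]


def explain_setpoint(events: list[Event], asker: str, window: float = 10) -> str:
    """Report the latest setpoint and link it to a likely cause by time proximity."""
    change = max((e for e in events if e.kind == "state_change"), key=lambda e: e.t)
    reply = f"The setpoint is currently {change.data['setpoint']}°C."
    # Assumed heuristic: another person's remark made within `window` minutes
    # before a manual change is treated as its probable cause.
    causes = [
        e for e in events
        if e.kind == "remark" and e.channel != asker and 0 <= change.t - e.t <= window
    ]
    if change.data.get("manual") and causes:
        name, text = causes[-1].channel.capitalize(), causes[-1].data["text"]
        reply += f' It was changed manually, right after {name} said: "{text}"'
    return reply


print(explain_setpoint(EVENTS, asker="bob"))
# The setpoint is currently 18°C. It was changed manually, right after Alice said: "It's hot."
```

In a real system, this reply would also pass through a contextual filtering step, so that Alice's remark is quoted to Bob only if sharing it is appropriate.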

Crucially, Charly also applies contextual filtering — ensuring that information shared across users respects their privacy and relationships. This kind of behavior is what turns an AI assistant from a simple command executor into a helpful, socially aware digital companion.

Next Steps

I plan to experiment with this idea in my AI agent framework, CleverChatty. Fortunately, the core framework won’t need any changes — I’ll simply implement a new memory system that supports common memory.

CleverChatty already supports memory over both the MCP and A2A protocols, so integrating a new memory module with common memory capabilities should be straightforward.

I’ll share my progress and results in future posts.

Conclusion

Common memory offers a compelling way to make AI agents more context-aware, socially intelligent, and genuinely helpful across conversations with multiple users and systems. By enabling an agent to retain and reason over shared experiences — while respecting boundaries and relationships — we open the door to more natural and effective interactions.

Of course, building such a memory system introduces complex challenges: from relationship modeling and context filtering to trust management and privacy. But these challenges are worth tackling if we want AI agents to move beyond isolated interactions and become truly collaborative partners.

I’m excited to explore these ideas further within the CleverChatty framework and see how far this concept can go in practice. If you're working on similar problems or have thoughts on improving the approach — I’d love to hear from you.