I'm excited to introduce a new package for Go developers: CleverChatty.

CleverChatty implements the core functionality of an AI chat system. It encapsulates the essential business logic required for building AI-powered assistants or chatbots — all while remaining independent of any specific user interface (UI).

In short, CleverChatty is a fully working AI chat backend, just without a graphical UI. It supports many popular LLM providers, including OpenAI, Anthropic (Claude), Ollama, and others, and it integrates with external tools through the Model Context Protocol (MCP).

Why Another AI Chat Tool?

Over the past year, I've conducted extensive experiments with AI chat systems and assistants. Inspired by the opportunities provided by MCP, I wanted to build my own tools. However, I quickly found that most existing AI chats weren't ideal MCP clients — particularly when it came to support for Server-Sent Events (SSE) transport. This made it difficult to properly test and verify my ideas for MCP servers.

Eventually, I discovered MCPHost, which came close but did not yet support the SSE transport at the time. I was able to modify it to add SSE support, but another challenge remained:

MCPHost tightly coupled chat logic with a command-line interface, making it cumbersome for me to extend or experiment further.

That's when I decided to create CleverChatty — a clean, modular AI chat engine with no built-in UI, based on ideas from MCPHost but designed for much greater flexibility.

Need a UI? Use CleverChatty CLI

Along with the package, I’ve also released CleverChatty CLI, a lightweight command-line client.

It allows you to start an AI chat directly in your terminal, with full support for MCP and external tools — all powered by the CleverChatty core.

It’s simple to install and works on any major platform.

Roadmap for CleverChatty

Here are some of the next features and improvements I plan to add:

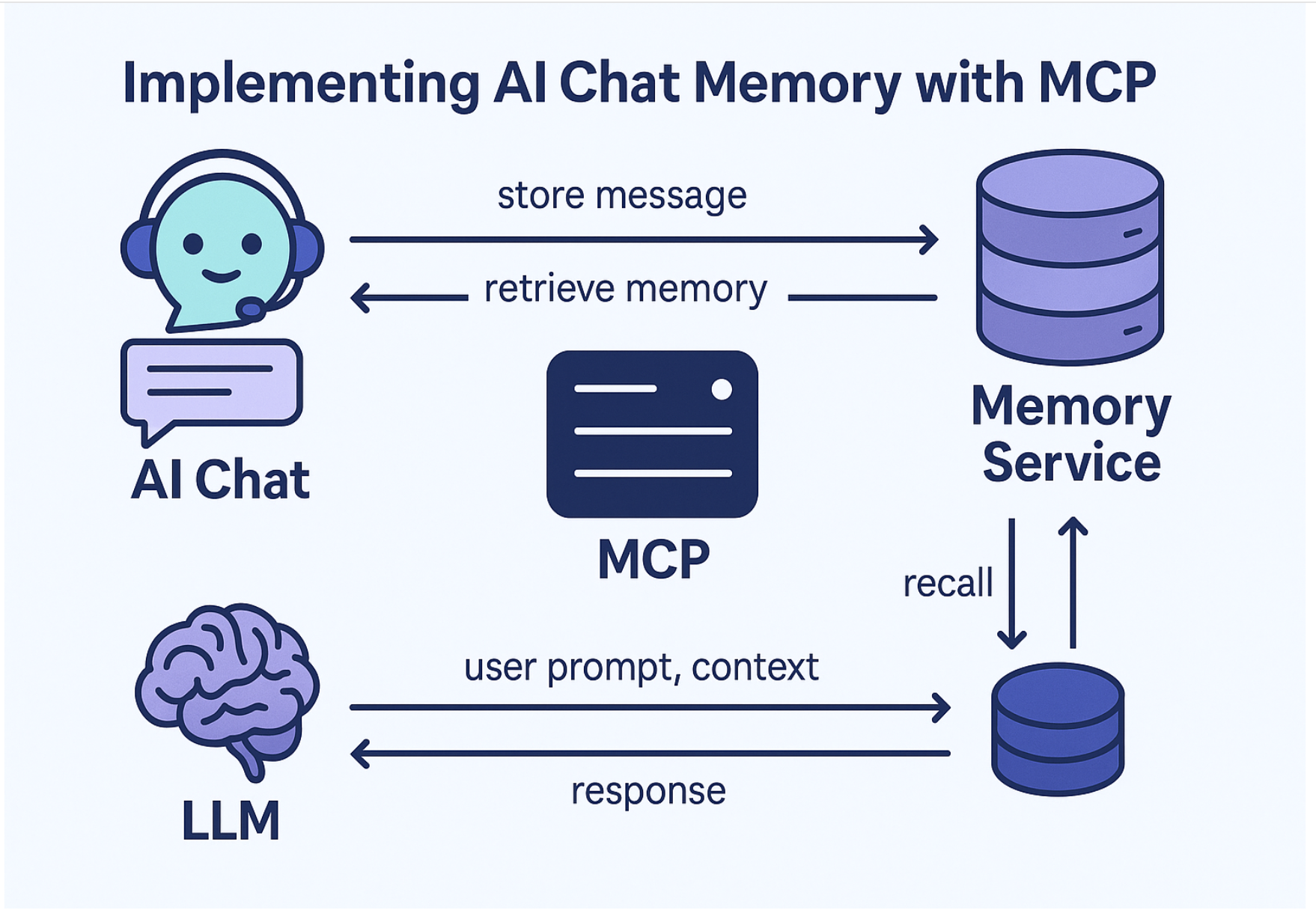

1. AI Assistant Memory via MCP

I want AI chats to have persistent memory — not just session-based memory but long-term knowledge that can grow over time.

In my recent blog post, I describe how MCP can be used to implement this, allowing an independent memory service to plug into an AI chat through a standardized MCP server.

I'm working toward a proof of concept in which AI memory is external, modular, and vendor-agnostic.
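To make the idea more concrete, here is a minimal sketch of how a chat loop could consult an external memory before prompting the model. `MemoryStore`, `keywordMemory`, and `buildPrompt` are hypothetical names. In the MCP-based design, `Remember` and `Recall` would be tools exposed by a separate memory server. Here, a toy keyword matcher stands in for that server.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// MemoryStore is a hypothetical interface for an external memory service.
// In the MCP-based design these operations would be tools on a memory server.
type MemoryStore interface {
	Remember(fact string)
	Recall(query string, limit int) []string
}

// keywordMemory is a toy in-process stand-in: a fact matches when it shares
// at least one word of four or more characters with the query.
type keywordMemory struct {
	facts []string
}

func (m *keywordMemory) Remember(fact string) {
	m.facts = append(m.facts, fact)
}

// tokens lower-cases s and splits it on anything that is not a letter or digit.
func tokens(s string) []string {
	return strings.FieldsFunc(strings.ToLower(s), func(r rune) bool {
		return !unicode.IsLetter(r) && !unicode.IsDigit(r)
	})
}

func (m *keywordMemory) Recall(query string, limit int) []string {
	wanted := map[string]bool{}
	for _, w := range tokens(query) {
		if len([]rune(w)) >= 4 {
			wanted[w] = true
		}
	}
	var hits []string
	for _, fact := range m.facts {
		if len(hits) == limit {
			break
		}
		for _, w := range tokens(fact) {
			if wanted[w] {
				hits = append(hits, fact)
				break
			}
		}
	}
	return hits
}

// buildPrompt prepends any recalled facts to the user's message.
func buildPrompt(mem MemoryStore, userPrompt string) string {
	facts := mem.Recall(userPrompt, 3)
	if len(facts) == 0 {
		return userPrompt
	}
	var b strings.Builder
	b.WriteString("Known facts about the user:\n")
	for _, f := range facts {
		b.WriteString("- " + f + "\n")
	}
	b.WriteString("\nUser: " + userPrompt)
	return b.String()
}

func main() {
	mem := &keywordMemory{}
	mem.Remember("The user lives in Kyiv.")
	mem.Remember("The user prefers answers with Go examples.")
	fmt.Println(buildPrompt(mem, "What is the weather in Kyiv today?"))
}
```

Because the chat only talks to the `MemoryStore` interface, the toy store could be replaced by an MCP-backed one without changing `buildPrompt`.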

2. Full Support for the Updated MCP Specification

The MCP specification has recently been updated, and I plan to:

- Implement all new features from the latest MCP spec.

- Add support for the new Streamable HTTP transport.

- Integrate OAuth2 authentication for accessing protected MCP servers.

3. A2A Protocol Support

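The A2A (Agent2Agent) protocol is a new open standard, introduced by Google, for communication between AI agents. It lets independently built assistants discover each other's capabilities and delegate tasks to one another.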

I aim to implement A2A support alongside MCP, offering greater flexibility when integrating external services and building more powerful assistants.

Ultimately, my goal is for CleverChatty to become a full-featured, extensible AI chat system that’s easy to embed in any project.

Toward a Nicer GUI

While CleverChatty currently focuses on the backend, I'd love to see a more user-friendly graphical interface built around it in the future.

Since I'm not a frontend developer myself, I'd welcome collaboration: if you're a frontend or desktop app developer interested in contributing, feel free to reach out!

Quick Usage Example

Here's a simple Go example showing how to use CleverChatty:

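package main

import (
	"context"
	"fmt"
	"os"

	"github.com/gelembjuk/cleverchatty"
)

const configFile = "config.json"

func main() {
	// Load the LLM provider and MCP server settings from config.json.
	config, err := cleverchatty.LoadMCPConfig(configFile)
	if err != nil {
		fmt.Printf("Error loading config: %v\n", err)
		os.Exit(1)
	}

	// Create the assistant from the loaded configuration.
	cleverChattyObject, err := cleverchatty.GetCleverChatty(config, context.Background())
	if err != nil {
		fmt.Printf("Error creating assistant: %v\n", err)
		os.Exit(1)
	}
	// Shut the assistant down when main returns. os.Exit skips deferred
	// calls, so Finish is also called explicitly on the error path below.
	defer cleverChattyObject.Finish()

	// Send a single prompt; the model may call MCP tools to answer it.
	response, err := cleverChattyObject.Prompt("What is the weather like outside today?")
	if err != nil {
		fmt.Printf("Error getting response: %v\n", err)
		cleverChattyObject.Finish()
		os.Exit(1)
	}

	fmt.Println("Response:", response)
}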

Example config.json:

{
  "log_file_path": "",
  "model": "ollama:mistral-nemo",
  "mcpServers": {
    "weather_server": {
      "url": "http://weather-service/mcp",
      "headers": []
    },
    "get_location_server": {
      "command": "get_location",
      "args": ["--location"]
    }
  },
  "anthropic": {
    "apikey": "sk-**************AA",
    "base_url": "https://api.anthropic.com/v1",
    "default_model": "claude-2"
  },
  "openai": {
    "apikey": "sk-********0A",
    "base_url": "https://api.openai.com/v1",
    "default_model": "gpt-3.5-turbo"
  },
  "google": {
    "apikey": "AI***************z4",
    "default_model": "google-bert"
  }
}

This minimal example demonstrates how to:

- Load configuration.

- Create a CleverChatty instance.

- Send a prompt and receive a response.

The setup uses two MCP servers to extend what the model can do: a local STDIO server (get_location_server) and a remote SSE server (weather_server).

Final Thoughts

I hope you find CleverChatty useful!

I'm actively working on new features and improvements. Feedback, ideas, and contributions are very welcome — feel free to open issues or contact me directly.

Thanks for checking it out!