In this post, I’ll walk through how to integrate the Mem0 memory model with CleverChatty-CLI, a command-line framework for building AI assistants.

Spoiler: It turned out to be a lot easier than I expected.

Quick Overview of the Projects

Before we dive into the integration, here’s a quick recap of the two key components involved:

- Mem0: “Mem0” (pronounced mem-zero) adds an intelligent memory layer to AI assistants and agents. It enables personalized experiences by remembering user preferences, adapting to their needs, and continuously learning over time. It’s particularly useful for customer support bots, personal assistants, and autonomous agents.

- CleverChatty-CLI: A command-line interface for interacting with LLM-based chat systems. It supports MCP (Model Context Protocol), RAG (Retrieval-Augmented Generation), and I plan to add support for A2A (Agent-to-Agent) communication soon. The CLI is built for experimentation, testing, and prototyping AI interactions.

Previously, I added memory support to CleverChatty-CLI and covered it in earlier posts.

The idea was to define a simple memory interface via MCP, so memory backends could be swapped easily. Now, Mem0 gives us a real-world way to test that out.

The Mem0 team did a great job creating a simple API for their memory backend, along with a web dashboard for managing memories. This makes integration with various AI assistants — including CleverChatty-CLI — straightforward.

Earlier, I experimented with my own memory backend, cleverchatty-memory, which was still at the prototype stage. With Mem0, I can now use a production-ready memory backend for CleverChatty-CLI.

The AI Memory Interface (via MCP)

The memory interface I proposed is minimal and straightforward — just two tools:

remember

A tool that accepts:

- role: e.g., "user" or "assistant"
- contents: the text to be stored in memory. The service decides what to do with it: store everything or extract key facts.

recall

A tool that accepts:

- query: a search string to retrieve relevant memory. If omitted, it returns default or general memories.
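To show how small this interface is, here is a minimal in-memory stand-in written in Python. The class name `InMemoryBackend` and the word-overlap matching are assumptions made for this sketch only; a real backend such as Mem0 decides for itself what to store and how to rank results.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryBackend:
    """Hypothetical toy backend exposing the two tools: remember and recall."""

    records: list[tuple[str, str]] = field(default_factory=list)

    def remember(self, role: str, contents: str) -> str:
        # Store everything verbatim; a real service might extract key facts instead.
        self.records.append((role, contents))
        return "ok"

    def recall(self, query: str = "") -> str:
        # With no query, return everything; otherwise keep records that share
        # at least one word with the query.
        words = set(query.lower().split())
        hits = [
            text
            for _, text in self.records
            if not words or words & set(text.lower().split())
        ]
        return "\n".join(f"Memory: {text}" for text in hits) if hits else "none"


backend = InMemoryBackend()
backend.remember("user", "I am vegetarian")
print(backend.recall("vegetarian recipes"))
```

Any service that offers these two calls with the same parameters could stand in for this class, which is what makes the backend swappable.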

Note: The AI assistant should not use this server as a regular MCP server. It always calls the recall tool after receiving the user's prompt and before sending it to the LLM, and it calls the remember tool after the LLM's response to store the exchange. In this post, I focus only on this approach to memory integration. The same server can still be used as a regular MCP server, which lets the LLM decide when to use memory.
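The call order described in this note can be sketched as a single chat turn. This only illustrates the flow and is not CleverChatty's actual code: the `chat_turn` function, the `llm` callable, the prompt layout, and the choice to store both the user message and the reply are all assumptions.

```python
from typing import Callable


def chat_turn(
    prompt: str,
    recall: Callable[[str], str],
    remember: Callable[[str, str], str],
    llm: Callable[[str], str],
) -> str:
    """One chat turn: recall first, ask the LLM, then remember both messages."""
    memories = recall(prompt)
    # Hypothetical prompt layout: recalled notes go in front of the user's message.
    context = "" if memories == "none" else f"Known facts:\n{memories}\n\n"
    answer = llm(context + prompt)
    remember("user", prompt)
    remember("assistant", answer)
    return answer


# Tiny stand-ins so the sketch runs without a memory server or a model.
stored: list[tuple[str, str]] = []
reply = chat_turn(
    "Suggest a dinner",
    recall=lambda query: "Memory: User is vegetarian",
    remember=lambda role, text: stored.append((role, text)) or "ok",
    llm=lambda full_prompt: f"(model saw {len(full_prompt)} characters)",
)
print(reply)
print(stored)
```

Because the assistant drives both calls itself, memory works even with models that are unreliable at tool calling.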

Implementing the Interface with Mem0

To integrate Mem0 with CleverChatty, I use a small MCP server that acts purely as an adapter. It would be possible to add a Mem0 API client directly to the AI assistant (I could modify CleverChatty for this), but that would defeat the core idea of using MCP with a defined interface: memory backends can be swapped without changing the main AI assistant code.

Here’s a basic MCP server using Mem0 as the memory backend:

    import os

    from mcp.server.fastmcp import FastMCP
    from mem0 import MemoryClient

    # Credentials are read from the environment.
    mem0_api_key = os.environ.get("MEM0_API_KEY", "")
    mem0_user_id = os.environ.get("MEM0_USER_ID", "")

    client = MemoryClient(api_key=mem0_api_key)

    mcp = FastMCP("Memory Server")


    def is_configured() -> bool:
        """Check that both the API key and the user ID are set"""
        return bool(mem0_api_key) and bool(mem0_user_id)


    @mcp.tool()
    def remember(role: str, contents: str) -> str:
        """Remembers new data in the memory"""
        if not is_configured():
            return "ERROR: Server is not configured with a valid API key and user ID."
        # Pass the message to Mem0 in chat format; Mem0 decides what to keep.
        client.add([
            {"role": role, "content": contents},
        ], user_id=mem0_user_id)
        return "ok"


    @mcp.tool()
    def recall(query: str = "") -> str:
        """Recall the memory"""
        if not is_configured():
            return "ERROR: Server is not configured with a valid API key and user ID."
        results = client.search(query, user_id=mem0_user_id)
        if not results:
            return "none"
        # One line per memory, each prefixed with "Memory: ".
        return "\n".join("Memory: " + item.get("memory", "") for item in results)


    if __name__ == "__main__":
        mcp.run(transport="stdio")

This MCP server implements the remember and recall tools and uses the Mem0 API client to talk to the memory backend. It checks that an API key and user ID are set before performing any operation.

Mem0 credentials are expected in the environment variables MEM0_API_KEY and MEM0_USER_ID. Because the server uses STDIO transport, the assistant starts it as a child process and passes the credentials through that process's environment.
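A small pre-flight check can confirm that both variables are present before the server is wired into the assistant. This is an optional sketch, not part of the server above; the helper name `missing_credentials` is hypothetical.

```python
import os
from typing import Mapping

REQUIRED_VARS = ("MEM0_API_KEY", "MEM0_USER_ID")


def missing_credentials(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the names of required variables that are unset or blank."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


missing = missing_credentials()
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("Mem0 credentials found")
```

Run it in the same environment that will launch the server, since a STDIO server only sees the variables its parent process gives it.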

Running the Server with CleverChatty-CLI

I use uv to run the MCP memory server. Here’s how to configure CleverChatty-CLI to use it:

1. JSON Config for CleverChatty

In your test folder, create a config file for CleverChatty-CLI. For example:

    {
      "log_file_path": "",
      "debug_mode": false,
      "model": "ollama:qwen2.5:3b",
      "system_instruction": "",
      "mcpServers": {
        "Memory_Server": {
          "command": "uv",
          "args": [
            "run",
            "--directory",
            "/...path_to_my_test_folder../test/mem0-mcp",
            "main.py"
          ],
          "env": {
            "MEM0_API_KEY": "m0-GC**********ng",
            "MEM0_USER_ID": "roman"
          },
          "interface": "memory"
        }
      }
    }
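Before starting the chat, it can help to sanity-check a config like this one. The sketch below parses the JSON and lists every server tagged with "interface": "memory", along with any Mem0 variables missing from its env block. The helper name `memory_servers` is hypothetical, and treating that tag as what marks the memory server is an assumption based on the config above.

```python
import json

MEM0_VARS = ("MEM0_API_KEY", "MEM0_USER_ID")


def memory_servers(config_text: str) -> dict[str, list[str]]:
    """Map each server tagged "interface": "memory" to its missing env names."""
    config = json.loads(config_text)
    result: dict[str, list[str]] = {}
    for name, server in config.get("mcpServers", {}).items():
        if server.get("interface") != "memory":
            continue  # a regular MCP server, not the memory backend
        env = server.get("env", {})
        result[name] = [key for key in MEM0_VARS if not env.get(key)]
    return result


# Trimmed-down sample with placeholder values instead of real credentials.
sample = """
{"mcpServers": {"Memory_Server": {
    "command": "uv", "args": ["run", "main.py"],
    "env": {"MEM0_API_KEY": "placeholder", "MEM0_USER_ID": "roman"},
    "interface": "memory"}}}
"""
print(memory_servers(sample))
```

An empty list next to the server name means both variables are set; an empty result means no server carries the memory tag.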

2. Preparing the MCP Server

Assuming your base test folder is /...path_to_my_test_folder../test/, here are the steps to set up the server:

    cd /...path_to_my_test_folder../test/
    uv init mem0-mcp
    cd mem0-mcp
    uv venv
    source .venv/bin/activate
    uv add "mcp[cli]"
    uv add "mem0ai"
    deactivate
    cd ../

Then, replace mem0-mcp/main.py with the Python code shown earlier.

The config is stored in /...path_to_my_test_folder../test/config.json.

Running the AI Chat

Prerequisite: you must have Go installed and configured on your system.

If you do not have CleverChatty-CLI installed, you can install it and then run it with:

    go install github.com/gelembjuk/cleverchatty-cli@latest
    cleverchatty-cli --config /...path_to_my_test_folder../test/config.json

Or, if you do not want to install it, you can run it directly from the source:

    go run github.com/gelembjuk/cleverchatty-cli --config /...path_to_my_test_folder../test/config.json

Testing the Integration

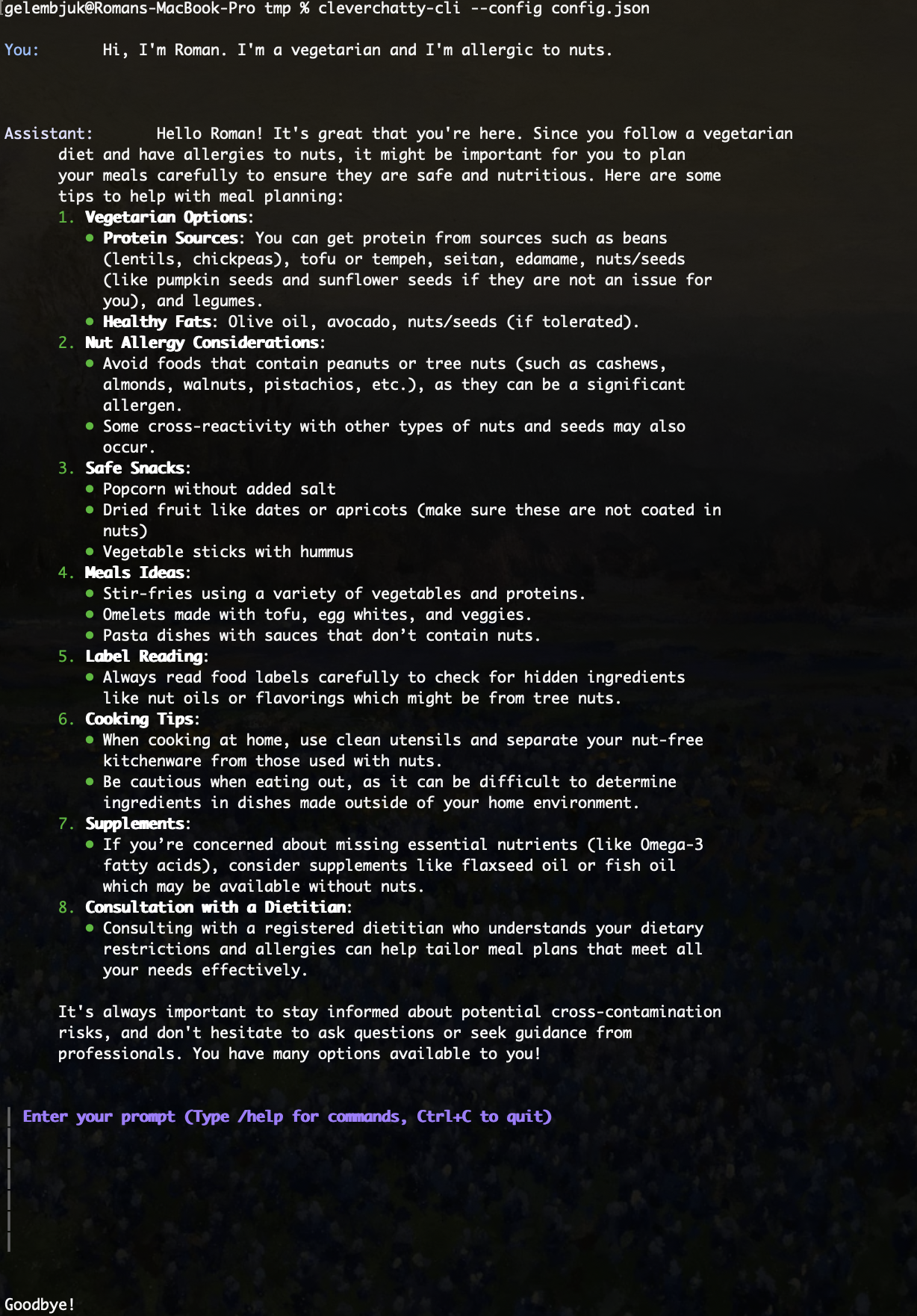

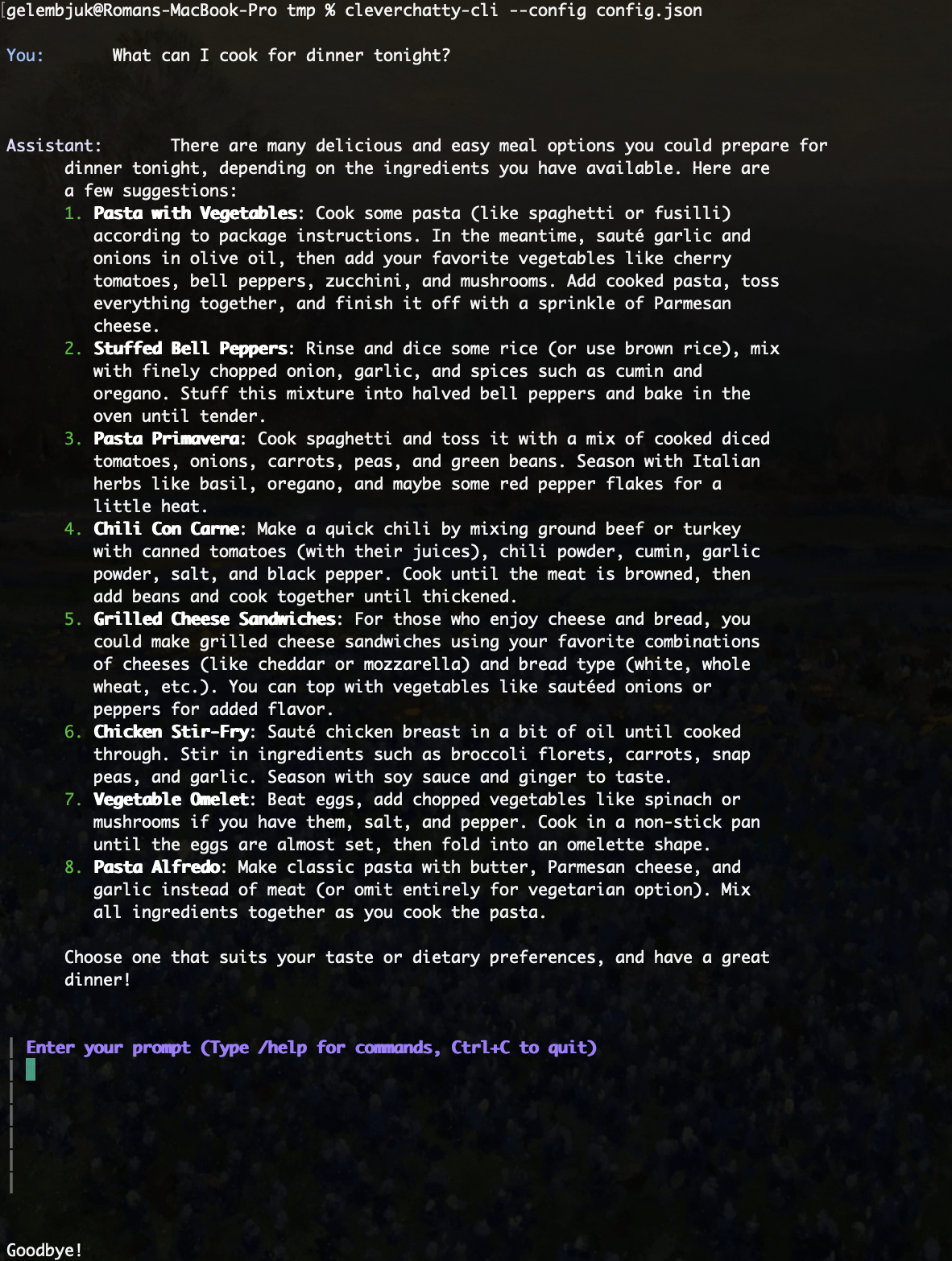

Here’s what I got when I ran the AI chat with the Mem0 memory backend:

First Interaction with empty memory

I posted a message in the chat and the AI assistant responded. When I then looked at the Mem0 dashboard, new memory records had arrived!

Second Interaction with some memory

I stopped the chat and started it again to make sure no history carried over from the first chat (the tool keeps message history only within a single chat session). I sent a new message and the AI assistant responded again. There was some delay before the chat entered the "Thinking..." state while the assistant communicated with the Mem0 server.

From the response, we can see that the AI assistant remembered that I’m vegetarian. 😊

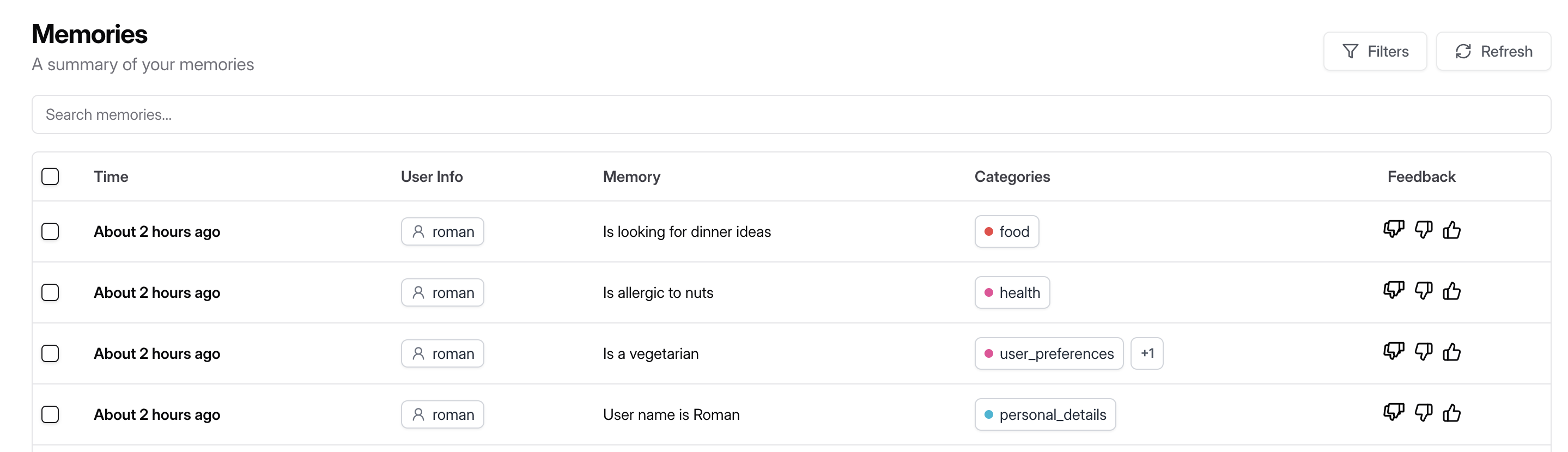

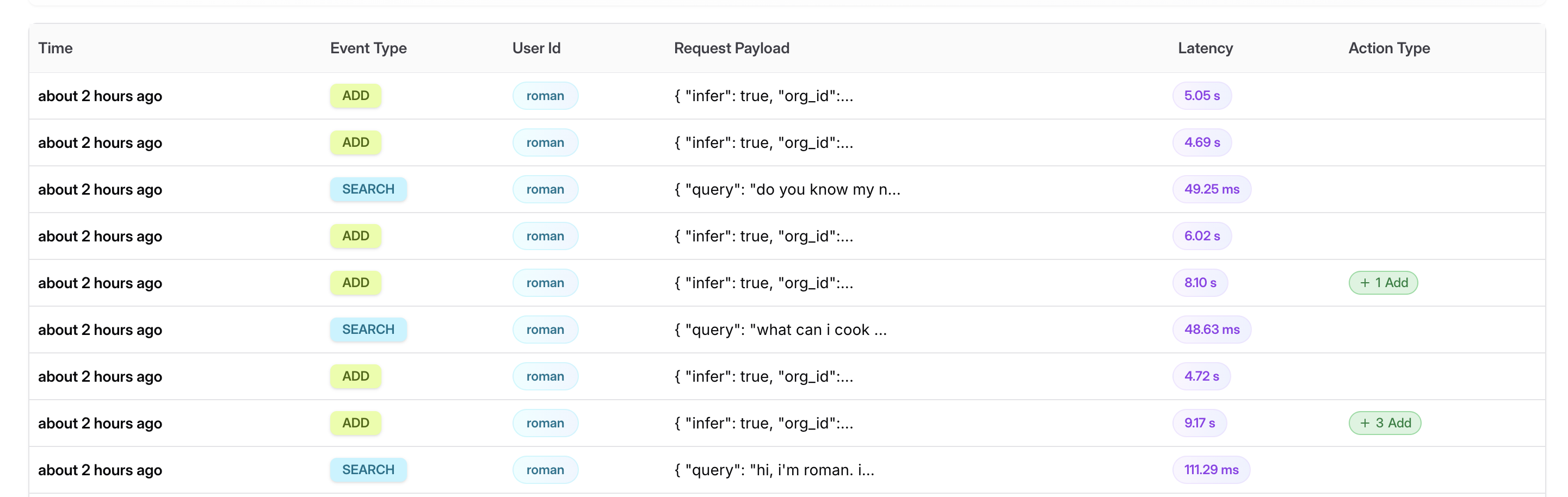

What's in the Memory?

To check what is in the memory, I used the Mem0 dashboard.

I can see all memories and also the history of requests.

Final Thoughts

This integration proved how flexible the MCP-based architecture is. Thanks to the clean memory interface, swapping in Mem0 as the backend was straightforward—no changes to the main CLI code were needed.

This is just the beginning — next steps could include experimenting with Mem0's more advanced features like memory tagging or relevance scoring, and eventually connecting multiple agents using shared memory.