In this post, I’m excited to announce a new version of CleverChatty that introduces server mode — unlocking powerful new capabilities for building AI assistants and agents that can interact over the network.

Previously, CleverChatty functioned only as a command-line interface (CLI) for interacting with LLM-based assistants. A typical use case involved a single user chatting with an AI model via the terminal. With this latest update, CleverChatty can now run as a server, enabling:

- Concurrent communication with multiple clients

- Background operation on local or cloud environments

- Integration into distributed agent systems

But that’s not all. The biggest leap forward? Full support for the A2A (Agent-to-Agent) protocol.

🌐 A2A Communication: A Two-Way Upgrade

The new A2A support brings two major enhancements:

-

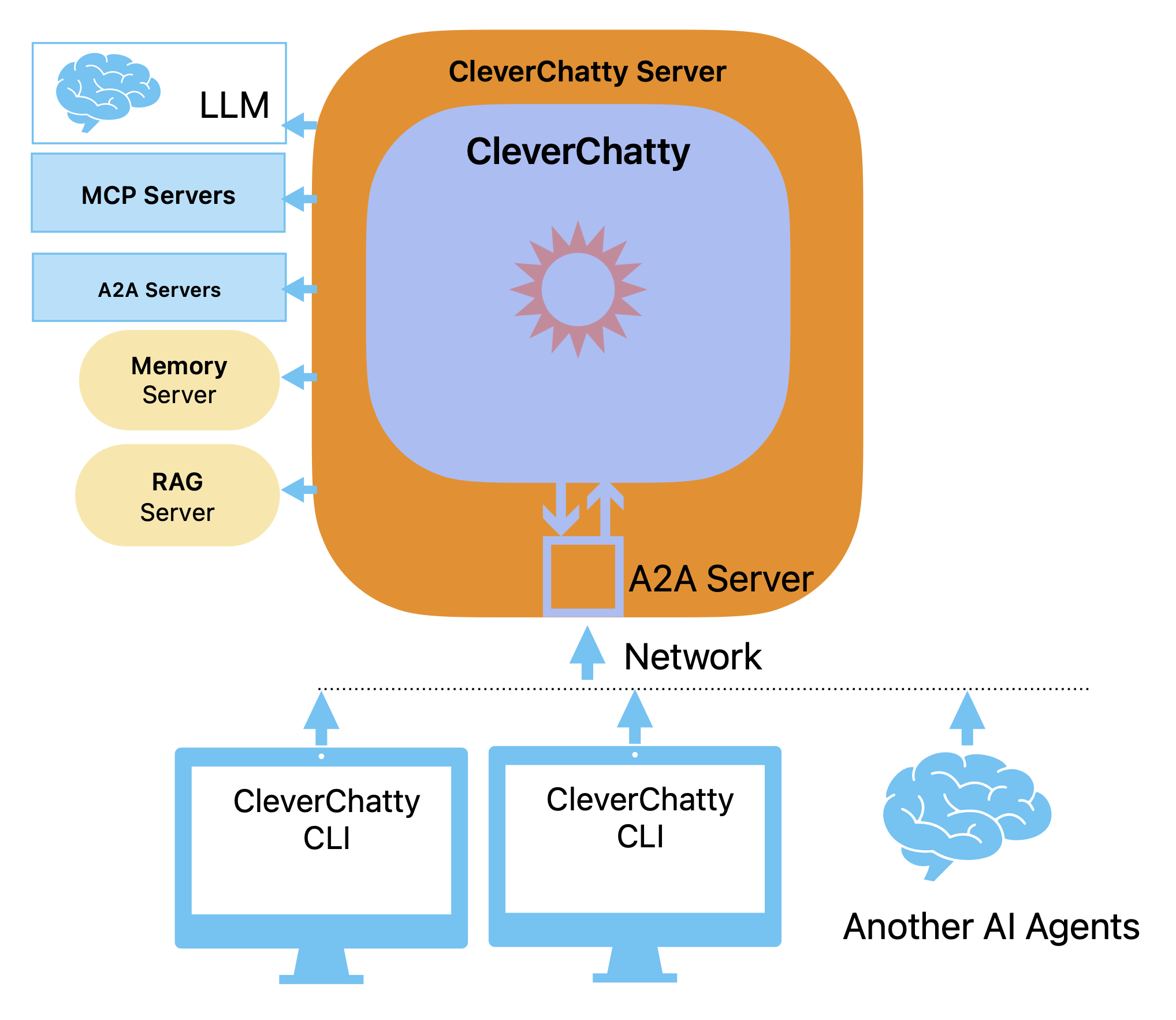

CleverChatty as an A2A Server It can now act as an A2A endpoint, accepting requests from other agents or clients — such as a CleverChatty-powered CLI tool.

-

CleverChatty as an A2A Client It can also send A2A requests to other agents. This is implemented similarly to how it uses MCP tools — meaning you can now combine both MCP and A2A tools in your AI workflows.

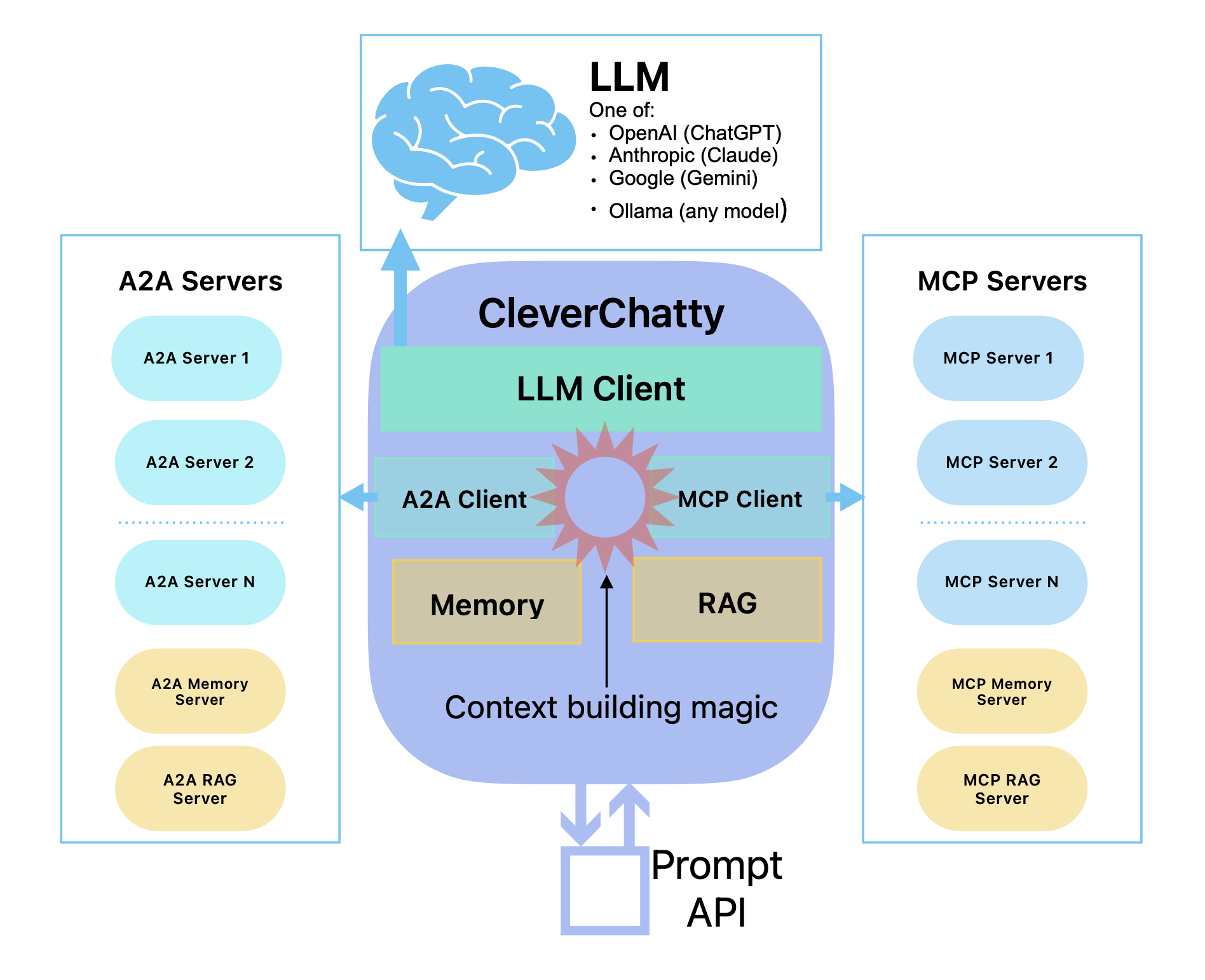

This enables CleverChatty to be a hub of intelligent interactions between multiple AI services, memory providers, and knowledge sources.
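To make the server and client roles more concrete, here is a minimal sketch of the kind of JSON-RPC request an A2A client might send to an A2A server. The `message/send` method and the message fields follow my reading of the public A2A specification; the exact shape CleverChatty expects may differ by protocol version, and the helper name `build_a2a_request` is hypothetical.

```python
import json
import uuid


def build_a2a_request(text: str) -> dict:
    """Build a JSON-RPC 2.0 envelope carrying one user text message.

    Field names are assumptions based on the public A2A specification,
    not taken from CleverChatty's source.
    """
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),       # request id, echoed back in the response
        "method": "message/send",      # assumed A2A method name
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": str(uuid.uuid4()),
            }
        },
    }


if __name__ == "__main__":
    # Print the payload; a real client would POST it to the agent's A2A URL.
    print(json.dumps(build_a2a_request("What is on my calendar today?"), indent=2))
```

The sketch only builds the payload. Sending it is a plain HTTP POST to whatever URL the target agent exposes, which is not specified here.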

✨ Key Features

- LLM Prompt Handling: Communicate with supported large language models via prompts and receive responses.

- MCP Server Support:

  - Seamlessly integrates MCP (Model Context Protocol) servers: STDIO, HTTP, and SSE.

- A2A Protocol Support:

  - Acts as both A2A server and client

  - A2A servers can be used like tools, just like MCP tools

- AI Memory Integration:

  - Compatible with memory systems such as Mem0, or any service that speaks MCP or A2A.

- RAG (Retrieval-Augmented Generation):

  - Bring in external data sources or knowledge bases to enrich AI responses dynamically.
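The idea that A2A servers can be used like tools, just like MCP tools, can be sketched as a single registry that hides which protocol sits behind each tool name. This is an illustration of the concept only, not CleverChatty's actual implementation: `Tool`, `ToolRegistry`, and the two stand-in functions are hypothetical names, and the real transports are replaced with plain Python functions.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    name: str
    kind: str                    # "mcp" or "a2a"; labels are illustrative
    call: Callable[[str], str]   # takes the tool input, returns the tool output


class ToolRegistry:
    """Holds MCP-backed and A2A-backed tools behind one lookup (hypothetical)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"duplicate tool name: {tool.name}")
        self._tools[tool.name] = tool

    def invoke(self, name: str, payload: str) -> str:
        # The caller does not need to know which protocol backs the tool.
        return self._tools[name].call(payload)


# Stand-ins for real MCP and A2A transports (assumptions for illustration).
def fake_mcp_search(query: str) -> str:
    return f"mcp-result:{query}"


def fake_a2a_memory(text: str) -> str:
    return f"a2a-result:{text}"


registry = ToolRegistry()
registry.register(Tool("search", "mcp", fake_mcp_search))
registry.register(Tool("memory", "a2a", fake_a2a_memory))
```

With this shape, the assistant loop can call `registry.invoke("memory", "...")` without caring whether the memory provider speaks MCP or A2A.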

🖥️ CleverChatty Server

The CleverChatty server is a long-running background service that handles requests via the A2A protocol. It manages your AI models, tools, and memory systems from a centralized interface.

To start the server:

cleverchatty-server start --directory "../agent-filesystem"

The --directory option specifies the working directory for logs, config files, and STDIO-based MCP tools.

To stop the server:

cleverchatty-server stop --directory "../agent-filesystem"

This makes it easy to manage your server setup both locally and on cloud instances.
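If you manage the server from a deployment script, the two commands above can be wrapped in a small helper. This is a sketch that assumes `cleverchatty-server` is on your `PATH`; it uses only the `start`, `stop`, and `--directory` arguments shown above, and the function names are hypothetical.

```python
import subprocess
from typing import List


def server_command(action: str, directory: str) -> List[str]:
    """Build the argv list for starting or stopping the server."""
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action}")
    return ["cleverchatty-server", action, "--directory", directory]


def run_server_command(action: str, directory: str) -> int:
    """Run the command and return its exit code (assumes the binary is installed)."""
    return subprocess.run(server_command(action, directory), check=False).returncode
```

For example, `run_server_command("start", "../agent-filesystem")` runs the same start command shown earlier.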

🧪 CleverChatty CLI (Standalone Mode)

You can still run CleverChatty CLI as a standalone app — ideal for prototyping, quick tests, or single-session experiments. It includes all the same features as the server but operates in a self-contained process.

Learn how to configure and run the CLI in the CleverChatty CLI README.

Stay tuned for more updates — and start building your own multi-agent AI assistant infrastructure today with CleverChatty Server Mode + A2A support! 🚀