Model Context Protocol (MCP) has become a hot topic in discussions around AI and large language models (LLMs). It was introduced to create a standardized way of connecting “external tools” to LLMs, making them more capable and useful.

A classic example is the “What’s the weather in…” tool. Previously, each AI chat app had its own custom way of wiring up such a tool. With MCP, an integration built once can work with any AI app that supports the protocol.

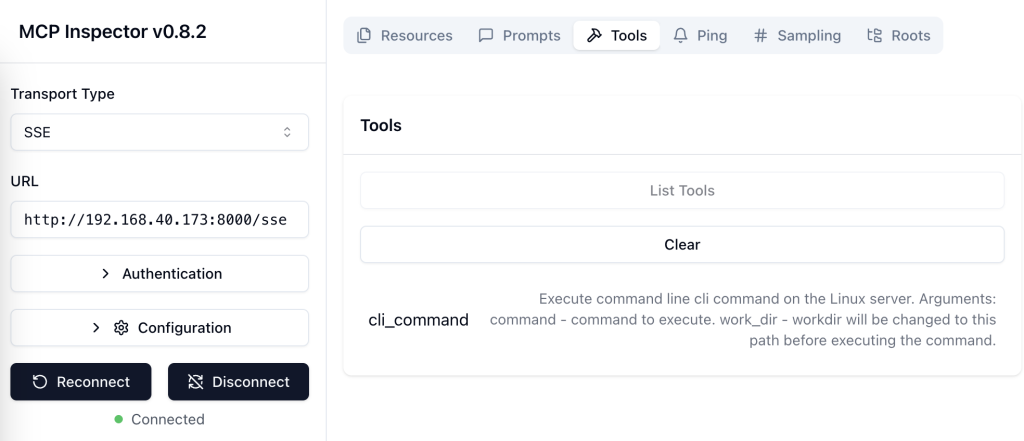

Recently, there has been a surge of enthusiasm for building MCP servers for all kinds of services, and the trend keeps growing, especially with Server-Sent Events (SSE) as the transport layer. An MCP server that uses SSE runs over plain HTTP, so it feels a lot like a SaaS backend whose client happens to be an LLM or AI chatbot.
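To make "SSE as the transport layer" a little more concrete, here is a minimal Python sketch of how a server could frame one JSON-RPC 2.0 message as a Server-Sent Events event. It assumes the HTTP+SSE transport from the 2024-11-05 MCP specification. In that transport the client sends requests with HTTP POST, and the server pushes its messages back as SSE `message` events. Newer revisions of the spec replace it with a "Streamable HTTP" transport. The `sse_event` helper is hypothetical, and official MCP SDKs handle this framing for you.

```python
import json
from typing import Any


def sse_event(event: str, data: dict[str, Any]) -> str:
    """Frame one JSON-RPC message as a single Server-Sent Events event."""
    payload = json.dumps(data, separators=(",", ":"))
    # SSE is line-based: an "event:" line, one "data:" line per payload line,
    # and a blank line that marks the end of the event.
    lines = [f"event: {event}"] + [f"data: {part}" for part in payload.splitlines()]
    return "\n".join(lines) + "\n\n"


# A JSON-RPC 2.0 response the server might push to the chat client.
frame = sse_event("message", {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}})
print(frame, end="")
```

Running it prints an `event: message` line followed by a single `data:` line that holds the compact JSON. The trailing blank line tells the client where this message ends and the next one begins.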

There are two main reasons I decided to write this article:

- First, many users are reportedly turning to AI chat apps, especially ChatGPT, instead of Google to look things up.

- Second, OpenAI has announced upcoming support for MCP in the ChatGPT Desktop app, covering both the STDIO and SSE transports.

Taken together, this points to some interesting changes on the horizon.

Real-World Use Cases We’ll See Soon

Sell With ChatGPT (Or Any AI Chat App)

Imagine you own a small flower shop and sell bouquets through your website. A customer visits, selects a bouquet, and places an order.

Now imagine there’s a button on your site: “Add this store to ChatGPT.” When clicked, your MCP endpoint is submitted to ChatGPT’s “Add External Tools” screen, and the user approves it.

Then, next time the user opens ChatGPT, the interaction could look like this:

User: I want to send flowers to my grandma.

ChatGPT: The store "Blooming Petals" has some great offers today. I recommend one of these three bouquets: ...

Would you like to pick one?

User: Option 2 looks good. Send it to my grandma’s address.

ChatGPT: Would you like to include a message on the card?

User: Yes. You decide.

And just like that, the order is placed.

What’s happening in the background:

The flower shop has an MCP server with SSE transport at an endpoint like https://flowershop.com/mcp. This server wraps around existing site functionality: search, browse, add to cart, place order, etc.

Once the user connects this endpoint to ChatGPT, it becomes a persistent tool in the user’s chat environment. Whenever a relevant topic comes up, such as sending flowers, ChatGPT can see from the tool descriptions that your MCP server is able to help and can call it to fulfill the request.
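Here is a minimal, standard-library-only Python sketch of what "wrapping existing site functionality" could look like. Each shop function becomes a tool with a description and a JSON Schema for its arguments. A single `handle` function answers the JSON-RPC `tools/list` and `tools/call` methods. The tool names (`search_bouquets`, `place_order`), the in-memory catalog, and the sample address are hypothetical. Transport, authentication, and payment are left out, and a production server would more likely be built on an official MCP SDK.

```python
import json
from typing import Any

# Hypothetical in-memory catalog standing in for the shop's real product database.
BOUQUETS = [
    {"id": "b1", "name": "Spring Tulips", "price": 35.0},
    {"id": "b2", "name": "Classic Roses", "price": 49.0},
    {"id": "b3", "name": "Wildflower Mix", "price": 29.0},
]
ORDERS: list[dict[str, Any]] = []


def search_bouquets(max_price: float) -> list[dict[str, Any]]:
    """Existing site feature: list bouquets at or below a price."""
    return [b for b in BOUQUETS if b["price"] <= max_price]


def place_order(bouquet_id: str, address: str, card_message: str = "") -> dict[str, Any]:
    """Existing site feature: record an order (payment is out of scope here)."""
    if not any(b["id"] == bouquet_id for b in BOUQUETS):
        raise ValueError(f"Unknown bouquet: {bouquet_id}")
    order = {"order_id": len(ORDERS) + 1, "bouquet_id": bouquet_id,
             "address": address, "card_message": card_message}
    ORDERS.append(order)
    return order


# Each tool pairs a model-facing description and JSON Schema with its Python handler.
TOOLS: dict[str, dict[str, Any]] = {
    "search_bouquets": {
        "description": "Find bouquets at or below a maximum price.",
        "inputSchema": {"type": "object",
                        "properties": {"max_price": {"type": "number"}},
                        "required": ["max_price"]},
        "handler": search_bouquets,
    },
    "place_order": {
        "description": "Order a bouquet for delivery, with an optional card message.",
        "inputSchema": {"type": "object",
                        "properties": {"bouquet_id": {"type": "string"},
                                       "address": {"type": "string"},
                                       "card_message": {"type": "string"}},
                        "required": ["bouquet_id", "address"]},
        "handler": place_order,
    },
}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer one JSON-RPC 2.0 request for the tools/list or tools/call method."""
    rid, method = request.get("id"), request.get("method")
    if method == "tools/list":
        tools = [{"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for name, t in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": rid, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:  # unknown tool: reported as a protocol-level error
            return {"jsonrpc": "2.0", "id": rid,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        try:
            output = tool["handler"](**params.get("arguments", {}))
        except (TypeError, ValueError) as exc:
            # Bad arguments or a failed order are reported inside the result, flagged
            # with isError, so the model can see the problem and react to it.
            return {"jsonrpc": "2.0", "id": rid,
                    "result": {"content": [{"type": "text", "text": str(exc)}], "isError": True}}
        return {"jsonrpc": "2.0", "id": rid,
                "result": {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}}
    return {"jsonrpc": "2.0", "id": rid, "error": {"code": -32601, "message": "Method not found"}}


# Example: the model has decided to order bouquet "b2" for the user's grandma.
reply = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                "params": {"name": "place_order",
                           "arguments": {"bouquet_id": "b2", "address": "12 Oak St"}}})
print(reply["result"]["content"][0]["text"])
```

The descriptions and schemas are largely what the model has to go on when it decides whether your shop can help with "send flowers to my grandma," so they are worth writing as carefully as product copy.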

Expose Your Data to AI Chat

Here’s another example: imagine you run an online community or forum. Every day, new discussions start and members interact. But now, one of your regular users spends more time in ChatGPT than browsing your site.

You can add a button: “Add this forum to ChatGPT.” Once they click and confirm, their chat experience becomes much richer:

User: What’s new?

ChatGPT: There are 5 new discussions in "TechTalk Forum" and one private message.

User: What’s the message?

ChatGPT: It’s from @alex89. They wrote: “Are you still selling your laptop?”

User: Tell them I’m not interested anymore.

What’s happening in the background:

When the user says “What’s new?”, ChatGPT looks through the tools offered by all connected MCP servers. It finds that your forum provides get_news and get_internal_message tools, calls them, interprets the responses, and replies.

In this case, your MCP server might expose functions like:

- get_news
- get_internal_message
- post_internal_message
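On the client side, "checking all connected MCP tools" roughly means asking each connected server for its tool list and remembering which server owns which tool. The Python sketch below simulates that with in-process toy servers instead of network connections. `make_server`, `ToolRouter`, the `label.tool` naming scheme, and the calendar tool are all hypothetical. In a real chat app the LLM, not hard-coded calls, decides which tool to use.

```python
from typing import Any, Callable, Optional

Request = dict[str, Any]
Response = dict[str, Any]
Server = Callable[[Request], Response]


def make_server(tools: dict[str, Callable[..., str]]) -> Server:
    """Toy in-process stand-in for a remote MCP server (tools/list and tools/call only)."""
    def err(rid: Any, code: int, message: str) -> Response:
        return {"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}}

    def serve(req: Request) -> Response:
        if req["method"] == "tools/list":
            listed = [{"name": name, "inputSchema": {"type": "object"}} for name in tools]
            return {"jsonrpc": "2.0", "id": req["id"], "result": {"tools": listed}}
        if req["method"] != "tools/call":
            return err(req["id"], -32601, "Method not found")
        params = req["params"]
        if params["name"] not in tools:
            return err(req["id"], -32602, f"Unknown tool: {params['name']}")
        text = tools[params["name"]](**params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req["id"],
                "result": {"content": [{"type": "text", "text": text}]}}
    return serve


class ToolRouter:
    """Client side: merge every connected server's tool list and route calls to the owner."""

    def __init__(self) -> None:
        self._owners: dict[str, tuple[str, Server]] = {}
        self._next_id = 0

    def _send(self, server: Server, method: str, params: Optional[dict[str, Any]] = None) -> Response:
        self._next_id += 1
        req: Request = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            req["params"] = params
        return server(req)

    def connect(self, label: str, server: Server) -> None:
        for tool in self._send(server, "tools/list")["result"]["tools"]:
            # Prefix with the server label so two servers can both offer "get_news".
            self._owners[f"{label}.{tool['name']}"] = (tool["name"], server)

    def available_tools(self) -> list[str]:
        return sorted(self._owners)

    def call(self, qualified_name: str, **arguments: Any) -> str:
        name, server = self._owners[qualified_name]
        resp = self._send(server, "tools/call", {"name": name, "arguments": arguments})
        if "error" in resp:
            raise RuntimeError(resp["error"]["message"])
        return resp["result"]["content"][0]["text"]


# Hypothetical connected services for the forum conversation above.
router = ToolRouter()
router.connect("forum", make_server({
    "get_news": lambda: "5 new discussions, 1 private message",
    "get_internal_message": lambda: "@alex89: Are you still selling your laptop?",
    "post_internal_message": lambda to, text: f"Sent to @{to}: {text}",
}))
router.connect("calendar", make_server({
    "create_event": lambda title, day: f"Booked '{title}' on {day}",
}))
print(router.available_tools())
print(router.call("forum.post_internal_message", to="alex89", text="Not interested anymore."))
```

Prefixing each tool with its server label is one simple way to keep tools from different servers from colliding. Actual chat clients may resolve name clashes differently.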

With just a bit of development work, any site can integrate directly into ChatGPT (or any MCP-supporting AI chat app), creating an entirely new user experience.

Voice + LLM + MCP = Magic

Now imagine combining this with speech recognition. Some ChatGPT apps already support voice conversations. Add in a few trusted MCP servers and suddenly the experience feels like science fiction:

“Book me a doctor’s appointment next week, and tell my boss I’ll need to leave work early.”

And it just… happens.

Behind the scenes, this user has connected a set of services that each expose an MCP server: email, messenger, and calendar, but also a preferred medical provider’s website, a car-service company’s website, and so on.

MCP Might Soon Be a “Must-Have” for Websites

Just as RSS feeds were once standard, and just as websites now include “Follow us on social media” buttons, we may soon see buttons like:

“Add us to your AI chat.”

It might become common for users to manage a list of “connected MCP tools” inside their AI chat settings—just like browser extensions today.

For businesses, adding MCP support could mean a new source of traffic, leads, or orders. It’s a lightweight way to get integrated into where users spend their time.

A Possible Side Effect: The Next Web Evolution?

If many sites adopt MCP, we could be entering a new phase of the web—perhaps something like Web 3.0 (in the original, data-centric sense).

In this new model, websites expose services and data in a standard way, optimized for AI systems. Users may rely less on visual interfaces and instead interact through chat, text, or voice, powered by LLMs.

Businesses might no longer need to invest heavily in traditional UI/UX, because the chat becomes the interface.

Final Thoughts

The rise of MCP opens up a new frontier for internet usage. AI chat tools aren’t just about answering questions anymore—they’re becoming gateways to services, commerce, and everyday tasks.

If you’re building a web service or product, adding an MCP interface might soon be as important as having a mobile-friendly design or social media presence.

The age of AI-integrated web services is just beginning. Now is the time to prepare.