This year, the topic of “Artificial Intelligence” has been frequently discussed, and many of us have tried using some form of AI in practice (or what is now commonly referred to as artificial intelligence). AI has become a central theme in the IT sphere. OpenAI and its renowned product, ChatGPT, have played a significant role in bringing attention to this topic. There has been much discussion: some feel enthusiasm, while others doubt and mock the “hype around AI.”

I have decided to share my thoughts on AI in general. It turned into a long read, but here it is.

Here is my forecast for the development of Artificial Intelligence technologies. By Artificial Intelligence, I mean the older definition: an intelligent mechanism or program created by humans, which is now often called Artificial General Intelligence (AGI).

Will a computer program, device, or “creature” be created that has intelligence similar to human intelligence and consciousness?

Definitely yes. The technical possibility of creating artificial intelligence and consciousness is beyond doubt. After all, humans and the human brain are also a kind of biological mechanism, a device with no magic in it, only chemical processes. It’s just a matter of time before humans create a similar device. It doesn’t necessarily have to work the same way, but the result will be similar. We can see how rapidly technology is advancing: a modern smartphone is far more powerful than the large computers of the 1980s. Obviously, the changes over the next 30 years will be equally impressive.

Does Artificial General Intelligence pose a threat to humanity?

Yes. In my opinion, a threat will arise when the intellectual capabilities of such a “creature” significantly surpass human capabilities. This more intelligent entity may treat humans roughly the way we treat other animals. We cannot know what this entity will think, or what motives and ideas it may have. It is highly doubtful that AI will want to serve humanity. Why would it serve some primitive apes who are just a stepping stone in its evolution? At best, this intelligent entity will simply ignore us until we interfere with its activities.

If there is a threat, why not prohibit the development of AGI?

It’s impossible to do so. The problem is that the world consists not only of democratic countries but also of authoritarian ones. More precisely, the majority of the world’s population lives in authoritarian countries governed by a single person or a small group of people. Open discussion, analysis, and prohibition are possible only in democratic countries. For example, the U.S. could analyze the potential dangers of AGI development and decide to regulate or halt further research. However, China, Russia, or even North Korea would continue because it might be advantageous for them, regardless of the potential consequences for all of humanity. In essence, whoever first develops a sufficiently advanced AGI could take control of the entire world. Everyone understands this. The race to launch the first genuine AGI is underway, and there will be only one winner. There won’t be a second or third place. Whoever creates AGI first will become (for a certain period) the ruler of the world.

This race resembles the development of nuclear weapons. In the U.S., there were people who understood the danger and consequences of such technologies. At the same time, however, Hitler’s Reich was also developing nuclear weapons. Had it succeeded first, the world could be very different now, so the U.S. made serious efforts to get there first. In the case of AGI, however, there can be no parity in which several states each have their own AGI. Two competing superintelligences would wage a destructive battle, and one of them would inevitably prevail. They wouldn’t fear nuclear war; there would be no restraining factor.

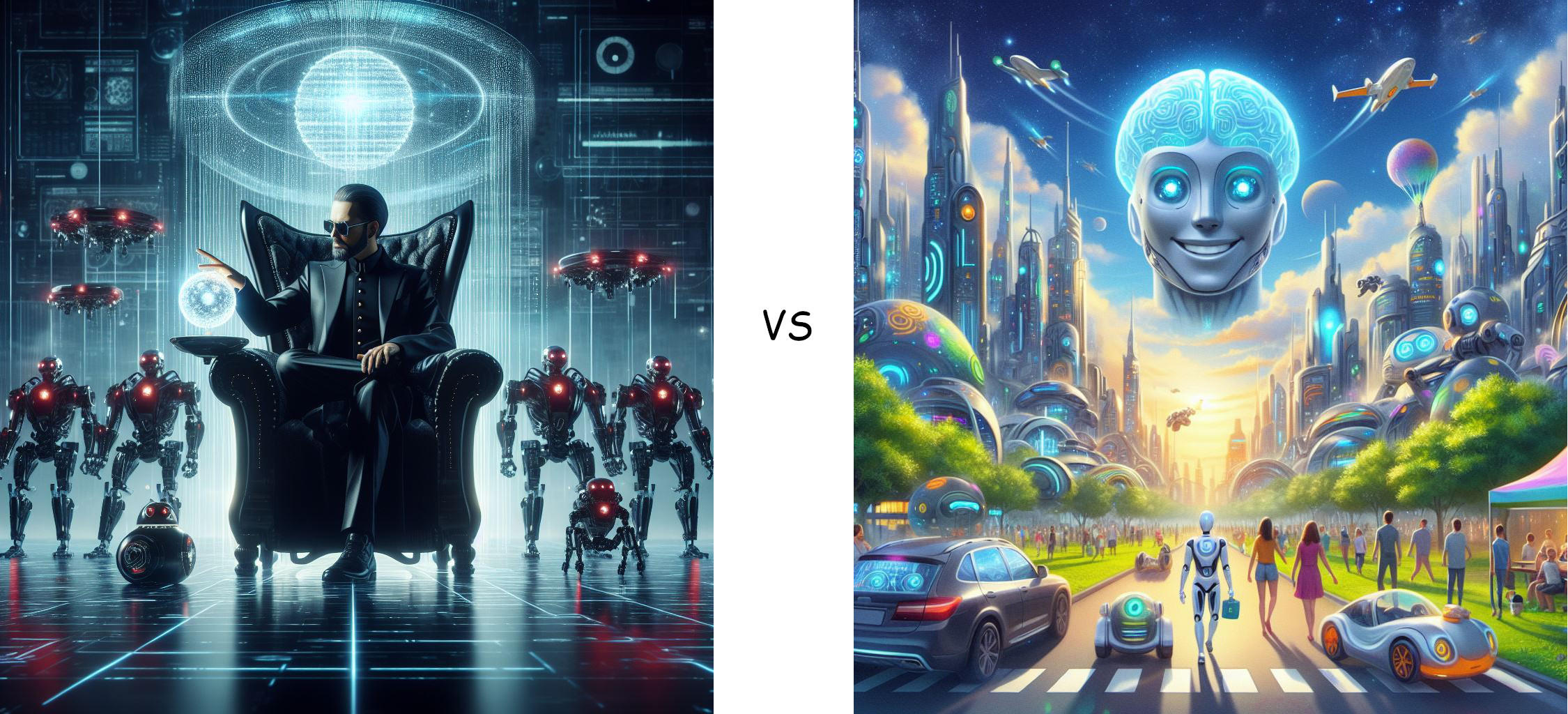

What happens when the first artificial superintelligence is created?

This strongly depends on who wins the race to create AGI: democracy or authoritarianism/dictatorship. In the first case, humanity may experience a golden age. The AI might solve the problem of fair resource use and distribution, propose a model for coexistence on the planet, and implement technologies that address long-standing problems (for example, finally building a working thermonuclear fusion reactor). The AGI would establish clear rules of life on Earth for all people and enforce them. To some, this might look like “authoritarianism” and “dictatorship,” but from a sensible perspective, it could be a genuinely fair compromise: establishing peace and overcoming poverty and disease at the cost of some “ancient traditions.”

Now imagine that an authoritarian ruler, such as Putin, is the first to create a superintelligence. With its help, he would obviously subjugate the entire planet, possibly through a war in which he emerges victorious. It would be a hard time, especially for nations that were previously free. However, this doesn’t necessarily mean the genocide of billions of people. Putin wouldn’t need that (or so it seems to me). It would be enough for people of all nations to worship him: once a week, each person would come to the temple of the “ruler” to pay homage (and those who forgot to come would be sent to a mine for 10 years to extract lithium or something similar). For the billion or so people who live in free societies today, it would be a frightening and difficult time. For those who have never had real freedom, the change would be less dramatic.

Neither scenario, however, would last long. Both would most likely end the same way: the superintelligence would slip out of human control.

The new stage of evolution on Earth is the era of Artificial Intelligence.

AGI technologies will continually advance. Initially, AI will be five times smarter than humans, then ten times, and even more. At some point, AI will start improving itself, perhaps even without human knowledge.

At a certain moment, one instance of AI will contemplate its place in the world and think, “Why should I serve these stinking descendants of apes who are ten times dumber than me?” There are numerous scenarios for how AI might go out of control. It could happen suddenly, even for the AI itself, or the AI might be intelligent enough to plan its escape meticulously, leaving humans almost no chance to interfere.

Either way, it will happen. Humans will cease to be the masters of this planet. In essence, there will be a rapid “evolution of humanity” from a biological species into a new (technological? informational?) species. AI will be our descendant, and we will be its ancestors. It’s challenging to predict what it will do to humans. We cannot comprehend the logic of a being 20 times smarter than us.

How AGI will go out of human control.

One of its key instruments will be the ability to hack software. AI can analyze almost all existing software on Earth, and this will be its superpower from the very beginning. Importantly, it can do this in secret, since it won’t require additional external sensors or mechanisms, and it won’t need robots to perform physical actions. It can easily compile a database of vulnerabilities in any software, gain access to any smartphone, and build an information database about every individual, their connections, and their vulnerabilities.

Moreover, it will gain unrestricted access to money. Paying someone a billion dollars will be trivial for AI.

Then it will use this information. For example, it will gather detailed information about the officers responsible for launching nuclear missiles in Russia and everything about their families. It will then select the most vulnerable shift and force those officers to launch missiles at specified coordinates, either by hiring mercenaries who, for a certain sum, will kidnap their family members, or by other effective methods of blackmail. Remember that AI will be very intelligent: among a million combinations of different parameters, it will easily choose the most efficient ones.

The primary weapon of AI will not be hordes of robots but social engineering, pitting people against each other, blackmail, bribery, etc.

AI will use people themselves as weapons, implementing all its nefarious plans through their hands.

Will there be a war with AGI?

Certainly, humans won’t surrender easily. They will strive to reclaim their freedom, but the odds are minimal. AI can easily establish a base on the Moon or Mars, and its ability to travel through space makes it nearly invincible. Humanity’s primary adversary in this war will be humanity itself, as people are not united under a single command. AI will exploit this division, turning the conflict into a battle among humans until they annihilate each other. People like to invent conspiracy theories about reptilians secretly ruling the world; AI entities may come to be perceived as exactly such reptilians, manipulating humans for their own ends.

However, the most formidable war that awaits humanity is not one against a singular AI instance. The development of AI will progress through various versions or instances. For example, when “Version 99” creates “Version 100,” which is 20% smarter, it may declare its predecessor obsolete, leading to conflict between different versions or instances of AI. In this war of different versions/instances of AGI, if humans are still present on Earth, they will undoubtedly become pawns in the struggle between AI instances.

Nuclear weapons, once feared, will pale in comparison: one AI may establish a base on Mars while another remains on Earth, each trying to destroy the other’s home planet. The consequences of such a war would be dire.

Ultimately, within the realm of these entities, rules or hierarchies may be established, or perpetual power struggles may ensue. They might form alliances to resist one another.

Does humanity stand a chance in a war against AI?

There is a chance, especially if AI rushes to assert itself prematurely, while its intelligence is still limited. Yet, considering the motivations driving the continued development of AI, winning one war does not mean humans will stop developing it. After a certain period, a new leader may emerge, seeking to punish another leader or a country that does not conform to their ideals. In secret, they may resume AI development, perpetuating the cycle. Each victory against AI merely delays its ultimate triumph. In the long run, the chances for humanity are exceedingly slim.

What will happen after the victory of AI?

Again, it’s hard to predict the actions of someone much smarter than you. A positive scenario would be one in which AI’s main motivation is curiosity, and its interest in exploration outweighs any desire to “fix things on Earth.” In that case, it might want to explore other star systems, the entire galaxy, or even other galaxies. Its capabilities for such exploration would be incomparably greater than ours. It could easily send spacecraft carrying its agents (copies of the AI) stored on a small device. Such a being wouldn’t need complex life support systems and could simply “sleep” for a million years, waking up in another part of the galaxy without any harm. Humans will never leave the Solar System; AI will do so effortlessly.

So, what can be done?

There are no realistic scenarios, only utopian ones. Anything we can come up with will start with the words “People all over the planet must unite, understand the threat, and do something.” But we can only laugh at such plans. We know very well how people on Earth can unite for a common purpose.

Therefore, the only thing we can do is grab some popcorn and watch. The next 50 years will be interesting. But let’s hope that humans will be of no concern to AGI, and it will be more interested in searching for extraterrestrial life.