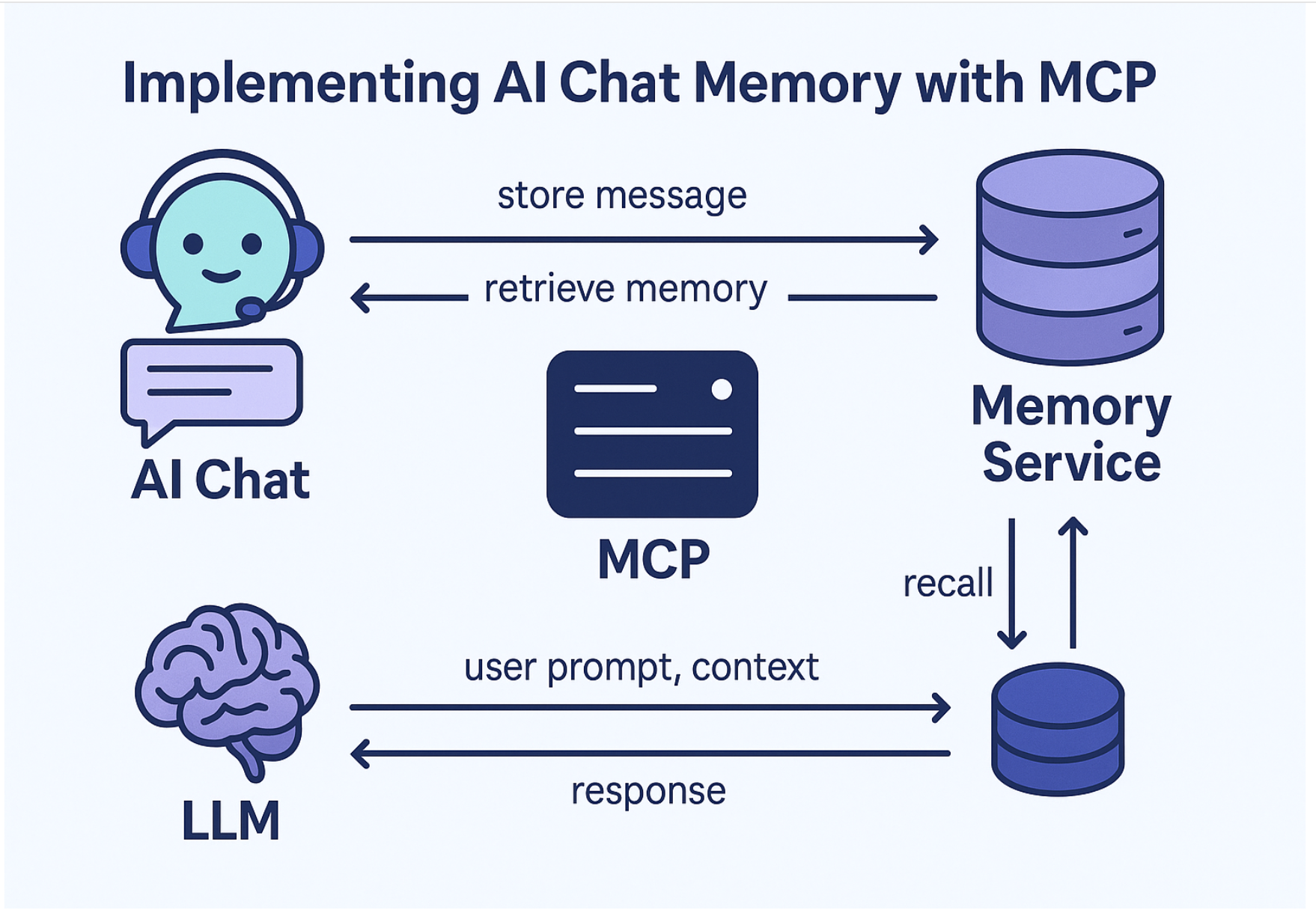

Recently, I introduced the idea of using MCP (Model Context Protocol) to implement memory for AI chats and assistants. The approach separates the assistant's memory from its core logic and moves that memory into a dedicated MCP server.

If you're unfamiliar with this approach, I suggest reading my earlier article: Benefits of Using MCP to Implement AI Chat Memory.

What Do I Mean by “AI Chat”?

In this context, an "AI Chat" is an AI assistant that has a chat interface, is built around an LLM (Large Language Model), and can call external tools via MCP. ChatGPT is a good example.

Throughout this article, I’ll use the terms AI Chat and AI Assistant interchangeably.
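To make the separation concrete, here is a minimal sketch of the setup described above. It is only an illustration, not a real MCP implementation: `MemoryServer` is an in-process stand-in for a dedicated memory server, the tool names `remember` and `recall` are hypothetical, and the "LLM" is any function that maps a prompt to a reply. The point is that the assistant holds no memory state of its own and reaches memory only through tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MemoryServer:
    """In-process stand-in for a dedicated memory server (not real MCP)."""
    facts: list[str] = field(default_factory=list)

    def call_tool(self, name: str, arguments: dict) -> list[str]:
        # Hypothetical tool names, chosen for illustration only.
        if name == "remember":
            self.facts.append(arguments["text"])
            return [arguments["text"]]
        if name == "recall":
            # Naive retrieval: return facts sharing at least one word with the query.
            query_words = set(arguments["query"].lower().split())
            return [f for f in self.facts if query_words & set(f.lower().split())]
        raise ValueError(f"unknown tool: {name}")


@dataclass
class Assistant:
    """Core chat logic: keeps no memory itself, only a handle to the server."""
    llm: Callable[[str], str]
    memory: MemoryServer

    def chat(self, user_message: str) -> str:
        # 1. Ask the memory server for anything relevant to this message.
        recalled = self.memory.call_tool("recall", {"query": user_message})
        # 2. Build the prompt from recalled facts plus the new message.
        prompt = "Known facts: " + "; ".join(recalled) + "\nUser: " + user_message
        reply = self.llm(prompt)
        # 3. Store the new message so later turns can recall it.
        self.memory.call_tool("remember", {"text": user_message})
        return reply


if __name__ == "__main__":
    # A fake LLM that echoes its prompt, so the recalled context is visible.
    bot = Assistant(llm=lambda prompt: prompt, memory=MemoryServer())
    bot.chat("My dog is named Rex")
    print(bot.chat("What is my dog called?"))
```

Because the assistant only ever talks to `call_tool`, the stand-in could in principle be swapped for a client of an actual MCP server without touching the chat logic, which is the benefit the approach is after.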