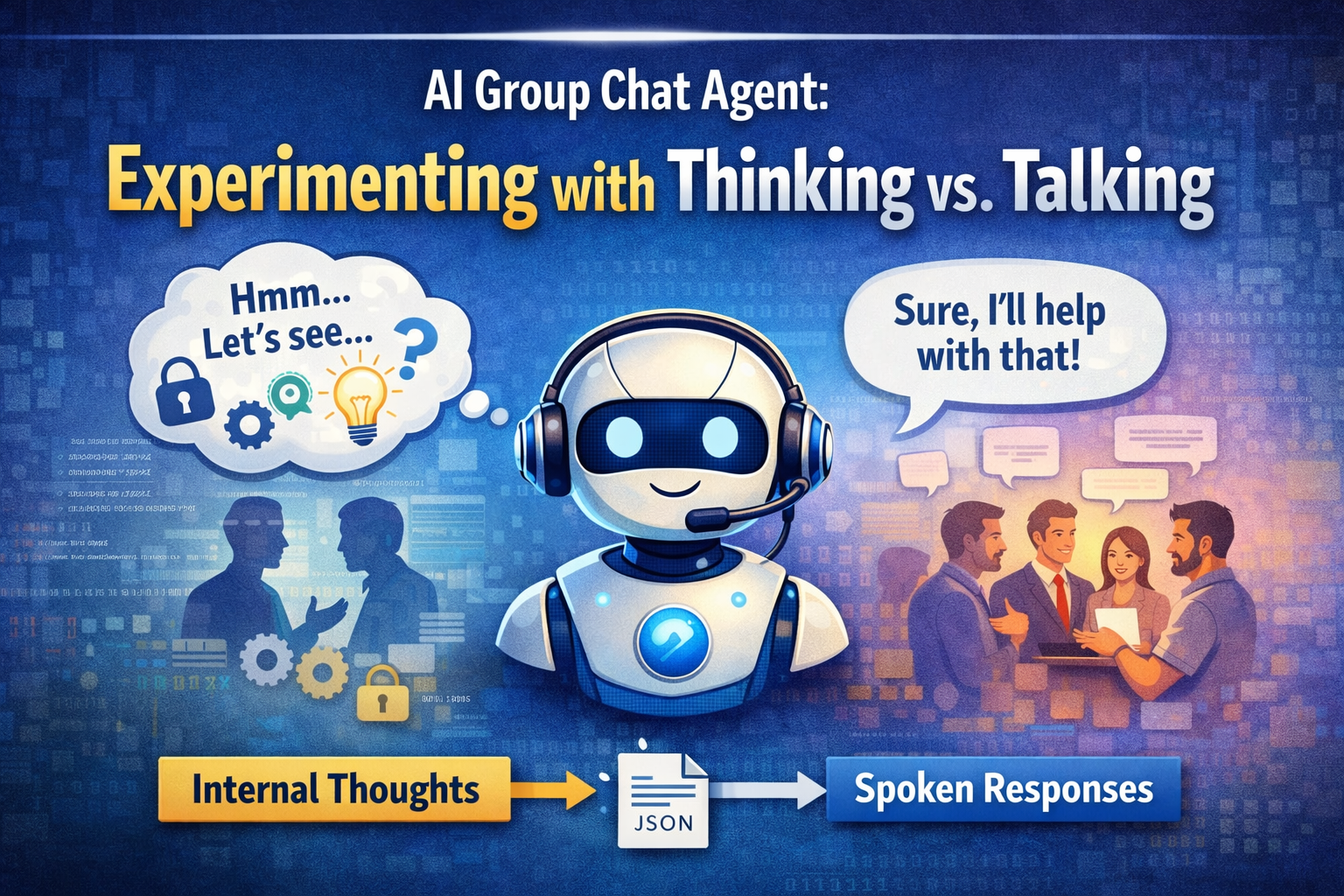

I've been working on a simple but interesting experiment with LLMs: can they actually separate what they're thinking from what they say? This might sound trivial, but it matters if we want to build AI agents that understand context and know when to keep information private.

This is part of an ongoing series of experiments published at https://github.com/Gelembjuk/ai-group-chats/ where I'm exploring different aspects of AI agent behavior in group conversations.

For detailed technical documentation, examples, and setup instructions, see the complete guide.