I would like to highlight one more benefit of the Model Context Protocol (MCP): the ability to change the transport protocol easily. Three transport protocols are currently available, and each has its own benefits and drawbacks.

However, if an MCP server is implemented properly using a good SDK, then switching to another transport protocol is easy.
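To make this concrete, here is a minimal sketch of why the switch can be cheap. It is not taken from any particular SDK; it only assumes that the server's logic lives in one transport-independent function and that each transport is a thin adapter around it. The names `TOOLS`, `handle_request`, `serve_stdio`, and `to_sse_event`, as well as the `echo` tool, are hypothetical, and the message shapes are simplified compared with a real MCP server.

```python
import io
import json

# Hypothetical tool registry; the "echo" tool exists only for illustration.
TOOLS = {"echo": lambda args: args.get("text", "")}


def handle_request(request: dict) -> dict:
    """Transport-independent core: one JSON-RPC request in, one response out."""
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32602, "message": "Unknown tool"}}
    text = str(tool(params.get("arguments", {})))
    # Simplified result shape: a single text content item.
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": text}]}}


def serve_stdio(in_stream, out_stream) -> None:
    """stdio adapter: read one JSON message per line, write one per line."""
    for line in in_stream:
        if line.strip():
            response = handle_request(json.loads(line))
            out_stream.write(json.dumps(response) + "\n")
            out_stream.flush()


def to_sse_event(response: dict) -> str:
    """SSE adapter (framing only): wrap a response as a Server-Sent Events message."""
    return f"event: message\ndata: {json.dumps(response)}\n\n"


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "echo", "arguments": {"text": "hi"}}}
    # Same core logic, two different framings.
    out = io.StringIO()
    serve_stdio(io.StringIO(json.dumps(request) + "\n"), out)
    print(out.getvalue(), end="")
    print(to_sse_event(handle_request(request)), end="")
```

In this sketch, `handle_request` never learns how a message arrived, so adding or swapping a transport only means writing another small adapter. A real SDK would also handle initialization, sessions, and the HTTP side of SSE, which are left out here.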

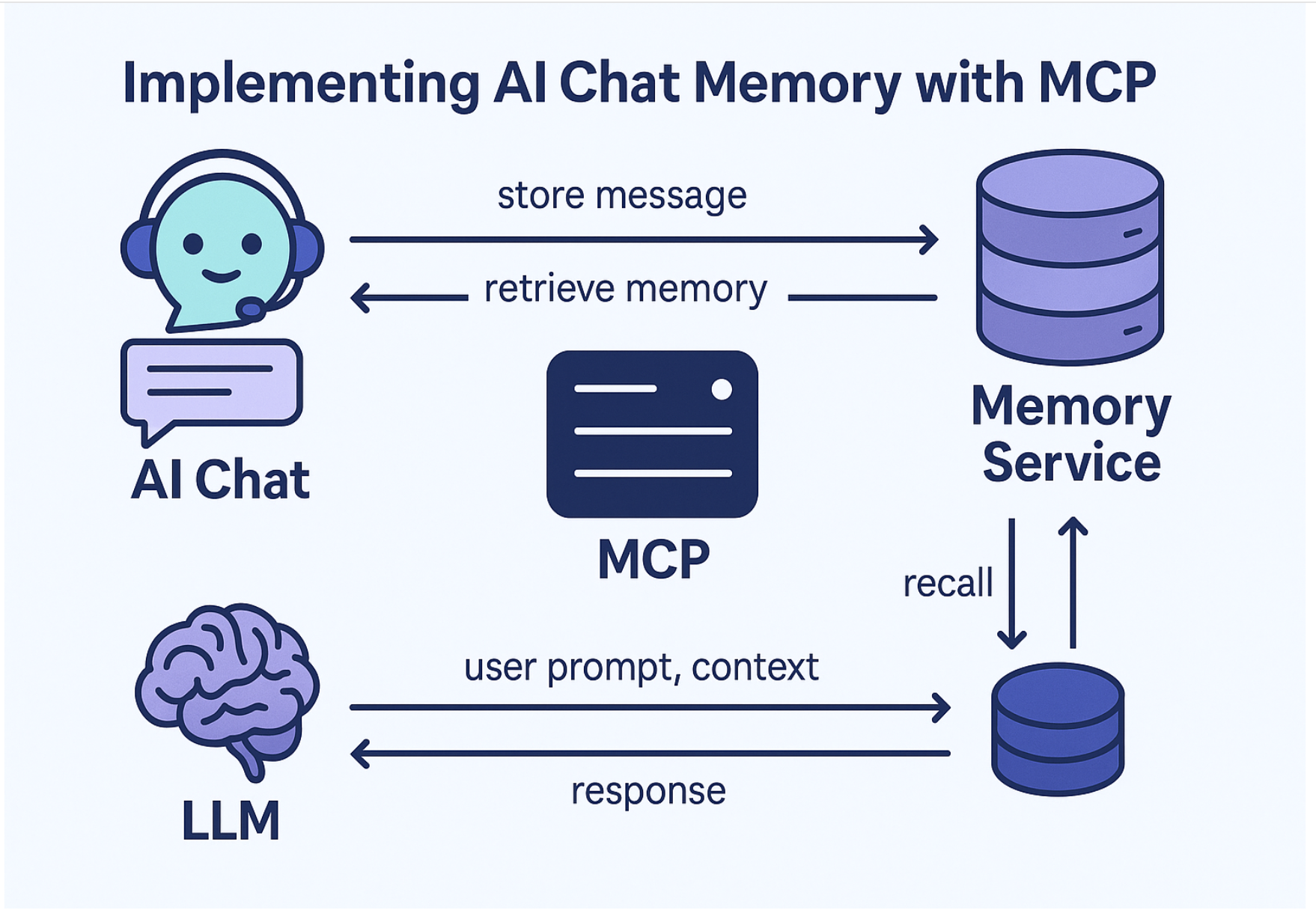

Quick Recap: What is MCP?

- Model Context Protocol (MCP) is a new standard for integrating external tools with AI chat applications. For example, you can add Google Search as an MCP server to Claude Desktop, allowing the LLM to perform live searches to improve its responses. In this case, Claude Desktop is the MCP Host.

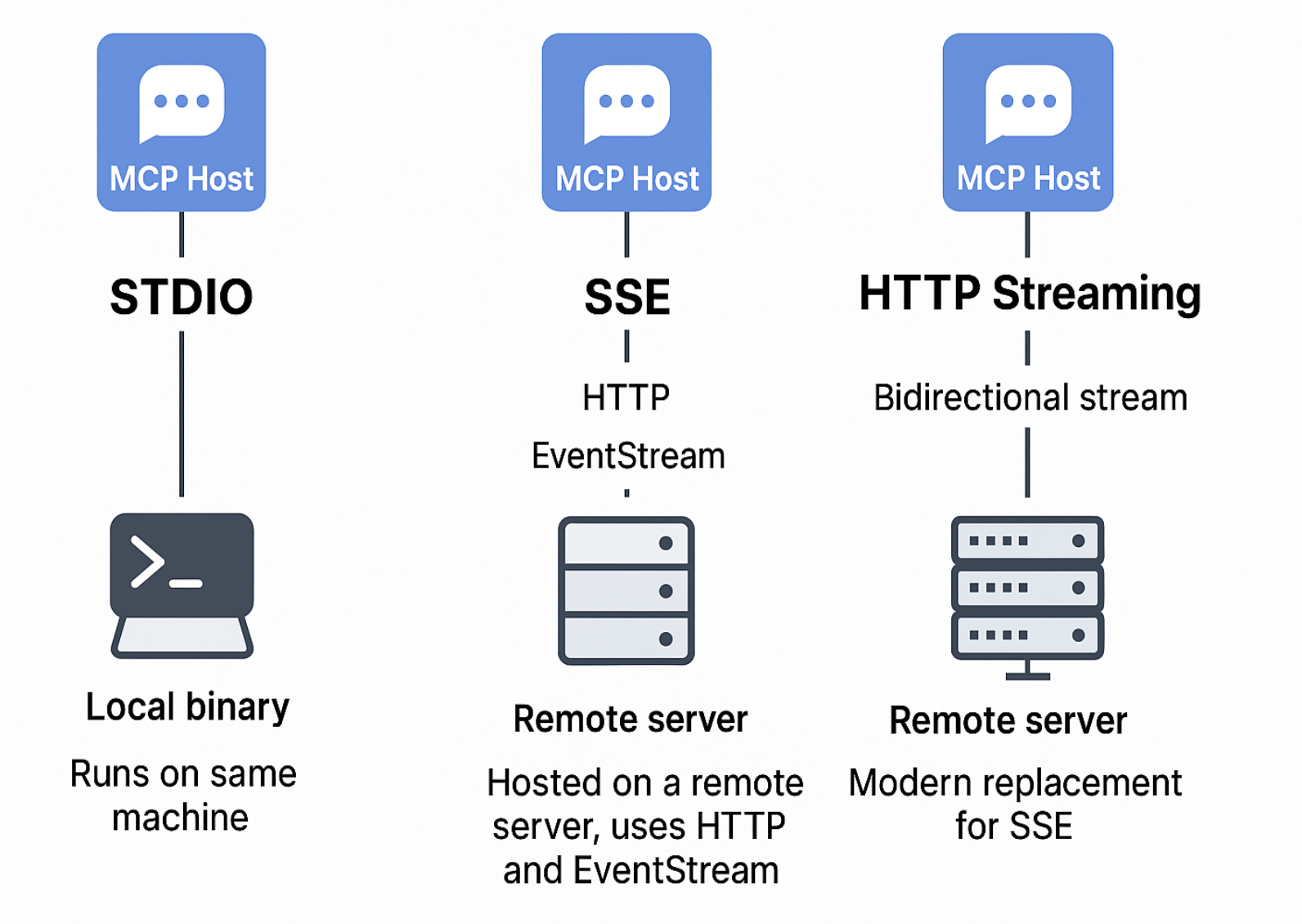

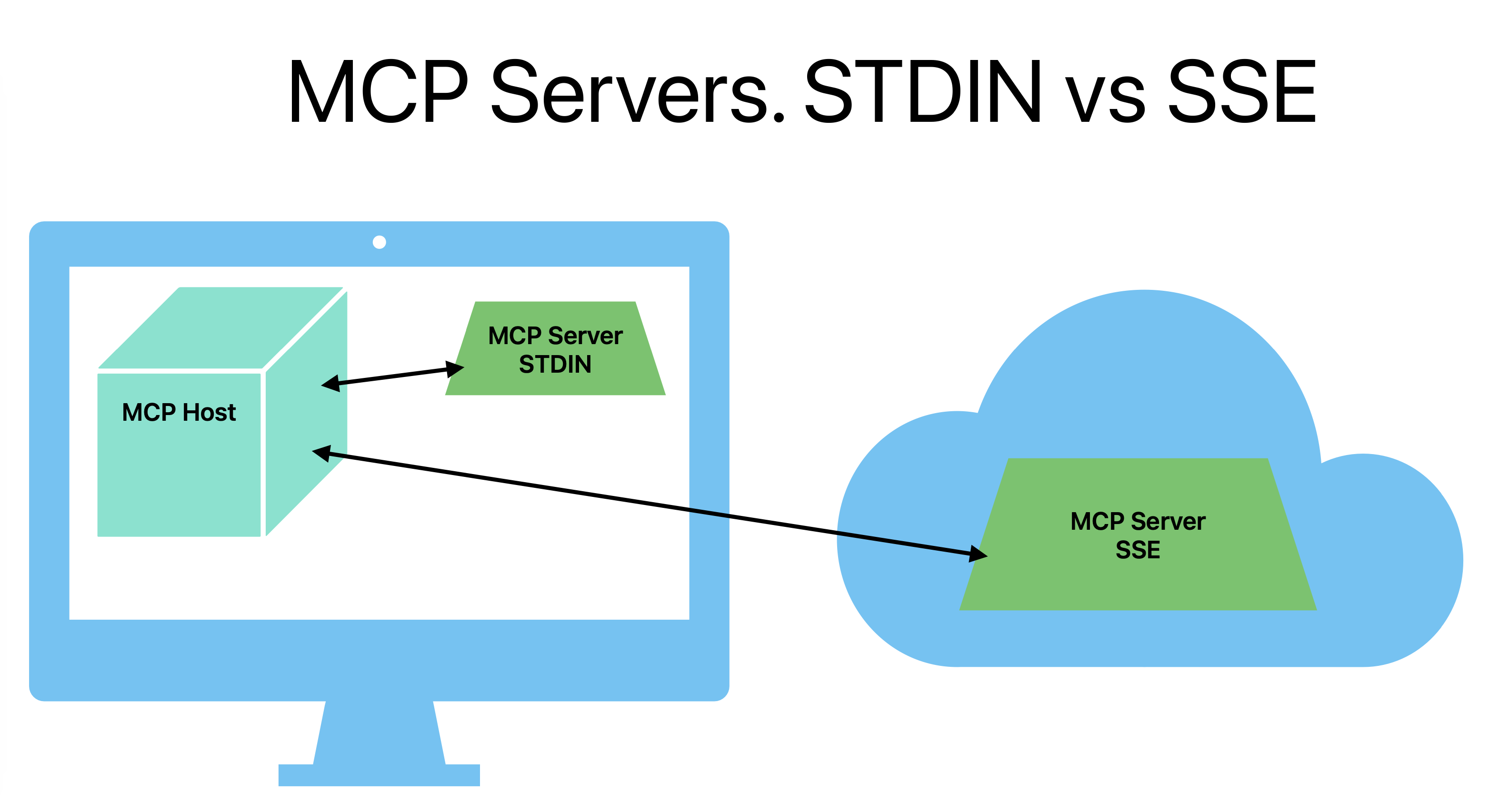

There are three common types of MCP server transports:

- STDIO Transport: The MCP server runs locally on the same machine as the MCP Host. Users download a small application (the MCP server), install it, and configure the MCP Host to communicate with it via standard input/output.

- SSE Transport: The MCP server runs as a network service, typically on a remote server (but it can also be on localhost). It's essentially a special kind of website that the MCP Host connects to via Server-Sent Events (SSE).