Good news! I've extended my lightweight AI orchestrator, CleverChatty, to support Retrieval-Augmented Generation (RAG), integrated through the Model Context Protocol (MCP).

Quick Recap

-

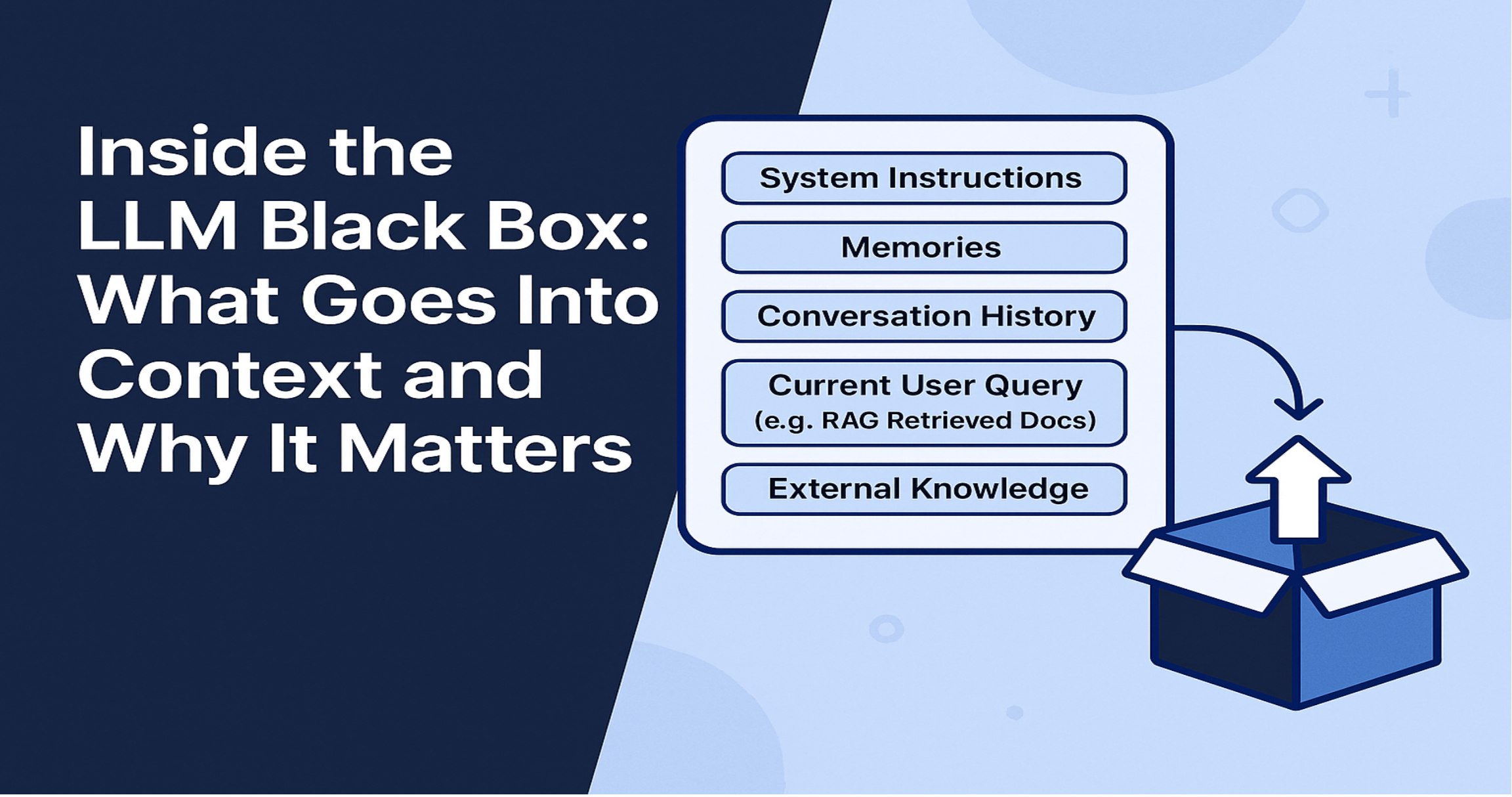

RAG (Retrieval-Augmented Generation) is an AI technique that enhances language models by retrieving relevant external documents (e.g., from databases or vector stores) based on a user’s query. These documents are then used as additional context during response generation, enabling more accurate, up-to-date, and grounded outputs.

- MCP (Model Context Protocol) is a standard for how external systems (such as tools, memory, or document retrievers) communicate with language models. It enables structured, portable, and extensible context exchange, making it ideal for building complex AI systems like assistants, copilots, or agents.

- CleverChatty is a simple AI orchestrator that connects LLMs with tools over MCP and supports external memory. My goal is to expand it to work with modern AI infrastructure: RAG, memory, tools, agent-to-agent (A2A) interaction, and beyond. It’s provided as a library, and you can explore it via the CLI: CleverChatty CLI.
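To make the recap concrete, here is a minimal Python sketch of the two ideas working together: a retrieval step that picks relevant documents and adds them to the prompt, and the shape of an MCP request that could ask a server to run that retrieval. It is an illustration under assumptions, not CleverChatty's actual code. The document list, the word-overlap scoring (a stand-in for embedding similarity in a vector store), the helper names, and the `knowledge_search` tool name are all hypothetical. The only part taken from the MCP specification is that messages are JSON-RPC 2.0 and that tools are invoked with the `tools/call` method.

```python
import json
import re

# Hypothetical in-memory "knowledge base"; a real setup would query a vector store.
DOCUMENTS = [
    "CleverChatty connects LLMs with tools over MCP.",
    "RAG retrieves relevant documents and adds them to the prompt.",
    "Bananas are rich in potassium.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by word overlap with the query; drop documents with no overlap."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, context_docs):
    """Prepend the retrieved documents to the user's question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Context:\n{context}\n\nQuestion: {query}"


def mcp_tool_call(request_id, tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


if __name__ == "__main__":
    query = "How does RAG use documents?"
    # "knowledge_search" is an assumed tool name, used only for illustration.
    print(json.dumps(mcp_tool_call(1, "knowledge_search", {"query": query}), indent=2))
    print(build_prompt(query, retrieve(query, DOCUMENTS)))
```

In this sketch the orchestrator would send the `tools/call` request to a RAG server, receive the matching documents, and pass the augmented prompt to the LLM. Swapping the overlap scoring for real embedding search would not change the request shape.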