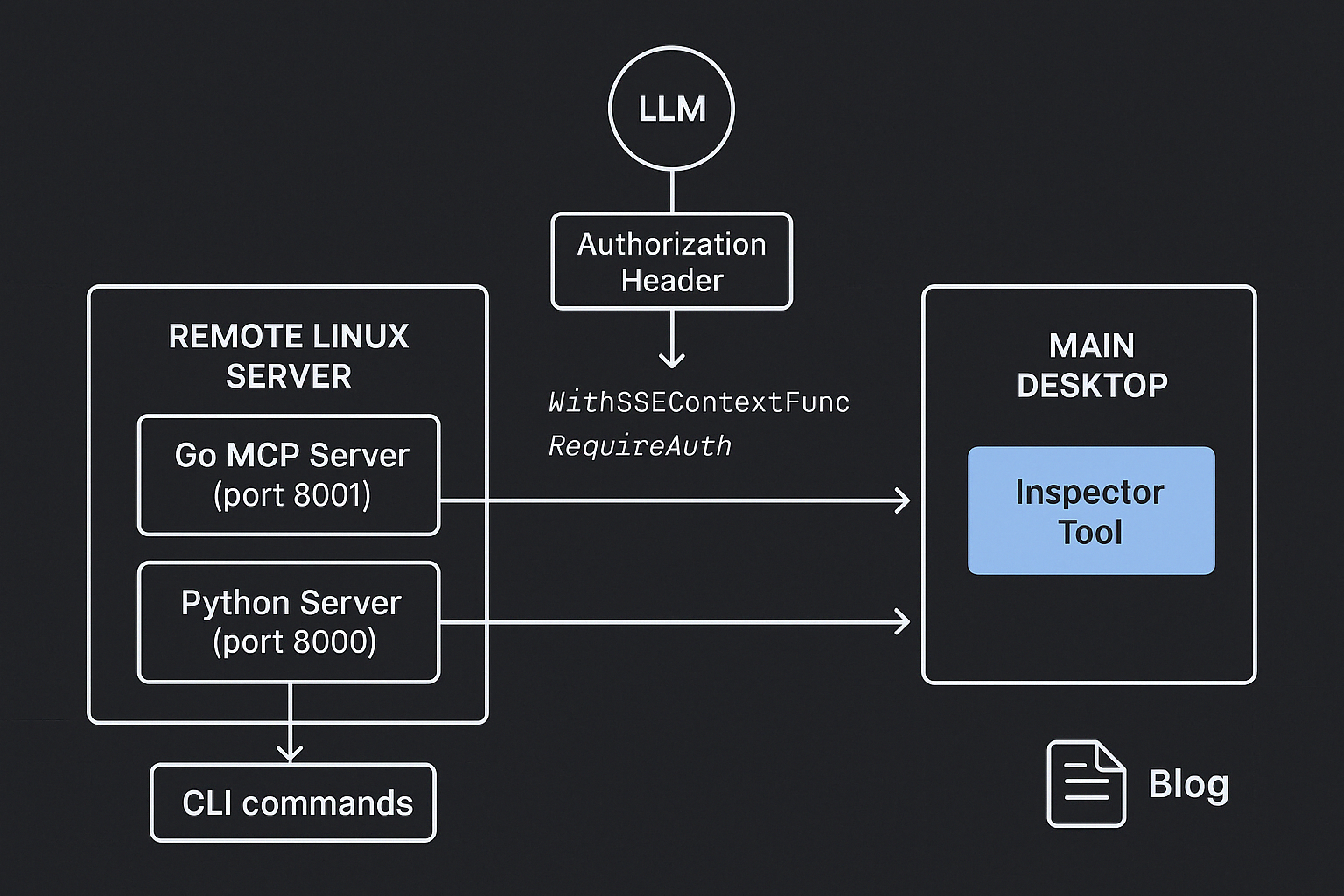

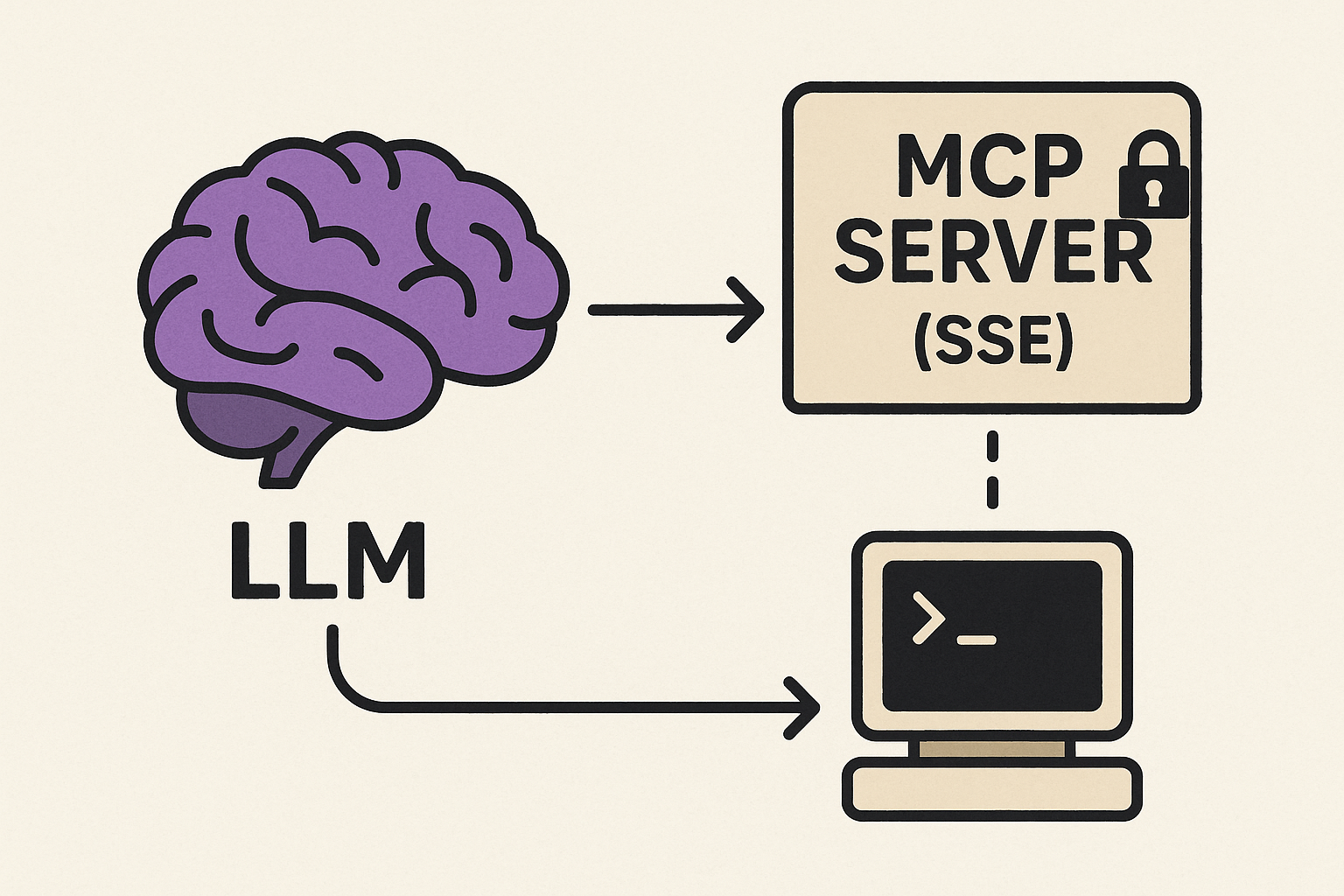

Today, I want to show how Model Context Protocol (MCP) servers using SSE transport can be made secure by adding authentication.

I'll use the `Authorization` HTTP header to read a Bearer token. Generating the token itself is out of scope for this post; it works the same way as in any ordinary web application.
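
To make the header handling concrete, here is a minimal sketch in Python of pulling a Bearer token out of an `Authorization` header value and comparing it to an expected secret. The function names `extract_bearer_token` and `is_authorized` are hypothetical, and comparing against a single shared secret is an assumption for illustration only; a real server would more likely validate a signed or stored token.

```python
import hmac
from typing import Optional


def extract_bearer_token(authorization_header: Optional[str]) -> Optional[str]:
    """Return the token from a 'Bearer <token>' header value, or None."""
    if not authorization_header:
        return None
    # Split into the scheme and the credentials on the first run of whitespace.
    parts = authorization_header.strip().split(None, 1)
    if len(parts) != 2:
        return None
    scheme, token = parts
    # The auth scheme name is case-insensitive.
    if scheme.lower() != "bearer":
        return None
    token = token.strip()
    return token or None


def is_authorized(authorization_header: Optional[str], expected_token: str) -> bool:
    """Check the header against one expected token (assumption: a shared secret)."""
    token = extract_bearer_token(authorization_header)
    if token is None:
        return False
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(token.encode("utf-8"), expected_token.encode("utf-8"))
```

A server would call something like `is_authorized(request_headers.get("Authorization"), secret)` before opening the SSE stream, and answer with HTTP 401 when it returns `False`.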

To verify how this works, you’ll need an MCP host tool that supports SSE endpoints along with custom headers. Unfortunately, I couldn’t find any AI chat tool that currently supports this: Claude Desktop doesn’t, and I haven’t come across any others that do.
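
As a rough illustration of what "SSE endpoint with custom headers" means on the client side, the sketch below builds (but does not send) the HTTP request a host would need to issue. The URL `http://localhost:8080/sse`, the token value, and the helper name `build_sse_request` are all assumptions made up for this example; they are not taken from mcphost or any other tool.

```python
import urllib.request


def build_sse_request(url: str, token: str) -> urllib.request.Request:
    """Build a GET request for an SSE endpoint that carries a Bearer token."""
    return urllib.request.Request(
        url,
        headers={
            # The custom header the MCP host must be able to send.
            "Authorization": f"Bearer {token}",
            # Ask the server for an event stream.
            "Accept": "text/event-stream",
        },
    )


if __name__ == "__main__":
    # Hypothetical endpoint and token, for illustration only.
    request = build_sse_request("http://localhost:8080/sse", "my-secret-token")
    for name, value in request.header_items():
        print(f"{name}: {value}")
```

Passing the request to `urllib.request.urlopen` would open the stream; the point here is only that the host has to let you attach the `Authorization` header to that initial request.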

However, I’m hopeful that most AI chat tools will start supporting it soon, since there’s really no reason not to. By the way, I shared my thoughts on how MCP could transform the web in a separate post.

For my experiments, I’ve modified the mcphost tool. I’ve submitted a pull request with my changes and hope it gets accepted. For now, I’m using a locally modified version. I won’t go into the details here, since the focus is on MCP servers, not clients.